Message boards : News : WU: OPM simulations

| Author | Message |

|---|---|

|

Hi everyone. | |

| ID: 43305 | Rating: 0 | rate:

| |

ha, I was lucky to get 2 of them for crunching | |

| ID: 43307 | Rating: 0 | rate:

| |

|

Nice, impressive structures to simulate. | |

| ID: 43308 | Rating: 0 | rate:

| |

|

For the moment we are just checking whether the automatic preparation can produce working simulations. Once the simulations are completed I will check for any big changes that happened (changes in protein structure, detachment from the membrane) and try to determine what caused those changes if possible. | |

| ID: 43311 | Rating: 0 | rate:

| |

|

I see tasks ready to send on the server status page :-) | |

| ID: 43319 | Rating: 0 | rate:

| |

|

I am sending them now. Please report any serious issues here. | |

| ID: 43328 | Rating: 0 | rate:

| |

|

OPM996 is classed as a Short Run, but on a 980ti it looks like it will take around 7 hours | |

| ID: 43329 | Rating: 0 | rate:

| |

|

Server Status calls it Short Run but in BOINC under Application it's Long Run. Got 2 already running fine. | |

| ID: 43330 | Rating: 0 | rate:

| |

|

On my 980ti it is running as short run and will take over 7 hours or longer to complete at current rate | |

| ID: 43331 | Rating: 0 | rate:

| |

|

The runs were tested on 780s. Simulations that run under 6 hours on a 780 are labeled as short. | |

| ID: 43332 | Rating: 0 | rate:

| |

|

I am sending both short and long runs in this project as I mentioned. They are split accordingly depending on runtime. | |

| ID: 43333 | Rating: 0 | rate:

| |

|

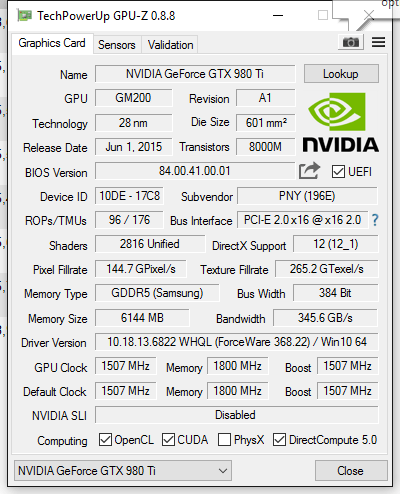

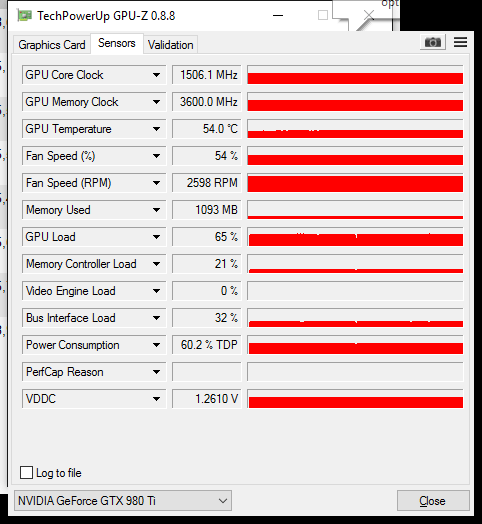

No I am only running one at a time and it has only completed 15.57% after 1hr 11min on a 980ti utilization 60% | |

| ID: 43334 | Rating: 0 | rate:

| |

|

You got one of the edge cases apparently. 2k1a has exactly 6.0 hours projected runtime on my 780 so it got put in the short queue since my condition was >6 hours goes to long. | |

| ID: 43336 | Rating: 0 | rate:

| |
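
The edge case described above comes down to whether the comparison against the cut-off is strict. Here is a minimal sketch, assuming a hypothetical `classify_queue` helper and the 6-hour limit on a GTX 780 quoted in this thread; the project's real preparation script is not shown anywhere in the thread, so this is only an illustration of the rule as described:

```python
# Hypothetical illustration of the short/long split described in the thread.
SHORT_LIMIT_HOURS = 6.0  # cut-off quoted in the thread, measured on a GTX 780


def classify_queue(projected_hours: float, limit: float = SHORT_LIMIT_HOURS) -> str:
    """Return 'long' only when the projection is strictly above the limit."""
    return "long" if projected_hours > limit else "short"


if __name__ == "__main__":
    for hours in (5.9, 6.0, 6.1):
        print(hours, classify_queue(hours))
```

With a strict `>` comparison, a task projected at exactly 6.0 hours lands in the short queue, which matches what happened to 2k1a.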

|

I'm not working from what BOINC estimates but from real elapsed time, and it will take between 7 and 8 hours | |

| ID: 43337 | Rating: 0 | rate:

| |

|

Boinc will estimate completion time based on the GFlops you put against the task, but if previous GFlops were not accurate the estimate will not be accurate. | |

| ID: 43338 | Rating: 0 | rate:

| |

|

On my GTX980Ti's, the former long runs were running about 20.000 seconds, yielding 255.000 points. | |

| ID: 43341 | Rating: 0 | rate:

| |

On my GTX980Ti's, the former long runs were running about 20.000 seconds, yielding 255.000 points. This is what I got: 2jp3R4-SDOERR_opm996-0-1-RND2694_0 11593350 9 May 2016 | 9:19:35 UTC 9 May 2016 | 21:02:28 UTC Completed and validated 40,147.05 39,779.98 205,350.00 Long runs (8-12 hours on fastest card) v8.48 (cuda65) 1cbrR7-SDOERR_opm996-0-1-RND5441_0 11593203 9 May 2016 | 9:19:35 UTC 9 May 2016 | 21:32:36 UTC Completed and validated 41,906.29 41,465.53 232,200.00 Long runs (8-12 hours on fastest card) v8.48 (cuda65) | |

| ID: 43342 | Rating: 0 | rate:

| |

|

Yeah, they're pretty long, my 980ti is looking like it'll take about 20 hours at 70-75% usage. Don't mind the long run, I'm glad to have work to crunch, though I do hope the credit is equivalently good. | |

| ID: 43344 | Rating: 0 | rate:

| |

... I'm glad to have work to crunch me too :-) | |

| ID: 43345 | Rating: 0 | rate:

| |

|

I crunched a WU lasting 18.1 hours for 203.850 credits on my GTX970. | |

| ID: 43347 | Rating: 0 | rate:

| |

I crunched a WU lasting 18.1 hours for 203.850 credits on my GTX970. Similar experience here with 4gbrR2-SDOERR_opm996-0-1-RND1953 3oaxR8-SDOERR_opm996-0-1-RND1378 | |

| ID: 43348 | Rating: 0 | rate:

| |

|

Seems like I might have underestimated the real runtime. We use a script that calculates the projected runtime on my 780s. So it seems it's a bit too optimistic in its estimates :/ Longer WUs (projected time over 18 hours) though should give 4x credits. | |

| ID: 43349 | Rating: 0 | rate:

| |

|

OK, Thanks Stefan | |

| ID: 43351 | Rating: 0 | rate:

| |

OPM996 is classed as a Short Run but using 980ti seems it will take around 7 hours I received several "short" tasks on a machine set run only short tasks. They took 14 hours on a 750Ti. Short tasks in the past only took 4-5 hours on the 750. May I suggest that these be reconsidered as long tasks and not sent to short task only machines. | |

| ID: 43352 | Rating: 0 | rate:

| |

|

I'm computing the WU 2kogR2-SDOERR_opm996-0-1-RND7448 but after 24hrs it is still at 15% (750ti)... Please don't tell me I have to abort it! | |

| ID: 43353 | Rating: 0 | rate:

| |

|

Some of them are pretty big. Had one task take 20 hours. Long tasks usually finish in around 6.5-7 hours, for reference | |

| ID: 43354 | Rating: 0 | rate:

| |

[...]I have 2 WU's (2b5fR8 & 2b5fR3) running, one on each of my GTX970's.[...] With no other CPU WU's running I've recorded ~10% CPU usage for each GTX970 (2) WU while a GTX750 WU at ~6% of my Quad core 3.2GHz Haswell system. (3WU = ~26% total CPU usage) My GPU(s) current (opm996 long WU) estimated total Runtime. Completion rate (based on) 12~24hours of real-time crunching. 2r83R1 (GTX970) = 27hr 45min (3.600% per 1hr @ 70% GPU usage / 1501MHz) 1bakR0 (GTX970) = 23hr 30min (4.320% per 1hr @ 65% / 1501MHz) 1u27R2 (GTX750) = 40hr (2.520% per 1hr @ 80% / 1401MHz) 2I35R5 (GT650m) = 70hr (1.433% per 1hr @ 75% / 790MHz) Newer (Beta) BOINC clients introduced an (accurate) per min or hour progress rate feature - available in advanced view (task properties) commands bar. | |

| ID: 43355 | Rating: 0 | rate:

| |
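
The runtime figures in the post above follow from the hourly progress rate. As a rough sketch (the function names are made up for illustration, and it assumes progress stays linear over the whole task, which these WUs may not do exactly):

```python
def estimated_total_hours(percent_per_hour: float) -> float:
    """Total runtime in hours implied by a constant progress rate."""
    if percent_per_hour <= 0:
        raise ValueError("progress rate must be positive")
    return 100.0 / percent_per_hour


def format_hours(hours: float) -> str:
    """Render fractional hours as 'Hh MMm', rounded to the nearest minute."""
    total_minutes = round(hours * 60)
    return f"{total_minutes // 60}h {total_minutes % 60:02d}m"


if __name__ == "__main__":
    # Progress rates as reported in the post (percent per hour).
    for task, rate in (("2r83R1", 3.600), ("1u27R2", 2.520), ("2I35R5", 1.433)):
        print(task, format_hours(estimated_total_hours(rate)))
```

The figures quoted in the post are rounded, so the output will differ from them by a few minutes.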

Seems like I might have underestimated the real runtime. We use a script that calculates the projected runtime on my 780s. So it seems it's a bit too optimistic in its estimates :/ Longer WUs (projected time over 18 hours) though should give 4x credits. It would be hugely appreciated if you could find a way of hooking up the projections of that script to the <rsc_fpops_est> field of the associated workunits. With the BOINC server version in use here, a single mis-estimated task (I have one which has been running for 29 hours already) can mess up the BOINC client's scheduling - for other projects, as well as this one - for the next couple of weeks. | |

| ID: 43356 | Rating: 0 | rate:

| |
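
To make the suggestion above a little more concrete, here is a rough sketch of how a projected runtime could be turned into a floating-point-operations estimate for `<rsc_fpops_est>`. Everything except the field name is an assumption: the helper names are invented, the reference speed of the test card is a placeholder rather than a measured value, and the real server and client apply further corrections that are not modelled here.

```python
SECONDS_PER_HOUR = 3600.0


def fpops_estimate(projected_hours: float, reference_gflops: float) -> float:
    """Operations estimate: projected runtime times the assumed card speed."""
    return projected_hours * SECONDS_PER_HOUR * reference_gflops * 1e9


def naive_runtime_hours(rsc_fpops_est: float, host_gflops: float) -> float:
    """Simplified view of a runtime estimate: operations divided by speed."""
    return rsc_fpops_est / (host_gflops * 1e9) / SECONDS_PER_HOUR


if __name__ == "__main__":
    REFERENCE_GFLOPS = 1000.0  # placeholder speed for the test card, not measured
    est = fpops_estimate(18.0, REFERENCE_GFLOPS)
    print(f"rsc_fpops_est = {est:.3e}")
    print(f"same-speed host: {naive_runtime_hours(est, REFERENCE_GFLOPS):.1f} h")
```

The point of the sketch is only that a per-workunit estimate scales with the script's projection, so a 29-hour task would no longer be announced to the client with the same size as a 6-hour one.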

|

I have noticed that the last batch of WUs are worth a lot less credit per time spent crunching, but I also wanted to report that we might have some bad WUs going out. I spent quite a lot of time on this workunit, and noticed that the only returned tasks for this WU is "Error while computing." The workunit in question: https://gpugrid.net/workunit.php?wuid=11593942 | |

| ID: 43357 | Rating: 0 | rate:

| |

I have noticed that the last batch of WUs are worth a lot less credit per time spent crunching, but I also wanted to report that we might have some bad WUs going out. I spent quite a lot of time on this workunit, and noticed that the only returned tasks for this WU is "Error while computing." The workunit in question: https://gpugrid.net/workunit.php?wuid=11593942 You've been crashing tasks for a couple weeks, including the older 'BestUmbrella_chalcone' ones. See below. You need to lower your GPU overclock, if you want to be stable with GPUGrid tasks! Downclock it until you never see "The simulation has become unstable." https://gpugrid.net/results.php?hostid=319330 https://gpugrid.net/result.php?resultid=15096591 2b6oR6-SDOERR_opm996-0-1-RND0942_1 10 May 2016 | 7:15:45 UTC https://gpugrid.net/result.php?resultid=15086372 28 Apr 2016 | 11:34:16 UTC e45s20_e17s22p1f138-GERARD_CXCL12_BestUmbrella_chalcone3441-0-1-RND8729_0 https://gpugrid.net/result.php?resultid=15084884 27 Apr 2016 | 23:52:34 UTC e44s17_e43s20p1f173-GERARD_CXCL12_BestUmbrella_chalcone2212-0-1-RND5557_0 https://gpugrid.net/result.php?resultid=15084715 26 Apr 2016 | 23:30:01 UTC e43s16_e31s21p1f321-GERARD_CXCL12_BestUmbrella_chalcone4131-0-1-RND6256_0 https://gpugrid.net/result.php?resultid=15084712 26 Apr 2016 | 23:22:44 UTC e43s13_e20s7p1f45-GERARD_CXCL12_BestUmbrella_chalcone4131-0-1-RND8139_0 https://gpugrid.net/result.php?resultid=15082560 25 Apr 2016 | 18:49:58 UTC e42s11_e31s18p1f391-GERARD_CXCL12_BestUmbrella_chalcone2731-0-1-RND8654_0 | |

| ID: 43358 | Rating: 0 | rate:

| |

I have noticed that the last batch of WUs are worth a lot less credit per time spent crunching, but I also wanted to report that we might have some bad WUs going out. I spent quite a lot of time on this workunit, and noticed that the only returned tasks for this WU is "Error while computing." The workunit in question: https://gpugrid.net/workunit.php?wuid=11593942 Yeah I was crashing for weeks until I found what I thought were stable clocks. I ran a few WUs without crashing and then GPUgrid was out of work temporarily. From that point I was crunching asteroids/milkyway without any error. I'll lower my GPU clocks or up the voltage and report back. Thanks! | |

| ID: 43359 | Rating: 0 | rate:

| |

|

| |

| ID: 43360 | Rating: 0 | rate:

| |

|

For future reference, I have a couple of suggestions: | |

| ID: 43361 | Rating: 0 | rate:

| |

For future reference, I have a couple of suggestions: Generally that's the case but ultimately this is a different type of research and it simply requires that some work be performed on the CPU (you can't do it on the GPU). The WUs are getting longer, GPU s are getting faster, but CPU speed is stagnant. Something got to give, and with the Pascal cards coming out soon, you have put out a new version anyway. Over the years WU's have remained about the same length overall. Occasionally there are extra-long tasks but such batches are rare. GPU's are getting faster, more adapt, the number of shaders (cuda cores here) is increasing and CUDA development continues. The problem isn't just CPU frequency (though an AMD Athlon 64 X2 5000+ is going to struggle a bit), WDDM is an 11%+ bottleneck (increasing with GPU performance) and when the GPUGrid app needs the CPU to perform a calculation it's down to the PCIE bridge and perhaps to some extent not being multi-threaded on the CPU. Note that it's the same ACEMD app (not updated since Jan) just a different batch of tasks (batches vary depending on the research type). My GPU usage on these latest SDOERR WUs is between 70% to 80%, compared to 85% to 95% for the GERARD BestUmbrella units. I don't think this is reinventing the wheel, just updating it. This does have to be done sooner or later. The Volta cards are coming out in only a few short years. I'm seeing ~75% GPU usage on my 970's too. On Linux and XP I would expect it to be around 90%. I've increased my Memory to 3505MHz to reduce the MCU (~41%) @1345MHz [power 85%], ~37% @1253MHz. I've watched the GPU load and it varies continuously with these WU's, as does the Power. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43362 | Rating: 0 | rate:

| |

|

Current (Opm996 long) batch are 10 million step simulations with a large "nAtom" variation (30k <<>> 90k). | |

| ID: 43364 | Rating: 0 | rate:

| |

|

4n6hR2-SDOERR_opm996-0-1-RND7021_0 100.440 Natoms 16h 36m 46s (59.806s) 903.900 credits (GTX980Ti) | |

| ID: 43365 | Rating: 0 | rate:

| |

|

If you have a small GPU, it's likely you will not finish some of these tasks inside 5 days which 'I think' is still the cut-off for credit [correct me if I'm wrong]! Suggest people with small cards look at the progression % and time taken in Boinc Manager and from that work out if the tasks will finish inside 5 days or not. If it's going to take over 120h consider aborting. | |

| ID: 43366 | Rating: 0 | rate:

| |
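
The check suggested above can be sketched in a few lines. This assumes the 5-day (120 h) cut-off mentioned in the post, linear progress, and that the elapsed time is counted from when the task was received (so queue time is included); the function names are only illustrative.

```python
DEADLINE_HOURS = 120.0  # 5-day cut-off as assumed in the post above


def projected_total_hours(progress_percent: float, elapsed_hours: float) -> float:
    """Extrapolate total runtime from the progress made so far."""
    if not 0 < progress_percent <= 100:
        raise ValueError("progress must be in (0, 100]")
    return elapsed_hours * 100.0 / progress_percent


def will_finish_in_time(progress_percent: float, elapsed_hours: float,
                        deadline_hours: float = DEADLINE_HOURS) -> bool:
    """True if the extrapolated runtime fits inside the deadline."""
    return projected_total_hours(progress_percent, elapsed_hours) <= deadline_hours


if __name__ == "__main__":
    # Figures reported elsewhere in this thread.
    print(will_finish_in_time(15, 24))  # 750 Ti at 15% after 24 h
    print(will_finish_in_time(44, 13))  # 44% after 13 h
```

A task that fails this check is a candidate for aborting, as the post suggests.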

|

This one looks like it's going to take 30hrs :) 44% after 13 hours. | |

| ID: 43367 | Rating: 0 | rate:

| |

|

My last 2 valid tasks (970's) took around 31h also. | |

| ID: 43368 | Rating: 0 | rate:

| |

though should give 4x credits. where is my 4x credit? I see only at least twice as much running time with less credit.. ____________ Regards, Josef  | |

| ID: 43369 | Rating: 0 | rate:

| |

For future reference, I have a couple of suggestions: Fine, they underestimated the runtimes, but I stand by my statement for super long runs category. The future WU application version should be made less CPU dependent. As for making the WUs less CPU dependent, there are somethings that are easy to move to GPU calculation, some that are difficult, maybe not impossible, but definitely impractical. Okay, I get this point, and I am not asking for the moon, but something more must be done to avoid or minimize this bottleneck, from a programming standpoint. That's all I'm saying. | |

| ID: 43370 | Rating: 0 | rate:

| |

|

@Retvari @MrJo I mentioned the credit algorithm in this post https://www.gpugrid.net/forum_thread.php?id=4299&nowrap=true#43328 | |

| ID: 43372 | Rating: 0 | rate:

| |

|

Don't worry about that Stefan it's a curve ball that you've thrown us that's all. "I expect it to be over by the end of the week" I like your optimism given the users who hold on to a WU for 5 days and never return and those that continually error even after long run times. Surely there must be a way to suspend their ability to get WU's completely until they Log In. They seriously impact our ability to complete a batch in good time. | |

| ID: 43373 | Rating: 0 | rate:

| |

I like your optimism given the users who hold on to a WU for 5 days and never return and those that continually error even after long run times. 5 days does sound optimistic. Maybe you can ensure resends go to top cards with a low failure rate? Would be useful if the likes of this batch was better controlled; only sent to known good cards - see the RAC's of the 'participants' this WU was sent to: https://www.gpugrid.net/workunit.php?wuid=11595345 I suspect that none of these cards could ever return extra long tasks within 5days (not enough GFlops) even if properly setup: NVIDIA GeForce GT 520M (1024MB) driver: 358.87 [too slow and not enough RAM] NVIDIA GeForce GT 640 (2048MB) driver: 340.52 [too slow, only cuda6.0 driver] NVIDIA Quadro K4000 (3072MB) driver: 340.62 [too slow, only cuda6.0 driver] NVIDIA GeForce GTX 560 Ti (2048MB) driver: 365.10 [best chance to finish but runs too hot, all tasks fail on this card when it hits ~85C, needs to cool it properly {prioritise temperature}] Like the idea that WU's don't get sent to systems with high error rates until they log in (and are directed to a recommended settings page). ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43374 | Rating: 0 | rate:

| |

|

Hi SK, | |

| ID: 43375 | Rating: 0 | rate:

| |

|

Actually there is a way to send WUs only to top users by increasing the job priority over 1000. I thought I had it at max priority but I just found out that it's only set to 'high' which is 600. | |

| ID: 43376 | Rating: 0 | rate:

| |

|

Thanks Stefan, | |

| ID: 43377 | Rating: 0 | rate:

| |

Actually there is a way to send WUs only to top users by increasing the job priority over 1000. I thought I had it at max priority but I just found out that it's only set to 'high' which is 600. Priority usually does not cause exclusion, only lower probability. Does priority over 1000 really exclude the less reliable hosts from task scheduling when there are no lower priority tasks in the queue? I think it is true only when there are lower priority tasks also queued to give them to less reliable hosts. Is it a documented feature? | |

| ID: 43378 | Rating: 0 | rate:

| |

Hi SK, Exactly, send a WU to 6 clients in succession that don't run it or return it for 5days and after a month the WU still isn't complete. IMO if client systems report a cache of over 1day they shouldn't get work from this project. I highlighted a best case scenario (where several systems were involved); the WU runs soon after receipt, fails immediately, gets reported quickly and can be sent out again. Even in this situation it went to 4 clients that failed to complete the WU. To send and receive replies from 4 systems took 7h. My second point was that the WU never really had a chance on any of those systems. They weren't capable of running these tasks (not enough RAM, too slow, oldish driver [slower or more buggy], too hot, or just not powerful enough to return an extra long WU inside 5days). ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43379 | Rating: 0 | rate:

| |

I take your point. In the 7 hours that WU was being bounced from bad client to bad client a fast card would have completed it. Totally agree. In fact Retvari could have completed it and taken an hour out for lunch. HaHa | |

| ID: 43380 | Rating: 0 | rate:

| |

I understand the runtime was underestimated The principle in the law "In dubio pro reo" should also apply here. When in doubt, more points. I only sent WUs that were projected to run under 24 hours on a 780 Not everyone has a 780. I run several 970's, 770's, 760's, 680's and 950's (don't use my 750 any longer). A WU should be such that it can be completed by a midrange-card within 24 hours. A 680 or a 770 is still a good card. Alternatively one could make a fundraising campaign so poor crunchers get some 980Ti's ;-) ____________ Regards, Josef | |

| ID: 43381 | Rating: 0 | rate:

| |

|

https://boinc.berkeley.edu/trac/wiki/ProjectOptions Accelerating retries

The goal of this mechanism is to send timeout-generated retries to hosts that are likely to finish them fast.

Here's how it works:

• Hosts are deemed "reliable" (a slight misnomer) if they satisfy turnaround time and error rate criteria.

• A job instance is deemed "need-reliable" if its priority is above a threshold.

• The scheduler tries to send need-reliable jobs to reliable hosts.

When it does, it reduces the delay bound of the job.

• When job replicas are created in response to errors or timeouts,

their priority is raised relative to the job's base priority.

The configurable parameters are:

<reliable_on_priority>X</reliable_on_priority>

Results with priority at least reliable_on_priority are treated as "need-reliable".

They'll be sent preferentially to reliable hosts.

<reliable_max_avg_turnaround>secs</reliable_max_avg_turnaround>

Hosts whose average turnaround is at most reliable_max_avg_turnaround

and that have at least 10 consecutive valid results are considered 'reliable'.

Make sure you set this low enough that a significant fraction (e.g. 25%) of your hosts qualify.

<reliable_reduced_delay_bound>X</reliable_reduced_delay_bound>

When a need-reliable result is sent to a reliable host,

multiply the delay bound by reliable_reduced_delay_bound (typically 0.5 or so).

<reliable_priority_on_over>X</reliable_priority_on_over>

<reliable_priority_on_over_except_error>X</reliable_priority_on_over_except_error>

If reliable_priority_on_over is nonzero,

increase the priority of duplicate jobs by that amount over the job's base priority.

Otherwise, if reliable_priority_on_over_except_error is nonzero,

increase the priority of duplicates caused by timeout (not error) by that amount.

(Typically only one of these is nonzero, and is equal to reliable_on_priority.)

NOTE: this mechanism can be used to preferentially send ANY job, not just retries,

to fast/reliable hosts.

To do so, set the workunit's priority to reliable_on_priority or greater.

____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43382 | Rating: 0 | rate:

| |
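
The documentation excerpt quoted above can be paraphrased as a small decision sketch. This is not the BOINC scheduler code: the `Host` and `SchedulerConfig` classes and the function names are invented for illustration, the numeric values are placeholders, and only the three option names and the "10 consecutive valid results" rule come from the quoted text.

```python
from dataclasses import dataclass


@dataclass
class Host:
    avg_turnaround_s: float   # average turnaround time in seconds
    consecutive_valid: int    # consecutive valid results returned


@dataclass
class SchedulerConfig:
    reliable_on_priority: int
    reliable_max_avg_turnaround: float
    reliable_reduced_delay_bound: float


def is_reliable(host: Host, cfg: SchedulerConfig) -> bool:
    """Host meets both the turnaround and the valid-results criteria."""
    return (host.avg_turnaround_s <= cfg.reliable_max_avg_turnaround
            and host.consecutive_valid >= 10)


def needs_reliable(priority: int, cfg: SchedulerConfig) -> bool:
    """Job priority is at least the configured threshold."""
    return priority >= cfg.reliable_on_priority


def delay_bound_for(priority: int, host: Host, base_delay_s: float,
                    cfg: SchedulerConfig) -> float:
    """Shorten the deadline when a need-reliable job goes to a reliable host."""
    if needs_reliable(priority, cfg) and is_reliable(host, cfg):
        return base_delay_s * cfg.reliable_reduced_delay_bound
    return base_delay_s


if __name__ == "__main__":
    cfg = SchedulerConfig(1000, 86400.0, 0.5)  # placeholder values
    fast = Host(avg_turnaround_s=50000.0, consecutive_valid=12)
    print(delay_bound_for(1000, fast, 432000.0, cfg))
```

Note that, as the quoted text says, the scheduler only tries to send need-reliable jobs to reliable hosts; the sketch does not model what happens when no reliable host asks for work.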

I understand the runtime was underestimated When there is WU variation in runtime fixing credit based on anticipated runtime is always going to be hit and miss, and on a task by task basis. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43383 | Rating: 0 | rate:

| |

|

It's not only low end cards | |

| ID: 43384 | Rating: 0 | rate:

| |

It's not only low end cards https://www.gpugrid.net/workunit.php?wuid=11594288 | |

| ID: 43385 | Rating: 0 | rate:

| |

|

https://www.gpugrid.net/workunit.php?wuid=11595159 https://www.gpugrid.net/workunit.php?wuid=11594288 287647 NVIDIA GeForce GT 520 (1023MB) driver: 352.63 201720 NVIDIA Tesla K20m (4095MB) driver: 340.29 (cuda6.0 - might be an issue) 125384 Error while downloading, also NVIDIA GeForce GT 640 (1024MB) driver: 361.91 321762 looks like a GTX980Ti but actually tried to run on the 2nd card, a GTX560Ti which only had 1GB GDDR5 54461 another 560Ti with 1GB GDDR5 329196 NVIDIA GeForce GTX 550 Ti (1023MB) driver: 361.42 Looks like 4 out of 6 failures were due to the cards only having 1GB GDDR, one failed to download but only had 1GB GDDR anyway, the other might be due to using an older driver with these WU's. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43386 | Rating: 0 | rate:

| |

|

Would it make sense if a moderator or staff contacted these repeat offenders with a PM, asking them if they would consider detaching or try a less strenuous project? | |

| ID: 43389 | Rating: 0 | rate:

| |

|

In theory the server can send messages to hosts following repeated failures, if these are logged: | |

| ID: 43390 | Rating: 0 | rate:

| |

[...] The problem with equilibrations is that we cannot split them into multiple steps like the normal simulations so we just have to push through this batch and then we are done. I expect it to be over by the end of the week. No more simulations are being sent out a few days now so only the ones that cancel or fail will be resent automatically. Will there be any future OPM batches available or is this the end of OPM? I've enjoyed crunching OPM996 (non-fixed) credit WU. The unpredictable runtime simulations (interchangeable Natom batch) an exciting type of WU. Variable Natom for each task creates an allure of mystery. If viable - OPM a choice WU for Summer time crunching from it's lower power requirement compared to some other WU's. (Umbrella type WU would also help contend with the summer heat.) 100.440 Natoms 16h 36m 46s (59.806s) 903.900 credits (GTX980Ti) Is 903,900 the most credit ever given to an ACEMD WU? | |

| ID: 43391 | Rating: 0 | rate:

| |

|

Or just deny them new tasks until they log in, as per your previous idea. This would solve the problem for the most part. Just can't understand the project not adopting this approach as they would like WUs returned ASAP and users don't want to be sat idle while these hosts hold us up | |

| ID: 43392 | Rating: 0 | rate:

| |

I think it is so far.100.440 Natoms 16h 36m 46s (59.806s) 903.900 credits (GTX980Ti)Is 903,900 the most credit ever given to an ACEMD WU? There is a larger model which contains 101.237 atoms, and this could generate 911,100 credits with the +50% bonus. Unfortunately one of my slower (GTX980) host received one such task, and it has been processed under 90,589 seconds (25h 9m 49s) (plus the time it spent queued), so it has earned "only" +25% bonus, resulting in "only" 759,250 credits. | |

| ID: 43393 | Rating: 0 | rate:

| |
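
The bonus arithmetic in the post above can be checked with a short sketch. The 50% bonus for returning a task within 24 hours is stated in this thread; the 48-hour limit for the 25% bonus is an assumption here, as is the function name. The base credit of 607,400 is simply 911,100 divided by 1.5.

```python
def credit_with_bonus(base_credit: float, turnaround_hours: float) -> float:
    """Apply the quick-return bonus to a task's base credit."""
    if turnaround_hours < 24:
        factor = 1.5    # +50% bonus, as described in the thread
    elif turnaround_hours < 48:
        factor = 1.25   # +25% bonus; the 48 h limit is an assumption
    else:
        factor = 1.0    # no bonus
    return base_credit * factor


if __name__ == "__main__":
    base = 911_100 / 1.5  # base credit implied by the figures in the post
    print(credit_with_bonus(base, 20.0))   # returned within 24 h
    print(credit_with_bonus(base, 25.2))   # returned after roughly 25 h
```

Turnaround here means the time between receiving and reporting the task, so time spent queued counts as well, as the post notes.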

I think it is so far.100.440 Natoms 16h 36m 46s (59.806s) 903.900 credits (GTX980Ti)Is 903,900 the most credit ever given to an ACEMD WU? I got that beat with 113536 Natoms. It took my computer over 30 hours to complete, so I lost the 50% bonus. I actually got 2 of them. See links below: https://www.gpugrid.net/result.php?resultid=15092125 https://www.gpugrid.net/result.php?resultid=15092126 Anyone have anything bigger? This was actually fun. Let's do it again, but put it into the super long category. | |

| ID: 43394 | Rating: 0 | rate:

| |

I got that beat with 113536 Natoms. It took my computer over 30 hours to complete, so I lost the 50% bonus. Wow, that would get 1.021.800 credits with the +50% bonus. I hope that one of my GTX980Ti hosts will receive such a workunit :) | |

| ID: 43395 | Rating: 0 | rate:

| |

|

717000 credits (with 25% bonus) was the highest I received. Would have been 860700 if returned inside 24h, but would require a bigger card. | |

| ID: 43396 | Rating: 0 | rate:

| |

|

Mine's bigger, 117122 Natoms took around 24 hours, 878,500.00 credits with the 25% bonus. | |

| ID: 43399 | Rating: 0 | rate:

| |

717000 credits (with 25% bonus) was the highest I received. Would have been 860700 if returned inside 24h, but would require a bigger card. Have completed 14 of the OPM, including 6 of the 91848 Natoms size with credit of only 275,500 each (no bonuses). They took well over twice the time of earlier WUs that yielded >200,000 credits. Haven't seen any of the huge credit variety being discussed. | |

| ID: 43403 | Rating: 0 | rate:

| |

Mine's bigger, 117122 Natoms took around 24 hours, 878,500.00 credits with the 25% bonus. That would get 1.054.200 credits if returned under 24h. As I see these workunits are running out, only those remain which are processed by slow hosts. We may receive some when the 5 days deadline expires. | |

| ID: 43404 | Rating: 0 | rate:

| |

Might have got the lowest credit though ;pYou're not even close :) 2k72R5-SDOERR_opm996-0-1-RND1700_0 137.850 credits (including 50% bonus) 30637 atoms 3.017 ns/day 10M steps 2lbgR1-SDOERR_opm996-0-1-RND1419_0 130.350 credits (including 50% bonus) 28956 atoms 2.002 ns/day 10M steps 2lbgR0-SDOERR_opm996-0-1-RND9460_0 130.350 credits (including 50% bonus) 28956 atoms 2.027 ns/day 10M steps 2kbvR0-SDOERR_opm996-0-1-RND1815_1 129.900 credits (including 50% bonus) 28872 Natoms 1.937 ns/day 10M steps 1vf6R5-SDOERR_opm998-0-1-RND7439_0 122.550 credits (including 50% bonus) 54487 Natoms 5.002 ns/day 5M steps 1kqwR7-SDOERR_opm998-0-1-RND9523_1 106.650 credits (including 50% bonus) 47409 atoms 4.533 ns/day 5M steps | |

| ID: 43405 | Rating: 0 | rate:

| |

My GPU(s) current (opm996 long WU) estimated total Runtime. Completion rate (based on) 12~24hours of real-time crunching. The OPM WUs spelled the death knell for my last 2 super-clocked 650 Ti GPUs. They weren't too bad with the earlier WUs but were ridiculously slow with the OPMs. Probably due to having only 1GB of memory. Anyway, pulled them out of the machines. Down to a flock (perhaps: gaggle, pack, herd, swarm, pod?) of 2GB 750 Ti cards and a 670. Also noticed that machines with only 1 NV GPU processed the OPMs faster. This wasn't the case for earlier WUs. | |

| ID: 43406 | Rating: 0 | rate:

| |

My GPU(s) current (opm996 long WU) estimated total Runtime. Completion rate (based on) 12~24hours of real-time crunching. It's there in 7.6.22 (non-beta). For the GERARD_FXCXCL tasks it's about 8% on my 970's. The OPM WUs spelled the death knell for my last 2 super-clocked 650 Ti GPUs. They weren't too bad with the earlier WUs but were ridiculously slow with the OPMs. Probably due to having only 1GB of memory. Anyway, pulled them out of the machines. Down to a flock (perhaps: gaggle, pack, herd, swarm, pod?) of 2GB 750 Ti cards and a 670. Clutch, brood, rookery, skulk, crash, congregation, sleuth, school, shoal, army, quiver, gang, pride, bank, bouquet. Also noticed that machines with only 1 NV GPU processed the OPMs faster. This wasn't the case for earlier WUs. Probably due to the increased bus and CPU usage. I tried to alleviate this by freeing up more CPU and increasing the GDDR5 freq., but the GPU clocks could run higher too, due to slightly less GPU and power usage. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43409 | Rating: 0 | rate:

| |

I was lucky to get one such workunit on a GTX980Ti host.I got that beat with 113536 Natoms. It took my computer over 30 hours to complete, so I lost the 50% bonus.Wow, that would get 1.021.800 credits with the +50% bonus. I hope that one of my GTX980Ti hosts will receive such a workunit :) It's 64.5% processed under 12 hours, so it will take ~18h 30m to complete. I keep my fingers crossed :) We'll see the result in the morning. | |

| ID: 43415 | Rating: 0 | rate:

| |

My GPU(s) current (opm996 long WU) estimated total Runtime. Completion rate (based on) 12~24hours of real-time crunching. Waiting on (2) formerly Timed out OPM to finish up on my 970's (23hr & 25hr estimated runtime). OPM WU was sent after hot day then cool evening ocean breeze -97 error GERARD_FXCXCL (50C GTX970) bent the knee for May sun. I was lucky to get one such workunit. 960MB & 1020MB GDDR5 for each of my current OPM (60~80k Natoms guess). | |

| ID: 43417 | Rating: 0 | rate:

| |

I'm happy to report that the workunit finished fine:I was lucky to get one such workunit on a GTX980Ti host.I got that beat with 113536 Natoms. It took my computer over 30 hours to complete, so I lost the 50% bonus.Wow, that would get 1.021.800 credits with the +50% bonus. I hope that one of my GTX980Ti hosts will receive such a workunit :) 1sujR0-SDOERR_opm996-0-1-RND0758_1 1.021.800 credits (including 50% bonus), 67.146 sec (18h 39m 6s), 113536 atoms 6.707 ns/day | |

| ID: 43419 | Rating: 0 | rate:

| |

1.021.800 credits ... you lucky one :-) | |

| ID: 43421 | Rating: 0 | rate:

| |

Waiting on (2) formerly Timed out OPM to finish up on my 970's (23hr & 25hr estimated runtime). OPM WU was sent after hot day then cool evening ocean breeze -97 error GERARD_FXCXCL (50C GTX970) bent the knee for May sun.Your GPUs are too hot. Your GT 630 reaches 80°C (176°F), while in your laptop your GT650M reaches 93°C (199°F) which is crazy. Your host with 4 GPUs has two GTX970s, a GTX 750 and a GT630. There's no point in risking the stability of the simulations running on your fast GPUs by putting low-end GPUs in the same host. Packing 4 GPU to a single PC for 24/7 crunching requires water cooling, (or PCIe riser cards to make breathing space between the cards). Crunching on laptops is not recommended. But if you do, you should place your laptop on its side while not in use, to make the air outlet facing up and the bottom of the laptop vertical (so the fan could take more air in). You should also regularly clean the fan & the fins with compressed air. | |

| ID: 43422 | Rating: 0 | rate:

| |

|

Finally received an opm task that should finish inside 24h, 4fkdR3-SDOERR_opm996-0-1-RND2483_1 | |

| ID: 43423 | Rating: 0 | rate:

| |

Waiting on (2) formerly Timed out OPM to finish up on my 970's (23hr & 25hr estimated runtime). OPM WU was sent after hot day then cool evening ocean breeze -97 error GERARD_FXCXCL (50C GTX970) bent the knee for May sun.Your GPUs are too hot. Your GT 630 reaches 80°C (176°F), while in your laptop your GT650M reaches 93°C (199°F) which is crazy. Heed the good advice! Note that 93C is the GPU's temperature cut-off point. The GPU self-throttles to protect itself because it's dangerously hot. It doesn't have a cut-off point to protect the rest of the system and GPU's are Not designed to run at high temps continuously. Use temperature and fan controlling apps such as NVIDIA Inspector and MSI Afterburner to protect your hardware. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43424 | Rating: 0 | rate:

| |

I'm happy to report that the workunit finished fine:I was lucky to get one such workunit on a GTX980Ti host.I got that beat with 113536 Natoms. It took my computer over 30 hours to complete, so I lost the 50% bonus.Wow, that would get 1.021.800 credits with the +50% bonus. I hope that one of my GTX980Ti hosts will receive such a workunit :) I also got another big one: 3pp2R9-SDOERR_opm996-0-1-RND0623_1 Run time 80,841.79 CPU time 80,414.03 120040 Natoms Credit 1,080,300.00 https://www.gpugrid.net/result.php?resultid=15102827 This was done on my windows 10 machine in under 24 hours with 50% bonus while working on this unit on the other card: 3um7R7-SDOERR_opm996-0-1-RND5030_1 Run time 67,202.94 CPU time 66,809.66 91343 Natoms Credit 822,000.00 https://www.gpugrid.net/result.php?resultid=15102779 Though, this did cost me my number 1 position in the performance tab. | |

| ID: 43425 | Rating: 0 | rate:

| |

Though, this did cost me my number 1 position in the performance tab. Never mind! :D | |

| ID: 43426 | Rating: 0 | rate:

| |

|

@Retvari | |

| ID: 43428 | Rating: 0 | rate:

| |

|

Top Average Performers is a very misleading and ill-conceived chart because it is based on average performance of a user and ALL his hosts rather than a host in particular. Got one of the loser files again: https://www.gpugrid.net/result.php?resultid=15103135. Only 171,150 Credits for a runtime of 64,394.64. There are no good or bad ones, there are just some you get more or less credit for. | |

| ID: 43429 | Rating: 0 | rate:

| |

@Retvari The Natom amount is only known after a WU validates cleanly (in the <stderr> file). One way to gauge Natom size is the GPU memory usage. The really big models (credit and Natom seem to confirm that some OPM are the largest ACEMD ever crunched) use nearly 1.5GB, while smaller models use 1.2GB or less. Long OPM WU Natom (29k to 120k) varies to the point where the cruncher doesn't really know what to expect for credit. (I like this new feature since no credit amount is fixed.) Waiting on (2) formerly Timed out OPM to finish up on my 970's (23hr & 25hr estimated runtime). OPM WU was sent after hot day then cool evening ocean breeze -97 error GERARD_FXCXCL (50C GTX970) bent the knee for May sun. Your GPUs are too hot. Your GT 630 reaches 80°C (176°F), while in your laptop your GT650M reaches 93°C (199°F) which is crazy. Tending to the GPU advice - I will reconfigure. I've found a WinXP Home Edition ULCPC key plus its SATA1 hard drive - will a ULCPC key copied onto a USB work with a desktop system? I also have a USB drive with Linux Debian (Tails 2.3) as well as Parrot 3.0 that I could set up for a Win8.1 dual-boot. Though the grapevine mentions that graphics card performance is non-existent compared to mainline 4.* Linux. I'd really like to lose the WDDM choke point so my future Pascal cards are as efficient as possible. | |

| ID: 43430 | Rating: 0 | rate:

| |

|

I got one of these long runs on my notebook with a GT740M. As it was slow, I stopped all other CPU projects; it was still slow. After about 60 - 70 hours it was still at about 50%. Anyway, it ended up with errors and I'm not getting any long runs anymore. It seems they won't finish on time... | |

| ID: 43432 | Rating: 0 | rate:

| |

|

both of the below WUs crunched with a GTX980Ti: | |

| ID: 43433 | Rating: 0 | rate:

| |

|

GERARD_FXCXCL12R is a typical work unit in terms of credits awarded. | |

| ID: 43434 | Rating: 0 | rate:

| |

By the way: Does anybody know what happened to Stoneageman? He has been crunching Einstein@home for some time now. He is ranked #8 regarding the total credits earned and #4 regarding RAC at the moment. | |

| ID: 43435 | Rating: 0 | rate:

| |

The Natom amount only known after a WU validates cleanly in (<stderr> file). The number of atoms of a running task can be found in the project's folder, in a file named after the task with _0 appended. Though it has no .txt extension, this is a plain text file, so if you open it with Notepad you will find a line (the 5th) which contains this number: # Topology reports 32227 atoms | |
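For anyone who would rather not open the file by hand, here is a minimal sketch of pulling the atom count out of that text. It assumes the line looks exactly like the one quoted above (`# Topology reports N atoms`); the function names and the sample text are illustrative only and are not part of BOINC or ACEMD.

```python
import re
from typing import Optional

# Pattern for the line quoted in the post above (assumed format).
TOPOLOGY_LINE = re.compile(r"#\s*Topology reports\s+(\d+)\s+atoms")


def atom_count_from_text(text: str) -> Optional[int]:
    """Return the atom count found in the task's output text, or None."""
    match = TOPOLOGY_LINE.search(text)
    return int(match.group(1)) if match else None


def atom_count_from_file(path: str) -> Optional[int]:
    """Read the extension-less '<task name>_0' file as plain text and parse it."""
    with open(path, "r", encoding="utf-8", errors="replace") as handle:
        return atom_count_from_text(handle.read())


# Hypothetical sample: only the 'Topology reports' line is taken from the post.
sample = "# some header line\n# Topology reports 32227 atoms\n"
print(atom_count_from_text(sample))
```

Pass the full path of the `_0` file in the project's folder to `atom_count_from_file`; the search does not depend on the line being the 5th one.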

| ID: 43436 | Rating: 0 | rate:

| |

He is crunching Einstein@home for some time now. The Natom amount only known after a WU validates cleanly in (<stderr> file) The number of atoms of a running task can be found in the project's folder, in a file named as the task plus a _0 attached to the end. Thank you for the explanation ____________ Regards, Josef | |

| ID: 43438 | Rating: 0 | rate:

| |

Another way to look at it is that you are doing cutting edge theoretical/proof of concept science, never done before - it's bumpy. I'll look at it from this angle. ;-) ____________ Regards, Josef  | |

| ID: 43439 | Rating: 0 | rate:

| |

|

This one took over 5 days to get to me https://www.gpugrid.net/workunit.php?wuid=11595181 | |

| ID: 43440 | Rating: 0 | rate:

| |

|

Ah you guys actually reminded me of the obvious fact that the credit calculations might be off in respect to the runtime if the system does not fit into GPU memory. Afaik if the system does not fully fit in the GPU (which might happen with quite a few of the OPM systems) it will simulate quite a bit slower. | |

| ID: 43441 | Rating: 0 | rate:

| |

|

I expect the problem was predominantly the varying number of atoms - the more atoms the longer the runtime. You would have needed to factor the atom count variable into the credit model for it to work perfectly. As any subsequent runs will likely have fixed atom counts (but varying per batch) I expect they can be calibrated as normal. If further primer runs are needed it would be good to factor the atom count into the credits. | |

| ID: 43442 | Rating: 0 | rate:

| |

I expect the problem was predominantly the varying number of atoms - the more atoms the longer the runtime. You would have needed to factor the atom count variable into the credit model for it to work perfectly. As any subsequent runs will likely have fixed atom counts (but varying per batch) I expect they can be calibrated as normal. If further primer runs are needed it would be good to factor the atom count into the credits. More atoms also mean a higher GPU usage. I am currently crunching a WU with 107,436 atoms. My GPU usage is 83%, compared to 71% for the low-atom WUs in this batch; this is on a Windows 10 computer with WDDM lag. My current GPU memory usage is 1692 MB. By comparison, the GERARD_FXCXCL WU that I am running concurrently on the other card in this machine shows 80% GPU usage and 514 MB GPU memory usage with 31,718 atoms. The power usage is the same 75% for each WU, each running on a 980Ti card. | |

| ID: 43443 | Rating: 0 | rate:

| |

717000 credits (with 25% bonus) was the highest I received. Would have been 860700 if returned inside 24h, but would require a bigger card. The OPMs were hopeless on all but the fastest cards. Even the Gerards lately seem to be sized to cut out the large base of super-clocked 750 Ti cards at least on the dominant WDDM based machines (the 750 Tis are still some of the most efficient GPUs that NV has ever produced). In the meantime file sizes have increased and much time is used just in the upload process. I wonder just how important it is to keep the bonus deadlines so tight considering the larger file sizes and the fact that the admins don't even seem to be able to follow up on the WUs we're crunching by keeping new ones in the queues. It wasn't long ago that the WU times doubled, not sure why. Seems a few are gaining a bit of speed by running XP. Is that safe, considering the lack of support from MS? I've also been wanting to try running a Linux image (perhaps even from USB), but the image here hasn't been updated in years. Even sent one of the users a new GPU so he could work on a new Linux image for GPUGrid but nothing ever came of it. Any of the Linux experts up to this job? | |

| ID: 43449 | Rating: 0 | rate:

| |

|

Most of the issues are due to lack of personnel at GPUGrid. The research is mostly performed by the research students and several have just finished. | |

| ID: 43450 | Rating: 0 | rate:

| |

Most of the issues are due to lack of personnel at GPUGrid. The research is mostly performed by the research students and several have just finished. Thanks SK. Hope that you can get the Linux info updated. It would be much appreciated. I'm leery about XP at this point. Please keep us posted. I've been doing a little research into the 1 and 2 day bonus deadlines mostly by looking at a lot of different hosts. It's interesting. By moving WUs just past the 1 day deadline for a large number of GPUs, the work return may actually be getting slower. The users with the very fast GPUs generally cache as many as allowed and return times end up being close to 1 day anyway. On the other hand for instance most of my GPUs are the factory OCed 750 Ti (very popular on this project). When they were making the 1 day deadline, I set them as the only NV project and at 0 project priority. The new WU would be fetched when the old WU was returned. Zero lag. Now since I can't quite make the 1 day cutoff anyway, I set the queue for 1/2 day. Thus the turn around time is much slower (but still well inside the 2 day limit) and I actually get significantly more credit (especially when WUs are scarce). This too tight turnaround strategy by the project can actually be harmful to their overall throughput. | |

| ID: 43451 | Rating: 0 | rate:

| |

|

Some of the new Gerards are definitely a bit long as well, they seem to run about 12.240% per hour, which wouldn't be very much except that's with an overclocked 980ti, nearly the best possible scenario until Pascal later this month. Like others have said, it doesn't affect me, but it would be a long time with a slower card. | |

| ID: 43452 | Rating: 0 | rate:

| |

|

This one took 10 Days 8 hours to get to me https://www.gpugrid.net/workunit.php?wuid=11595052 | |

| ID: 43454 | Rating: 0 | rate:

| |

This one took 10 Days 8 hours to get to me https://www.gpugrid.net/workunit.php?wuid=11595052 Interesting that most of the failures were from fast GPUs, even 3x 980Ti and a Titan among others. Are people OCing too much? In the "research" I mentioned above I've noticed MANY 980Ti, Titan and Titan X cards throwing constant failures. Surprised me to say the least. | |

| ID: 43456 | Rating: 0 | rate:

| |

|

I have some similar experiences: | |

| ID: 43457 | Rating: 0 | rate:

| |

Interesting that most of the failures were from fast GPUs, even 3x 980Ti and a Titan among others. Are people OCing to much? In the "research" I mentioned above I've noticed MANY 980Ti, Titan and Titan X cards throwing constant failures. Surprised me to say the least. There are different reasons for those failures, such as missing libraries, overclocking, or a wrong driver installation. The reasons for timeouts are: too slow a card and/or too many GPU tasks queued from different projects. | |

| ID: 43458 | Rating: 0 | rate:

| |

I have some similar experiences: Here's an interesting one: https://www.gpugrid.net/workunit.php?wuid=11593078 I'm the 8th user to receive this "SHORT" OPM WU originally issued on May 9. The closest to success was by a GTX970 (until the user aborted it). Now it's running on one of my factory OCed 750 Ti cards. That card finishes the GERARD LONG WUs in 25-25.5 hours (yeah, cry me a river). This "SHORT" WU is 60% done and should complete with a total time of about 27 hours. Show me a GPU that can finish this WU in anywhere near 2-3 hours and I'll show you a fantasy world where unicorns romp through the streets. | |

| ID: 43461 | Rating: 0 | rate:

| |

Ubuntu 16.04 has been released recently. I'm looking to try it soon and see if there is a simple way to get it up and running for here; repository drivers + Boinc from the repository. If I can I will write it up. Alas, with every version so many commands change and new problems pop up that it's always a learning process. Straying a bit off topic again, I'll risk posting this. I consider myself fairly computer literate, having built several PCs and having a little coding experience. However, I have nearly always used Windows. I've been very interested in Linux, but every time I've tried to set up a Linux host for BOINC I've been defeated. Either I couldn't get GPU drivers installed correctly or BOINC was somehow not set up correctly within Linux. If anyone would be willing to put together a step-by-step "Idiot's Guide" it would be HUGELY appreciated. | |

| ID: 43462 | Rating: 0 | rate:

| |

|

2lugR6-SDOERR_opm996-0-1-RND3712 2 days, but 6 failures: | |

| ID: 43464 | Rating: 0 | rate:

| |

I've been very interested in Linux, but every time I've tried to set up a Linux host for BOINC I've been defeated. Either I couldn't get GPU drivers installed correctly or BOINC was somehow not set up correctly within Linux. Something always goes wrong for me too, and I question my judgement for trying it once again. But I think when Mint 18 comes out, it will be worth another go. It should be simple enough (right). | |

| ID: 43465 | Rating: 0 | rate:

| |

|

The Error Rate for the latest GERARD_FX tasks is high and the OPM simulations were higher. Perhaps this should be looked into.

| Application | Unsent | In Progress | Success | Error Rate |
|---|---|---|---|---|
| **Short runs (2-3 hours on fastest card)** | | | | |
| SDOERR_opm99 | 0 | 60 | 2412 | 48.26% |
| **Long runs (8-12 hours on fastest card)** | | | | |
| GERARD_FXCXCL12R_1406742_ | 0 | 33 | 573 | 38.12% |
| GERARD_FXCXCL12R_1480490_ | 0 | 31 | 624 | 35.34% |
| GERARD_FXCXCL12R_1507586_ | 0 | 25 | 581 | 33.14% |
| GERARD_FXCXCL12R_2189739_ | 0 | 42 | 560 | 31.79% |
| GERARD_FXCXCL12R_50141_ | 0 | 35 | 565 | 35.06% |
| GERARD_FXCXCL12R_611559_ | 0 | 31 | 565 | 32.09% |
| GERARD_FXCXCL12R_630477_ | 0 | 34 | 561 | 34.31% |
| GERARD_FXCXCL12R_630478_ | 0 | 44 | 599 | 34.75% |
| GERARD_FXCXCL12R_678501_ | 0 | 30 | 564 | 40.57% |
| GERARD_FXCXCL12R_747791_ | 0 | 32 | 568 | 36.89% |
| GERARD_FXCXCL12R_780273_ | 0 | 42 | 538 | 39.28% |
| GERARD_FXCXCL12R_791302_ | 0 | 37 | 497 | 34.78% |

2 or 3 weeks ago the error rate was ~25% to 35%; it's now ~35% to 40%. Maybe this varies due to release stage; early in the runs tasks go to everyone so have higher error rates, later more go to the most successful cards so the error rate drops? Selection of more choice systems might have helped with the OPM but that would also have masked the problems.

GPUGrid has always been faced with users' Bad Setup problems. If you have 2 or 3 GPU's in a box and don't use temp controlling/fan controlling software, or if you overclock the GPU or GDDR too much there is little the project can do about that (at least now). It's incredibly simple to install a program such as NVIDIA Inspector and set it to prioritise temperature, yet so few do this. IMO the GPUGrid app should by default set the temperature control. However that's an app dev issue and probably isn't something Stefan has the time to work on, even if he could do it. I've noticed some new/rarely seen before errors with these WU's, so perhaps that could be looked at too?

On the side-show to this thread 'Linux' (as it might get those 25/26h runs below 24h), the problem is that lots of things change with each version and that makes instructions for previous versions obsolete. Try to follow the instructions tested under Ubuntu 11/12/13 while working with 15.4/10 and you will probably not succeed - the short-cuts have changed, the commands have changed, the security rights have changed, the repo drivers are too old... I've recently tried, without success, to get Boinc on a Ubuntu 15.10 system to see an NV GPU that I popped in. Spent ~2 days at this on and off. System sees the card, X-server works fine. Boinc just seems oblivious. Probably some folder security issue. Tried to upgrade to 16.04 only to be told (after downloading) that the (default sized) boot partition is too small... Would probably need to boot into Grub to repartition - too close to brain surgery to go down that route. Thought it would be faster and easier to format and install 16.04. Downloaded an image onto a W10 system, but took half a day to find an external DVD-Writer and still can't find a DVD I can write the image to (~1.4GB)... ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43466 | Rating: 0 | rate:

| |

|

If the project denied WUs to machines that continually errored and timed out we could have that error rate below 5%. | |

| ID: 43467 | Rating: 0 | rate:

| |

|

I agree on that, no doubt, but where do you draw the line? 50% failure systems, 30%, 10%...? I still think a better system needs to be introduced to exclude bad systems until the user responds to a PM/Notice/Email... It could accommodate resolution to such problems and facilitate crunching again once resolved (helps the cruncher and the project). Sometimes you just get an unstable system on which every task fails until it is restarted and then it works again fine, but even that could and should be accommodated. More often it's a bad setup; wrong drivers, heavy OC/bad cooling, wrong config/ill-advised use, but occasionally the card's a dud or it's something odd/difficult to work out. | |

| ID: 43469 | Rating: 0 | rate:

| |

|

I've been using Kubuntu for 2 - 3 years. | |

| ID: 43470 | Rating: 0 | rate:

| |

I agree on that, no doubt, but where do you draw the line? 50% failure systems, 30% 10%...? I still think a better system needs to be introduced to exclude bad systems until the user responds to a PM/Notice/Email... It could accommodate resolution to such problems and facilitate crunching again once resolved (helps the cruncher and the project). Sometimes you just get an unstable system on which every task fails until it is restarted and then it works again fine, but even that could and should be accommodated. More often it's a bad setup; wrong drivers, heavy OC/bad cooling, wrong config/ill-advise use, but occasionally the card's a dud or it's something odd/difficult to work out. I think you would have to do an impact assessment on the project on what level of denial produces benefits and at what point that plateaus and turns into a negative impact. With the data that this project already has, that shouldn't be difficult. Totally agree with the idea of a test unit. Very good idea. If it is only to last 10 minutes then it must be rigorous enough to make a bad card/system fail very quickly and it must have a short completion deadline. | |

| ID: 43471 | Rating: 0 | rate:

| |

|

The majority of failures tend to be almost immediate <1min. If the system could deal with those it would be of great benefit, even if it can't do much more. | |

| ID: 43474 | Rating: 0 | rate:

| |

Once again I totally agree, and to address your other question: I agree on that, no doubt, but where do you draw the line? 50% failure systems, 30% 10%...? I think you have to be brutal in your approach and give this project "high standards" instead of "come one come all". This project is already an elite one based on the core contributors and the money, time and effort they put into it. Bad hosts hog WU's, slow results and deprive good hosts of work, which frustrates good hosts, which you may lose, and may keep new ones from joining, so "raise the bar" and turn this into a truly elite project; we all know people want to go to TOP clubs, restaurants, universities etc. Heat/cooling/OC related failures would take a bit longer to identify but cards heat up quickly if they are not properly cooled. How long they run before failing is a bit random but would increase with time. Unfortunately you also get half-configured systems; 3 cards, two set up properly, one cooker. What else is running would also impact on temp, but dealing with situations like that isn't a priority. They can get help via the forums as usual but as far as the project is concerned you can't make their problem your problem. | |

| ID: 43475 | Rating: 0 | rate:

| |

|

Some kind of test is a very good idea, but it has to be done on a regular basis on every host, even on the reliable ones, as I think this test should watch for GPU temperatures as well, and if GPU temps are too high (above 85°C) then the given host should be excluded. The actual percentage could be set by scientific means based on the data available for the project, but there should be a time limit for the ban and a manual override for the user (and a regular re-evaluation of the banned hosts). I would set it to 10%. I agree on that, no doubt, but where do you draw the line? 50% failure systems, 30% 10%...? Bad hosts hog WU's, slow results and deprive good hosts of work, which frustrates good hosts, which you may lose, and may keep new ones from joining, so "raise the bar" and turn this into a truly elite project; we all know people want to go to TOP clubs, restaurants, universities etc. I agree partly. I think there should be a queue available only for "elite"=reliable&fast users (or hosts), but basically it should contain the same type of work as the "normal" queue, with the batches separated. In this way a part of the batches would finish earlier, or they could be single-step workunits with very long (24h+ on a GTX 980 Ti) processing times. Heat/cooling/OC related failures would take a bit longer to identify but cards heat up quickly if they are not properly cooled. How long they run before failing is a bit random but would increase with time. Unfortunately you also get half-configured systems; 3 cards, two setup properly, one cooker. What else is running would also impact on temp, but dealing with situations like that isn't a priority. Until our reliability assessment dreams come true (~never), we should find other means to reach the problematic contributors. The project's minimum requirements should be made very clear right at the start (on the project's homepage, in the BOINC manager when a user tries to join the project, in the FAQ etc):

1. A decent NVidia GPU (GTX 760+ or GTX 960+)
2. No overclocking (later you can try, but read the forums)
3. Other GPU projects are allowed only as a backup (0 resource share) project.

Some tips about the above 3 points should be broadcast by the project as a notice on a regular basis. Also there should be someone/something who could send an email to the user who has unreliable host(s), or perhaps their username/hostname should be broadcast as a notice. | |
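To make the proposal above concrete, here is a minimal sketch of such an exclusion rule. It is not how the GPUGrid or BOINC server works; the 10% threshold and the 85°C limit come from the post, while the 72-hour ban length, the class name and the field names are hypothetical choices for illustration.

```python
from dataclasses import dataclass

FAILURE_THRESHOLD = 0.10  # the 10% figure suggested in the post
MAX_GPU_TEMP_C = 85.0     # the 85 °C limit suggested in the post
BAN_HOURS = 72.0          # hypothetical ban length; the post gives no number


@dataclass
class HostStats:
    succeeded: int
    failed: int
    peak_gpu_temp_c: float
    hours_since_ban: float = float("inf")  # inf means the host was never banned
    user_override: bool = False            # the manual override from the post


def failure_rate(host: HostStats) -> float:
    """Fraction of returned tasks that failed (0.0 if nothing was returned)."""
    total = host.succeeded + host.failed
    return host.failed / total if total else 0.0


def may_receive_work(host: HostStats) -> bool:
    """Apply the override, the time-limited ban, then the two health checks."""
    if host.user_override:
        return True
    if host.hours_since_ban < BAN_HOURS:
        return False  # ban still running
    if host.peak_gpu_temp_c > MAX_GPU_TEMP_C:
        return False  # too hot
    return failure_rate(host) <= FAILURE_THRESHOLD


print(may_receive_work(HostStats(succeeded=95, failed=5, peak_gpu_temp_c=70.0)))
```

Once a ban expires the host is simply re-evaluated on its current statistics, which is one way to read the "regular re-evaluation of the banned hosts" in the post.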

| ID: 43476 | Rating: 0 | rate:

| |

|

Communications would need to be automated IMO. Too big a task for admin and mods to perform manually; would be hundreds of messages daily. There is also a limit on how many PM's you and I can send. It's about 30/day for me, might be less for others? I trialled contacting people directly who are failing all workunits. From ~100 PM's I think I got about 3 replies, 1 was 6 months later IIRC. Suggests ~97% of people attached don't read their PM's/check their email or they can't be bothered/don't understand how to fix their issues. | |

| ID: 43477 | Rating: 0 | rate:

| |

|

It would be very helpful, to some (especially to me!), to see a notice returned from the project's server. | |

| ID: 43478 | Rating: 0 | rate:

| |

|

Everything is getting complicated again and unfortunately that's where people tune out and NOTHING gets done. | |

| ID: 43479 | Rating: 0 | rate:

| |

|

From personal experience it's usually the smaller and older cards that are less capable of running at higher temps. It's also the case that their safe temp limit is impacted by task type; some batches run hotter. I've seen cards that are not stable at even reasonable temps (65 to 70C) but run fine if the temps are reduced to say 59C (which while this isn't reasonable [requires downclocking] is still achievable). There were several threads here about factory overclocked cards not working out of the box, but they worked well when set to reference clocks, or their voltage nudged up a bit. | |

| ID: 43483 | Rating: 0 | rate:

| |

On the side-show to this thread 'Linux' (as it might get those 25/26h runs below 24h), the problem is that lots of things change with each version and that makes instructions for previous versions obsolete. Try to follow the instructions tested under Ubuntu 11/12/13 while working with 15.4/10 and you will probably not succeed - the short-cuts have changed the commands have changed the security rights have changed, the repo drivers are too old... Lots of luck. By fortuitous (?) coincidence, my SSD failed yesterday, and I tried Ubuntu 16.04 this morning. The good news is that after figuring out the partitioning, I was able to get it installed without incident, except that you have to use a wired connection at first; the WiFi would not connect. Even installing the Nvidia drivers for my GTX 960 was easy enough with the "System Setting" icon and then "Software Updates/Additional Drivers". That I thought would be the hardest part. Then, I went to "Ubuntu Software" and searched for BOINC. Wonder of wonders, it found it (I don't know which version), and it installed without incident. I could even attach to POEM, GPUGrid and Universe. We are home free, right? Not quite. None of them show any work available, which is not possible. So we are back to square zero, and I will re-install Win7 when a new (larger) SSD arrives. EDIT: Maybe I spoke too soon. The POEM website does show one work unit completed under Linux at 2,757 seconds, which is faster than the 3,400 seconds that I get for that series (1vii) under Windows. So maybe it will work, but it appears that you have to manage BOINC through the website; I don't see much in the way of local settings or information available yet. We will see. | |

| ID: 43485 | Rating: 0 | rate:

| |

|

Thanks Jim, | |

| ID: 43490 | Rating: 0 | rate:

| |

|

More good news: BOINC downloaded a lot of Universe work units too. | |

| ID: 43491 | Rating: 0 | rate:

| |

|

The basic problem appears to be that there is a conflict between the X11VNC server and BOINC. I can do one or the other, but not both. I will just uninstall X11VNC and maybe I can make do with BoincTasks for monitoring this machine, which is a dedicated machine anyway. Hopefully, a future Linux version will fix it. | |

| ID: 43492 | Rating: 0 | rate:

| |

|

Got Ubuntu 16.04-x64 LTS up and running last night via a USB stick installation. | |

| ID: 43493 | Rating: 0 | rate:

| |

|

3d3lR4-SDOERR_opm996-0-1-RND0292 I've received it after 10 days and 20 hours and 34 minutes | |

| ID: 43494 | Rating: 0 | rate:

| |

The POEM website does show one work unit completed under Linux at 2,757 seconds, which is faster than the 3,400 seconds that I get for that series (1vii) under Windows. Not that it matters much, but I must have misread BOINCTasks, and was comparing a 1vii to a 2k39, which always runs faster. So the Linux advantage is not quite that large. Comparing the same type of work units (this time 2dx3d) shows about 20.5 minutes for Win7, and 17 minutes for Linux, or about a 20% improvement (all on GTX 960s). That may be about what we see here. By the way, BOINCTasks is working nicely on Win7 to monitor the Linux machine, though you have to jump through some hoops to set the permissions on the folders in order to copy the app_config, gui_rpc_auth.cfg and remote_hosts.cfg. And that is after you find where Linux puts them; they are a bit spread out as compared to the BOINC Data folder in Windows. It is a learning experience. | |
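The "about 20%" figure depends on which ratio is meant. A small sketch of both readings, using only the two runtimes quoted in the post (the function name is illustrative):

```python
def runtime_comparison(old_minutes: float, new_minutes: float) -> tuple:
    """Return (fraction of time saved, fractional gain in work per unit time)."""
    time_saved = (old_minutes - new_minutes) / old_minutes
    throughput_gain = old_minutes / new_minutes - 1.0
    return time_saved, throughput_gain


# 20.5 minutes under Win7 versus 17 minutes under Linux, as quoted above.
saved, gain = runtime_comparison(20.5, 17.0)
print(f"time saved: {saved:.1%}, throughput gain: {gain:.1%}")
```

With these numbers each task takes roughly 17% less time, which is the same as completing roughly 21% more tasks in a given period, so "about 20%" matches the throughput reading.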

| ID: 43495 | Rating: 0 | rate:

| |

|

How does a host with 2 cards have 6 WUs in progress https://www.gpugrid.net/results.php?hostid=326161 at one time Monday 23 May 8:36 UTC | |

| ID: 43496 | Rating: 0 | rate:

| |

|

On the topic of WU timeouts, while all the issues raised in this discussion can cause them, let me point to the most probable (IMO) cause, thoroughly reported by affected users, but not resolved as yet: | |

| ID: 43498 | Rating: 0 | rate:

| |

On the topic of WU timeouts, while all the issues raised in this discussion can cause them, let me point to the most probable (IMO) cause, thoroughly reported by affected users, but not resolved as yet: "GPUGRID's network issues" The GPUGrid network issues are a problem and they never seem to be addressed. Just looked at the WUs supposedly assigned to my machines and there are 2 phantom WUs that the server thinks I have, but I don't: https://www.gpugrid.net/workunit.php?wuid=11594782 https://www.gpugrid.net/workunit.php?wuid=11602422 As you allude, some of the timeout issues here are due to poor network setup/performance or perhaps BOINC misconfiguration. Maybe someone from one of the other projects could help them out. Haven't seen issues like this anywhere else and have been running BOINC extensively since its inception. Some of (and perhaps a lot of) the timeouts complained about in this thread are due to this poor BOINC/network setup/performance (take your pick). | |

| ID: 43500 | Rating: 0 | rate:

| |

How does a host with 2 cards have 6 WUs in progress https://www.gpugrid.net/results.php?hostid=326161 at one time Monday 23 May 8:36 UTC GPUGrid issues 'up to' 2 tasks per GPU and that system has 3 GPU's, though only 2 are NVidia GPU's! CPU type AuthenticAMD AMD A10-7700K Radeon R7, 10 Compute Cores 4C+6G [Family 21 Model 48 Stepping 1] Coprocessors [2] NVIDIA GeForce GTX 980 (4095MB) driver: 365.10, AMD Spectre (765MB) It's losing the 50% credit bonus but at least it's a reliable system. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43501 | Rating: 0 | rate:

| |

How does a host with 2 cards have 6 WUs in progress https://www.gpugrid.net/results.php?hostid=326161 at one time Monday 23 May 8:36 UTC If it has only 2 CUDA GPU's it should only get 2 WU's per CUDA GPU since this project does NOT send WU's to NON Cuda cards. Card switching is the answer, basically you put 3 cards into one host and get 6 tasks you then take a card out and put it into another host and get more tasks. And if you are asking whether I am accusing Caffeine of doing that....YES. I am. | |

| ID: 43511 | Rating: 0 | rate:

| |

How does a host with 2 cards have 6 WUs in progress https://www.gpugrid.net/results.php?hostid=326161 at one time Monday 23 May 8:36 UTC Work fetch is where Boinc Manager comes into play and confuses the matter. GPUGrid would need to put in more server side configurations and routines to try and better deal with that, or remove the AMD app (possibly), but this problem just happened upon GPUGrid. Setting 1 WU per GPU would be simpler, and more fair (especially with so few tasks available), and go a long way to rectifying the situation. While GPUGrid doesn't presently have an active AMD/ATI app, it sort-of does have an AMD/ATI app - the MT app for CPU's+AMD's: https://www.gpugrid.net/apps.php Maybe somewhere on the GPUGrid server they can set something up so as not to send so many tasks, but I don't keep up with all the development of Boinc these days. It's not physical card switching (inserting and removing cards) because that's an integrated AMD/ATI GPU. Ideally the GPUGrid's server would recognise that there are only 2 NVidia GPU's and send out tasks going by that number, but it's a Boinc server that's used. While there is a way for the user/cruncher to exclude a GPU type against a project (Client_Configuration), it's very hands on and if AMD work did turn up here they wouldn't get any. http://boinc.berkeley.edu/wiki/Client_configuration My guess is that having 3 GPU's (even though one isn't useful) inflates the status of the system; as the system returns +ve results (no failure) it's rated highly, but more-so because there are 3 GPU's in it. So it's even more likely to get work than a system with 2 GPU's with an identical yield. Not sure anything is being done deliberately. It's likely the iATI is being used exclusively for display purposes and why would you want to get 25% less credit for the same amount of work? From experience 'playing' with the use of various integrated and mixed GPU types, they are a pain to set up, and when you get it working you don't want to change anything. That might be the case here. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |

| ID: 43516 | Rating: 0 | rate:

| |

|

x. | |

| ID: 43517 | Rating: 0 | rate:

| |

|

I notice a lot of users have great trouble installing Linux+BOINC. | |

| ID: 43518 | Rating: 0 | rate:

| |

|

"Trickles", not "tickles". | |

| ID: 43519 | Rating: 0 | rate:

| |

|

How to - install Ubuntu 16.04 x64 Linux & setup for GPUGrid | |

| ID: 43520 | Rating: 0 | rate:

| |

3d3lR4-SDOERR_opm996-0-1-RND0292 I've received it after 10 days and 20 hours and 34 minutes Here's the kind of thing that I find most mystifying. Running a 980Ti GPU, then holding the WU for 5 days until it gets sent out again, negating the usefulness of the next user's contribution and missing all bonuses. Big waste of time and resources: https://www.gpugrid.net/workunit.php?wuid=11602161 | |

| ID: 43527 | Rating: 0 | rate:

| |

|

Doesn't bother cooling the $650 card either! | |

| ID: 43528 | Rating: 0 | rate:

| |

|

I have 2 GTX 980 Ti's in my new rig. I have an aggressive MSI Afterburner fan profile, that goes 0% fan @ 50*C, to 100% fan @ 90*C. | |
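For readers unfamiliar with fan profiles, here is a rough sketch of what a curve with those two end points does. It assumes a straight-line ramp between 50°C and 90°C, which the post does not state; an actual MSI Afterburner curve can have any number of intermediate points. The function name is illustrative.

```python
def fan_percent(temp_c: float, low_c: float = 50.0, high_c: float = 90.0) -> float:
    """Fan duty in percent: 0% at or below low_c, 100% at or above high_c.

    The linear ramp in between is an assumption made for this sketch.
    """
    if temp_c <= low_c:
        return 0.0
    if temp_c >= high_c:
        return 100.0
    return (temp_c - low_c) / (high_c - low_c) * 100.0


for temp in (45, 60, 70, 80, 95):
    print(temp, fan_percent(temp))
```

Under that assumption the fan reaches half speed at 70°C and only runs flat out once the card is already at 90°C.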

| ID: 43529 | Rating: 0 | rate:

| |

|

Possibly running other NV projects and not getting back to the GPUGrid WU until BOINC goes into panic mode. | |

| ID: 43530 | Rating: 0 | rate:

| |

I have 2 GTX 980 Ti's in my new rig. I have an aggressive MSI Afterburner fan profile, that goes 0% fan @ 50*C, to 100% fan @ 90*C. # GPU 1 : 74C # GPU 0 : 78C # GPU 0 : 79C # GPU 0 : 80C # GPU 1 : 75C # GPU 0 : 81C # GPU 0 : 82C # GPU 0 : 83C # BOINC suspending at user request (exit) # GPU [GeForce GTX 980 Ti] Platform [Windows] Rev [3212] VERSION [65] That suggests to me that the GPU was running too hot, the task became unstable and the app suspended crunching for a bit and recovered (recoverable errors). Matt added that suspend-recover feature some time ago IIRC. | |
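If you want to pull the peak temperature per card out of a task's stderr rather than eyeballing it, something like the following might do. It assumes the temperature entries look exactly like the `# GPU 0 : 83C` lines quoted above; the function name and the regular expression are my own, not part of the app.

```python
import re
from collections import defaultdict

# Matches entries such as "# GPU 0 : 83C" (format taken from the quoted log).
TEMP_ENTRY = re.compile(r"#\s*GPU\s+(\d+)\s*:\s*(\d+)C")


def peak_temperatures(stderr_text):
    """Return {gpu_index: highest temperature seen} for a stderr dump."""
    peaks = defaultdict(int)
    for gpu, temp in TEMP_ENTRY.findall(stderr_text):
        peaks[int(gpu)] = max(peaks[int(gpu)], int(temp))
    return dict(peaks)


# The temperature entries quoted in the post above.
sample = (
    "# GPU 1 : 74C # GPU 0 : 78C # GPU 0 : 79C # GPU 0 : 80C "
    "# GPU 1 : 75C # GPU 0 : 81C # GPU 0 : 82C # GPU 0 : 83C"
)
peaks = peak_temperatures(sample)
print(peaks)
```

A high peak on its own does not show that the task became unstable; it only tells you which card ran hottest.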

| ID: 43531 | Rating: 0 | rate:

| |

I have 2 GTX 980 Ti's in my new rig. I have an aggressive MSI Afterburner fan profile, that goes 0% fan @ 50*C, to 100% fan @ 90*C. No. I believe you are incorrect. The simulation will only terminate/retry when it says: # The simulation has become unstable. Terminating to avoid lock-up (1) # Attempting restart Try not to jump to conclusions about hot machines :) I routinely search my results for "stab", and if I find an instability message matching that text, I know to reduce my overclock a bit more. | |
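The "search for stab" check is easy to script. This is only a sketch: it assumes you have already saved the task's stderr output as text, and the function name and sample text are made up for illustration (the sample is stitched together from lines quoted in this thread).

```python
def count_instability_messages(stderr_text, needle="stab"):
    """Count the lines of a stderr dump that contain the search text,
    e.g. '# The simulation has become unstable. ...'."""
    return sum(1 for line in stderr_text.splitlines() if needle in line.lower())


# Illustrative sample assembled from lines quoted in this thread.
sample_stderr = """# GPU 0 : 83C
# The simulation has become unstable. Terminating to avoid lock-up (1)
# Attempting restart
# BOINC suspending at user request (exit)
"""

hits = count_instability_messages(sample_stderr)
print(hits)
```

Any non-zero count is the cue to back off the overclock, as described in the post.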

| ID: 43532 | Rating: 0 | rate:

| |

|

Truthfully, I'm not entirely sure a rig with multiple GPUs can even be kept very cool. I keep my 980ti fan profile at 50% and it tends to run at about 60-65C, but the heat makes my case fans and even my CPU cooler fan crank up; I'd imagine a pair of them would get pretty toasty. I am quite sure my fan setup could keep 2 below 70C, but it would sound like a jet engine and literally could not be kept in living quarters. | |

| ID: 43533 | Rating: 0 | rate:

| |

|

The workunit with the worst history I've ever received: | |

| ID: 43542 | Rating: 0 | rate:

| |

... we should find other means to reach the problematic contributors.

I'd like to add the following: 4. Don't suspend the GPU tasks while your computer is in use, or at least set its timeout to 30 minutes. It's better to set up the list of exclusive apps (games, etc) in BOINC manager. 5. If you don't have a high-end GPU & you switch your computer off daily then GPUGrid is not for you. I strongly recommend that the GPUGrid staff broadcast the list of worst hosts & the tips above every month (while needed) | |

| ID: 43543 | Rating: 0 | rate:

| |

|

Wow ok, this thread derailed. We are supposed to keep discussions related just to the specific WUs here, even though I am sure these are very productive discussions in general :) | |

| ID: 43545 | Rating: 0 | rate:

| |

Wow ok, this thread derailed.

Sorry, but that's the way it goes :)

We are supposed to keep discussions related just to the specific WUs here, even though I am sure these are very productive discussions in general :)

It's good to have confirmation that you are reading this :)

I am a bit out of time right now so I won't split threads and will just open a new one because I will resend OPM simulations soon.

Will there be very long ones (~18-20 hours on GTX980Ti)? In that case I will reduce my cache to 0.03 days.

Right now I am trying to look into the discrepancies between projected runtimes and real runtimes as well as credits to hopefully do it better this time.

We'll see. :) I keep my fingers crossed.

The thing with excluding bad hosts is unfortunately not doable as the queuing system of BOINC apparently is pretty stupid and would exclude all of Gerard's WUs until all of mine finished if I send them with high priority :(

This leaves us with broadcasting as the only possibility to make things better. | |

| ID: 43546 | Rating: 0 | rate:

| |

|

There are things within your control that would mitigate the problem. | |

| ID: 43547 | Rating: 0 | rate:

| |

The thing with excluding bad hosts is unfortunately not doable as the queuing system of BOINC apparently is pretty stupid and would exclude all of Gerard's WUs until all of mine finished if I send them with high priority :(

The only complete/long-term way around that might be to separate the research types using different apps & queues. Things like that were explored in the past and the biggest obstacle was the time-intensive maintenance for everyone; crunchers would have to select different queues and be up to speed with what's going on, and you would have to spend more time on project maintenance (which isn't science). There might also be subsequent server issues. If the OPMs were released in the beta queue, would that server priority still apply (is priority applied per queue, per app or per project)? Given how hungry GPUGrid crunchers are these days, how long would it take to clear the prioritised tasks, and could they be drip-fed into the queue (small batches)? | |

| ID: 43548 | Rating: 0 | rate:

| |

Reduce baseline WUs available per GPU per day from the present 50 to 10

That's a good idea. It could even be reduced to 5.

... and reduce WUs per gpu to one at a time.

I'm ambivalent about this. Perhaps a 1 hour delay between WU downloads would be enough to spread the available workunits evenly between the hosts. I see different max tasks per day numbers on my different hosts with the same GPUs; is this how it should be? | |

| ID: 43549 | Rating: 0 | rate:

| |

I believe so. You start at 50; when you send a valid result it goes up by 1, and when you send an error, abort a WU or the server cancels one, it goes back down to 50. 50 is a ridiculously high number anyway and, as you have said, could be reduced to 5 with benefit to user and project. | |
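To make the quota behaviour described in this post concrete, here is a toy model of it. It follows only what the post says (start at the baseline, +1 per valid result, back to the baseline on an error, abort or server cancel); it is not taken from the BOINC server code, and the function and outcome names are made up for illustration.

```python
BASELINE = 50  # baseline WUs per GPU per day mentioned in the post


def update_quota(quota, outcome, baseline=BASELINE):
    """Apply one result to the daily quota, per the rule described above."""
    if outcome == "valid":
        return quota + 1
    if outcome in ("error", "abort", "cancelled"):
        return baseline
    raise ValueError("unknown outcome: %r" % outcome)


quota = BASELINE
history = []
for outcome in ["valid", "valid", "valid", "error", "valid"]:
    quota = update_quota(quota, outcome)
    history.append(quota)
print(history)
```

Under this model two hosts with identical GPUs can show different daily limits simply because one of them has had a more recent error.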

| ID: 43550 | Rating: 0 | rate:

| |

The thing with excluding bad hosts is unfortunately not doable as the queuing system of BOINC apparently is pretty stupid and would exclude all of Gerard's WUs until all of mine finished if I send them with high priority :(

I recall that there was a "blacklist" of hosts in the GTX480-GTX580 era. My host once got blacklisted upon the release of the CUDA4.2 app: this app was much faster than the previous CUDA3.1, so the cards could be overclocked less, and my host began to throw errors until I reduced its clock frequency. It could not get tasks for 24 hours IIRC. However, it seems that later, when the BOINC server software was updated at GPUGrid, this "blacklist" feature disappeared. It would be nice to have this feature again. | |

| ID: 43551 | Rating: 0 | rate:

| |

|

Ok, Gianni changed the baseline WUs available per GPU per day from 50 to 10 | |

| ID: 43554 | Rating: 0 | rate:

| |

Ok, Gianni changed the baseline WUs available per GPU per day from 50 to 10

Thanks! EDIT: I don't see any change yet on my hosts' max number of tasks per day... | |

| ID: 43556 | Rating: 0 | rate:

| |

Ok, Gianni changed the baseline WUs available per GPU per day from 50 to 10

I don't want to sound in any way disrespectful with this post, so please don't take offence. Here goes. WOOHOO! A sign that things CAN be done, instead of "can't do that, not doable". Thank you Stefan and Gianni for taking this first important step to making this project more efficient. When you witness the decline in error rates on the server status page, which, while small, should be evident, you will maybe consider reducing the baseline to 5 and employing other initiatives to reduce errors/timeouts and ensure work is spread evenly/fairly over the GPUGrid userbase. That will make this project faster and more efficient, with a happier and hopefully growing core userbase. | |

| ID: 43557 | Rating: 0 | rate:

| |

... we should find other means to reach the problematic contributors.

I'd like to add the following:

I know my opinions aren't liked very much here, but I wanted to express my response to these 5 proposed "minimum requirements".

1. A decent NVidia GPU (GTX 760+ or GTX 960+) --- I disagree. The minimum GPU should be one that is supported by the toolset the devs release apps for, and one that can return results within the timeline they define. If they want results returned within a 6-week period, and a GTS 250 fits the toolset, I see no reason why it should be excluded.

2. No overclocking (later you can try, but read the forums) --- I disagree. Overclocking can provide tangible performance results when done correctly. It would be better if the task's final results could be verified by another GPU, for consistency, as it currently seems that an overclock that is too high can still result in a successful completion of the task. I wish I could verify that the results were correct, even for my own overclocked GPUs. Right now, the only tool I have is to look at stderr results for "Simulation has become unstable", and downclock when I see it. GPUGrid should improve on this somehow.

3. Other GPU projects are allowed only as a backup (0 resource share) project. --- I disagree. Who are you to define what I'm allowed to use? I am attached to 58 projects. Some have GPU work, some have CPU work, some have ASIC work, and some have non-CPU-intensive work. I routinely get "non-backup" work from about 15 of them, all on the same PC.

4. Don't suspend the GPU tasks while your computer is in use, or at least set its timeout to 30 minutes. It's better to set up the list of exclusive apps (games, etc) in BOINC manager. --- I disagree. I am at my computer during all waking hours, and I routinely suspend BOINC, and even shut down BOINC, because I have some very long-running tasks (300 days!) that I don't want to possibly get messed up as I do things like install/uninstall software or update Windows. Suspending and shutting down should be completely supported by GPUGrid and, to my knowledge, they are.

5. If you don't have a high-end GPU & you switch your computer off daily then GPUGrid is not for you. --- I disagree. GPUGrid tasks currently have a 5-day deadline, to my knowledge. So, if your GPU isn't on enough to complete any GPUGrid task within the deadline, then maybe GPUGrid is not for you.

These "minimum requirements" are... not great suggestions, for someone like me at least. I realize I'm an edge case. But I'd imagine that lots of people would take issue with at least a couple of the 5. I feel that any project can define great minimum requirements by:

- setting up their apps appropriately
- massaging their deadlines appropriately
- restricting bad hosts from wasting time
- continually looking for ways to improve throughput

I'm glad the project is now (finally?) looking for ways to continuously improve. | |

| ID: 43558 | Rating: 0 | rate:

| |

I don't know whether you're right about your opinions not being liked, but you are entitled to them. Opinions are just that; you have a right to espouse and defend them. I for one can see nothing wrong with the above list that you posted. If a host is reliable and returns WUs within the deadline period, I don't think it matters whether it's a 750ti or a 980ti, or whether it runs 24/7 or 12/7. I myself have a running and working 660ti which is reliable and does just that. | |

| ID: 43559 | Rating: 0 | rate:

| |

|

If you like success stories Betting Slip then you can have another one :D | |

| ID: 43562 | Rating: 0 | rate:

| |

I know my opinions aren't liked very much here

That should never make you refrain from expressing your opinion.

but I wanted to express my response to these 5 proposed "minimum requirements".

It was a mistake to call these "minimum requirements"; the list is intended for dummies. Perhaps that makes it ineffective.

These "minimum requirements" are... not great suggestions, for someone like me at least. I realize I'm an edge case. But I'd imagine that lots of people would take issue with at least a couple of the 5.

If you keep an eye on your results, you can safely skip these "recommendations". We can, and we should, refine these recommendations to make them more appropriate and less offensive. I made the wording harsh on purpose to induce a debate. But I can show you results or hosts which validate my 5 points (just browse the links in my post about the workunit with the worst history I've ever received, and the other similar ones). The recommended minimum GPU should be better than the recent (~GTX 750-GTX 660), as the release of the new GTX 10x0 series will result in longer workunits by the end of this year, and the project should not lure new users with lesser cards only to frustrate them in 6 months. | |

| ID: 43563 | Rating: 0 | rate:

| |

If you like success stories Betting Slip then you can have another one :D

Thanks Stefan, and let 'em rip. Hope these simulations are producing the results you expected. | |

| ID: 43566 | Rating: 0 | rate:

| |