| Author |

Message |

|---|

skgiven (Volunteer moderator)

Volunteer tester

Send message

Joined: 23 Apr 09

Posts: 3968

Credit: 1,995,359,260

RAC: 0

Level

Scientific publications

|

|

I expect a cut-down version of the GTX680 to arrive, probably a GTX670 that is ~20% slower (192 fewer shaders). A GTX660Ti could possibly turn up too (384 fewer shaders than a GTX680).

While I suppose a GTX690 (dual GTX680) could arrive, I'm not so sure it's going to happen just yet.

Two smaller OEM cards have already arrived, both GT 640s (28nm, GK107). One is a GDDR5 version and the other uses DDR3.

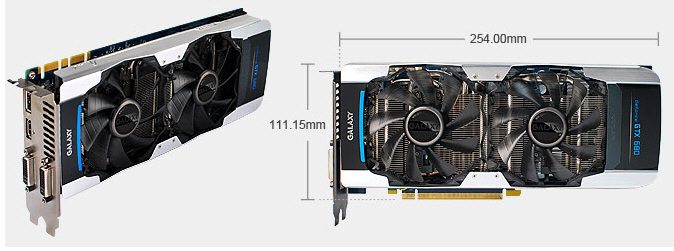

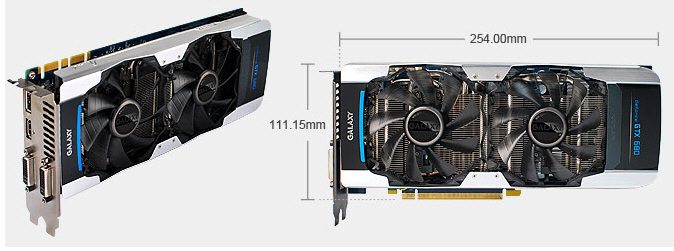

I see the manufacturers have started launching non-reference GTX680 designs.

Galaxy is launching a non-reference, full GTX680 Kepler.

Typically, these come with redesigned boards, higher stock clocks, dual or triple fans, and some offer additional functionality (support for more monitors, more memory...). While 1202/1267MHz, for example, is a nice boost (19%), it's worth noting that some such cards need two 8-pin power connectors.

____________

FAQ's

HOW TO:

- Opt out of Beta Tests

- Ask for Help |

|

|

5pot

Joined: 8 Mar 12

Posts: 411

Credit: 2,083,882,218

RAC: 0

Level

Scientific publications

|

|

690 was just "released". Shown anyway in China. Find it weird that they release this card, when they can't even keep up with supplies for 680. Figure this will be more of a paper release, like the 680 ;) |

|

|

|

|

Find it weird that they release this card, when they can't even keep up with supplies for 680

First, prestige. Second, at double the price of a GTX680 the profit margin is probably comparable, so they don't care whether they sell two GTX680s or one GTX690.

MrS

____________

Scanning for our furry friends since Jan 2002 |

|

|

|

|

|

well, GTX-690 is already official: http://www.geforce.com/whats-new/articles/article-keynote/

as expected, 2 GK-104 on a single board with slightly reduced clocks.. |

|

|

skgiven (Volunteer moderator)

|

|

So the GTX690 is being released on the 3rd May.

Price tag aside, I'm quite impressed with this.

Firstly, I wasn't expecting it quite so soon, given the GPU shortages. This is the first time (in recent years anyway) that NVidia has released a dual-GPU card in a series ahead of AMD/ATI.

Two hardware specs are of obvious interest:

The power requirement is only 300W. Considering a GTX590 has a TDP of 365W, that's a sizable drop. This leaves the door open for OC'ing and might mean there is less chance of cooking the motherboard.

The other interesting thing is the GPU frequency, relative to the GTX680. Both cores are at ~91% of a GTX680. A GTX590's cores were only 78% of a reference GTX580 - which made it more like two GTX570's in terms of performance.
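The clock percentages above can be checked with a quick sketch. The reference base clocks used here (915 MHz for the GTX690, 1006 MHz for the GTX680, 607 MHz for the GTX590, 772 MHz for the GTX580) are assumed values that are not stated in this post, so treat the result as a rough check rather than an official figure.

```python
# Reference base clocks in MHz. These are assumed values, not quoted in the post.
BASE_CLOCK_MHZ = {"GTX690": 915, "GTX680": 1006, "GTX590": 607, "GTX580": 772}

def clock_ratio(dual_card, single_card):
    """Per-GPU clock of the dual card as a fraction of the single-GPU card."""
    return BASE_CLOCK_MHZ[dual_card] / BASE_CLOCK_MHZ[single_card]

ratio_690 = clock_ratio("GTX690", "GTX680")
ratio_590 = clock_ratio("GTX590", "GTX580")

print(f"GTX690 cores run at {ratio_690:.1%} of a GTX680")
print(f"GTX590 cores run at {ratio_590:.1%} of a GTX580")
```

With these assumed clocks the GTX690 lands at about 91% and the GTX590 just under 79%, in line with the rough figures quoted.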

This certainly looks like a good replacement for the GTX590 (if you don't need FP64).

There seems to be a noticeable improvement in the basic GPU design, but I have not yet seen a review of actual performance (noise, heat, temps).

|

|

|

|

|

|

OMFG - ROTFASTC!!

http://www.techpowerup.com/165149/A-Crate-at-TechPowerUp-s-Doorstep.html

A brilliant marketing gag to send a crate in advance, and labeling the box 0b1010110010 is quite simply as geeky as you can get. ;) |
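For anyone who doesn't read binary on sight, the label on the crate can be decoded in a couple of lines. This is just an illustrative sketch; the variable names are made up for the example.

```python
# The label printed on the crate, written as a binary literal string.
label = "0b1010110010"

# int() with base 2 accepts the optional "0b" prefix.
model_number = int(label, 2)

print(model_number)
```

The decoded value is the model number of the card being teased, and `bin(model_number)` gives the label back.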

|

|

5pot

|

|

Pretty funny, but me thinks marketing has a little too much time on their hands

It is incredible looking though. Too bad it sits inside a case. Again, marketing team did a GREAT job. |

|

|

|

|

It is incredible looking though. Too bad it sits inside a case. Again, marketing team did a GREAT job.

design and finish are a real statement. never seen a GPU looking that evil. ;)

i'm sure gaming-performance will be awesome. that's the goal for this thing.

bad news: those freaky-design overclocking suppliers will have a hard time now, and most of all, team red has lost this one.

|

|

|

skgiven (Volunteer moderator)

|

|

0b1010110010 =))

|

|

|

|

|

|

Hi, A simple and direct question.

Does the GTX680 (or the future GTX690) work well and without problems on GPUGRID? Thanks |

|

|

5pot

|

|

So apparently Newegg decided to sell the 690 for $1200 ($200 over EVGA price on their website) http://www.newegg.com/Product/Product.aspx?Item=N82E16814130781 |

|

|

|

|

Does the GTX680 (or the future GTX690) work well and without problems on GPUGRID? Thanks

As far as I know: the new app looks good, but is not officially released yet.

MrS

|

|

|

5pot

|

|

Well the 670 is "basically" the same as the 680, but I would ASSUME will be priced around $400, http://www.tweaktown.com/articles/4710/nvidia_geforce_gtx_670_2gb_video_card_performance_preview/index1.html |

|

|

|

|

|

It's the same chip with similar clocks, but fewer shaders active -> less performance.

MrS

|

|

|

5pot

|

|

Not like the difference between 580 -> 570 though. Rather small, which I guess is a good thing. |

|

|

skgiven (Volunteer moderator)

|

|

Quite the opposite actually!

A GTX570 was ~89% as fast as a GTX580.

The difference in terms of performance between the GTX670 and GTX680 will be ~20% :

1344/1536 shaders * 915/1006 MHz = 0.796 (~80%).

Assumes performance can be calculated in the same way as previous generations...

The GTX580 only had 512 shaders, so disabling just 32 was not a lot.

The GTX680 has three times the shaders (1536), but disabling 192 is relatively twice as much; 32*3 would only be 96.
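A minimal sketch of the estimate above, assuming (as the post does) that performance scales linearly with shader count times clock. The function names are made up for illustration; the shader counts and clocks are the ones quoted in this post.

```python
def relative_performance(shaders, clock_mhz, ref_shaders, ref_clock_mhz):
    """Estimated performance relative to a reference card (shaders x clock)."""
    return (shaders / ref_shaders) * (clock_mhz / ref_clock_mhz)

def disabled_fraction(disabled_shaders, full_shaders):
    """Share of the full chip's shaders that is switched off."""
    return disabled_shaders / full_shaders

# GTX670 (1344 shaders @ 915 MHz) against GTX680 (1536 shaders @ 1006 MHz).
gtx670_vs_680 = relative_performance(1344, 915, 1536, 1006)

# GTX570 loses 32 of 512 shaders; GTX670 loses 192 of 1536.
fermi_cut = disabled_fraction(32, 512)
kepler_cut = disabled_fraction(192, 1536)

print(f"GTX670 vs GTX680: {gtx670_vs_680:.3f}")
print(f"Shaders disabled: GTX570 {fermi_cut:.2%}, GTX670 {kepler_cut:.2%}")
```

The second pair of numbers is where the "relatively twice as much" comes from: 12.5% of the chip is disabled on the GTX670 against 6.25% on the GTX570.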

So I'm hoping for a price closer to £300. When you consider that the HD7970 presently costs ~£370 and the HD7950 can be purchased for as little as £299, the GTX670 is likely to come in at around £330. While this is similar to original GTX470 and GTX570 prices, it would be better value at £300 to £320.

To begin with the lack of supplies will justify a £330 price tag, but unless it beats an HD7970 in most games (unlikely) anything more than £330 would be a rip-off - when it does turn up the HD7950 and HD7970 prices are likely to fall again.

At present most UK suppliers don't have any GTX680s, and are listing them at £400 to £420 (stock delayed by anything from 3-4 weeks down to 4 days).

I did see a GTX690 at £880 (5-7 days), but at that price what you save in terms of performance per Watt would be lost in the original price tag.

Anyway, £400 * 0.8 = £320.

When the GTX670 does arrive, I would not expect its price to fall for a couple of months, or at least until GTX660's turn up (two of which may or more likely may not be a viable alternative).

No idea when AMD/ATI will have their next refresh, but it's likely to be a while (few months), and might depend more on the new memory than anything.

If anything it will probably be competition between the rival manufacturers that drives prices down (and up), with various bespoke implementations.

|

|

|

5pot

|

|

Gaming performance though doesn't seem to make much difference. But your math is always better than mine lol, so would it be "safe" to say a 670>580 here? Based on the #'s? |

|

|

|

|

so would it be "safe" to say a 670>580 here? Based on the #'s?

They'll be close, and power consumption of the newcomer will obviously be better. Anything more specific is just a guess, IMO, until we've got the specs officially.

@SK: AMD could give us a 1.5 GB HD7950 to put more pressure on the GTX670.

MrS

|

|

|

5pot

|

|

Here come the 670's

http://www.shopblt.com/cgi-bin/shop/shop.cgi?action=enter&thispage=011004001505_BPF8397P.shtml&order_id=!ORDERID!

http://www.overclock.net/t/1251038/wccf-msi-geforce-gtx-670-oc-edition-spotted-in-the-wild

This is the most interesting and in-depth one; apparently someone in Australia got their hands on one already:

http://www.overclock.net/t/1253432/gigabyte-gtx-670-oc-version-hands-on

From all 30 pages of posts, it appears the 670 can OC MUCH higher, due to the fact that it has 1 SMX disabled. This guy was getting something like 1300MHz, and the voltage was still locked at 1.175 V. Don't know how this will translate to here, but found it VERY interesting.

For $400, it will be interesting to see what happens. |

|

|

skgiven (Volunteer moderator)

|

|

A 1.5GB HD7950 would be a good card to throw into the mix. Price likely to be ~£250 to £280, so very competitive. It would also go some way to filling the gap between the HD7950 and HD7870, and compete against any forthcoming GTX 660Ti/660 which are anticipated to arrive with 1.5GB.

All good news for crunching.

|

|

|

5pot

|

|

His had an 8+6, and he never posted power draw (wouldn't expect him to). But at this price point, and with it competing with or beating a 580 at less power, I'm gonna pick one up myself once GDF gets this app out.

Multiple "rumors" out not to expect the 660Ti for quite some time. This may be the August release card. |

|

|

|

|

The card would officially launch and be available globally by May 7th for a price of $399.

It's already Monday (7th May) in Australia. But I wonder, how did this guy get one so early in the morning? :) |

|

|

5pot

|

|

Midnight release?

GPUz still says May 10th for release date. So assuming May 7th, we should be hearing something "official" from NVIDIA tomorrow right?

EDIT. The way it's looking so far, the higher clock of the 670 keeps up with, and may beat a 680 despite losing a SMX. This will be interesting indeed. |

|

|

wiyosaya

Joined: 22 Nov 09

Posts: 114

Credit: 589,114,683

RAC: 0

Level

Scientific publications

|

|

I don't think this is particularly the right thread for this info, but since the conversation has covered some of this already - some benchmarks on GTX 670 compute performance are out. It looks like the 670 is available at the same price as GTX 580s are now. For single-precision, the 670 looks to be on par with the 580 and close to the 680 in performance. The advantage, as I see it, the 6XX series has over the 5XX series is significantly better power consumption. So flops/watt is better with the 6XX series than with the 5XX series.

However, for double-precision performance, the 580 is about double the 680. So, if you are running projects that need or benefit from DP support and given the lower prices on 580s at the moment, a 580 may be a better buy, and perhaps more power-efficient, too.

Here is the compute performance section of the one 670 review I have seen.

|

|

|

Zydor

Joined: 8 Feb 09

Posts: 252

Credit: 1,309,451

RAC: 0

Level

Scientific publications

|

|

Guru3d review of the 670 is out - including a 2 and 3 way SLI review

http://www.guru3d.com/news/four-geforce-gtx-670-and-23way-sli-reviews/

Regards

Zy |

|

|

5pot

|

|

Ordered a Gigabyte 670. Should be here Monday. Hope the Windows app is up soon. $400.

EDIT: OUT OF STOCK glad I ordered :-) |

|

|

|

|

|

The only BOINC project I know which currently uses DP is Milkyway.. where the AMDs are superior anyway.

MrS

|

|

|

skgiven (Volunteer moderator)

|

|

I agree, neither a Fermi nor a Kepler is any good at FP64. It's just that Kepler is relatively less good. Neither should be considered practical for MW. Buying a Fermi rather than a Kepler is just sitting on the fence. If you want to contribute to MW, get one of the recommended AMD cards. Even a cheap second-hand HD 5850 would outperform a GTX580 by a long way. If you want to contribute here, get one of the recommended cards for here. As yet these don't include Kepler, because I have not updated the list, and won't until there is a live app. That said, we all know the high-end GTX690, GTX680 and GTX670s will eventually be the best performers.

|

|

|

wiyosaya

|

|

I went through the decision making process of GTX 580 or AMD card. The older AMD cards are getting hard to find. Basically, a used one for something in the 5XXX series from e-bay is about the only realistic choice.

6XXX series can still be found new, and if you can catch one, e-bay has some excellent deals.

However, Milkyway is one of the very few projects that have AMD GPU applications.

So, I went with the 580. It is not that far behind the top AMD cards on MW, and it is proving to be about 3X as fast as my GTX 460 on MW and on GPUGrid. IMHO, it is a far more flexible card for BOINC in that it is supported by far more BOINC projects than AMD; thus, the reasoning behind my choice. Plus, I got it new for $378 after rebate, and for me, it was by far the "value" buy.

I expect that I run projects a bit differently from others, though. On weekends, I run GPUGrid 24x7 on my GPUs and do no work for other projects due to the length of the GPUGrid WUs. Then during the week, I take work from other projects but not GPUGrid. The other project's WUs complete mostly in under 30 mins except for Einstein which takes about an hour on the 580, 80 min on the 460 and 100 min on my 8800 GT; these times are all well within the bounds of the length of time I run my machines during the week.

My aim is to get maximal value from the few machines I run. If I had more money, I might run a single machine with an AMD card and dedicate that GPU to MW or another project that has AMD support. However, I am building a new machine that will host the 580. At some point, I may put an AMD card in that machine if I can verify that both the 580 and the AMD card will play nice together, but not yet. The wife is getting nervous about how much I've spent so far on this machine. LOL

|

|

|

skgiven (Volunteer moderator)

|

|

The Folding@Home CUDA benchmark is interesting, as it has a reference GTX670 within 6% of a reference GTX680.

Anandtech, http://images.anandtech.com/graphs/graph5818/46450.png

Despite using CUDA this app is very different to the one here and it would be wrong to think such performance is likely here.

Going by the numerous manufacturers' implementations, I'm now expecting substantial variations in performance from GTX670 cards; from what I can see the boost speeds will tend upwards from the reference rate (980MHz), with some factory overclocks (FOCs) reaching ~1200MHz (+22%), which would best a reference GTX680. Overclockers have gone past this, and 1250 or 1266MHz is common. Some even report >1300MHz on air. Of course a GTX680 can overclock too. For here the OC has to be sustainable: stable for weeks/months rather than minutes.
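To put the clocks mentioned above side by side, here is a small sketch that expresses each one as a gain over the 980MHz reference boost. The helper name is made up for the example, and it says nothing about whether a given clock is stable for long crunching runs.

```python
REFERENCE_BOOST_MHZ = 980  # reference GTX670 boost clock quoted in the post

def overclock_gain(clock_mhz, reference_mhz=REFERENCE_BOOST_MHZ):
    """Fractional clock increase over the reference boost clock."""
    return clock_mhz / reference_mhz - 1

# Clocks mentioned in the post: factory OC, common manual OCs, best on air.
gains = {clock: overclock_gain(clock) for clock in (1200, 1250, 1266, 1300)}

for clock, gain in gains.items():
    print(f"{clock} MHz: +{gain:.1%} over reference boost")
```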

|

|

|

|

|

|

This is a little off-topic, but I'll add my 2 cents anyway: buying anything from AMD older than the 5000 series wouldn't make sense, since these 55+ nm chips are less power efficient. Currently the best bet would probably be a Cayman chip. An unlocked HD6950 is still very good value.

At MW my Cayman, which I bought over 1 year ago for a bit over 200€, was doing a WU each ~50s. I guess that's tough to beat for a GTX580 ;)

Anyway, it's running POEM now: more credits, less power consumption, hopefully more useful science :)

MrS

|

|

|

|

|

|

What to buy? I have 2 open slots for graphics cards so I continue to troll ebay for GTX 570s and 580s at good prices. I purchased a 580 for about $300 and have an active auction for another at about the same price.

Is it a good idea to pick up a 580 right now or just wait a bit and see what happens to the resale value of these cards? Should I wait for the GTX 670s to come down in price a bit as they appear to overclock very well? What is the expected performance per watt advantage of the 6x0 series? Is it 20% or 50%? And now for the big question, would it be better to look at the 590s? We don't have that many working on the project, but with limited slots the 590 would appear to be a good way to increase performance.

Advice? Used 580s @ $300 now? Wait and see on GTX 580s? Wait and move to 670s? Start looking for 590s?

____________

Thx - Paul

Note: Please don't use driver version 295 or 296! Recommended versions are 266 - 285. |

|

|

5pot

|

|

From what I've been reading, a 670 will AT LEAST be able to be on par if not 10% faster than 580 (670 @ stock), and OC'd it should be able to be pretty close to a 680.

The main advantages of spending the extra $100, are decrease in runtimes, LESS POWER, and LESS HEAT.

Don't think anyone has attached a 670 to GPUgrid for betas yet, but it should be worth the extra money, or extra wait in time until the extra money is in hand.

The 680 is completing the betas around 50 sec (one was consistently), while I believe Stoneageman's 580 was around 75 secs. So as the researchers said, a 680 is about 30% faster.
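"Faster" can be read two ways here, so a quick sketch using the two beta runtimes quoted above may help. The function names are made up for illustration, and the runtimes are the rough figures from this post, not measured benchmarks.

```python
def time_saved(new_seconds, old_seconds):
    """Fraction of runtime saved by the newer card."""
    return 1 - new_seconds / old_seconds

def throughput_gain(new_seconds, old_seconds):
    """Extra work completed per unit of time by the newer card."""
    return old_seconds / new_seconds - 1

GTX680_SECONDS = 50  # approximate beta runtime quoted for the 680
GTX580_SECONDS = 75  # approximate beta runtime quoted for the 580

saved = time_saved(GTX680_SECONDS, GTX580_SECONDS)
gain = throughput_gain(GTX680_SECONDS, GTX580_SECONDS)

print(f"Runtime saved: {saved:.0%}, throughput gain: {gain:.0%}")
```

On these numbers the 680 takes about a third less time per task, which is roughly the "30% faster" figure; counted as tasks per hour the gain is larger.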

Whenever Windows is released I'll have 670 waiting, and will post results. |

|

|

|

|

|

I don't have new hard numbers, but Kepler is looking quite good for GPU-Grid. Comparing GTX580 and GTX670 you'll save almost 100 W in typical gaming conditions. At 0.23 €/kWh that's 200€ less of electricity cost running 24/7 for a single year. I can't say how much the cards will draw running GPU-Grid, but I'd expect about this difference, maybe a bit less (since overall power draw may be smaller).

That would mean choosing a GTX670 over a GTX580 would pay for itself in about 6 months.. pretty impressive!
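The arithmetic behind that estimate can be sketched as follows. The 100 W saving and the 0.23 €/kWh price come from this post; the 100 € price premium is a hypothetical figure chosen only to illustrate the payback calculation, and the function names are made up for the example.

```python
HOURS_PER_YEAR = 24 * 365  # running 24/7 for one year

def annual_saving_eur(power_saving_w, price_eur_per_kwh):
    """Yearly electricity cost avoided by drawing less power around the clock."""
    energy_kwh = power_saving_w / 1000 * HOURS_PER_YEAR
    return energy_kwh * price_eur_per_kwh

def payback_months(price_premium_eur, yearly_saving_eur):
    """Months until the electricity saving covers the extra purchase price."""
    return price_premium_eur / (yearly_saving_eur / 12)

saving = annual_saving_eur(100, 0.23)
months = payback_months(100, saving)  # 100 EUR premium is a hypothetical value

print(f"Saving per year: {saving:.2f} EUR, payback after {months:.1f} months")
```

If the real power difference under GPU-Grid load is smaller than 100 W, the payback time stretches proportionally.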

MrS

|

|

|

skgiven (Volunteer moderator)

|

|

A reference GTX580 has a TDP of 244W, and a GTX670 has a TDP of 170W.

GPUGrid apps typically use ~75% of the TDP. So a GTX580 should use around 183W and a GTX670 should use around 128W. The GTX670 would therefore save you ~55W.
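The same estimate as a short sketch, assuming the ~75% rule of thumb holds equally for both cards (which is an assumption of this post, not a measurement). The names are made up for illustration.

```python
LOAD_FRACTION = 0.75  # rule-of-thumb share of TDP drawn by GPUGrid apps

def estimated_draw_w(tdp_w, load_fraction=LOAD_FRACTION):
    """Estimated power draw in watts under GPUGrid load."""
    return tdp_w * load_fraction

gtx580_draw = estimated_draw_w(244)  # reference GTX580 TDP
gtx670_draw = estimated_draw_w(170)  # reference GTX670 TDP
saving_w = gtx580_draw - gtx670_draw

print(f"GTX580 ~{gtx580_draw:.0f} W, GTX670 ~{gtx670_draw:.1f} W, saving ~{saving_w:.1f} W")
```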

|

|

|

|

|

|

You're assuming Keplers use the same percentage of their TDP-rating as Fermis. That may not be true for several reasons:

- different architecture

- on high end GPUs nVidia tends to specify unrealistically low TDPs (compared to lower end parts of the same generation)

- for Keplers the actual power consumption is much more in line due to their Turbo mode, this essentially makes them use approximately their maximum boost target power under typical load

For the 100 W difference in games I'm referring to such measurements.

MrS

|

|

|

|

|

For the 100 W difference in games I'm referring to such measurements.

probably that's about the range we will see. talking about at least 2 kWh per day.

how many tons of CO₂ per year?

|

|

|

5pot

|

|

When running 3 Einstein WUs concurrently, at 1200 clock and 3110 memory, the Precision power usage monitor says around 60-70% at 85-90% GPU usage. |

|

|

|

|

|

NVIDIA GeForce GTX 680: Windows 7 vs. Ubuntu 12.04 Linux

NVIDIA GeForce graphics comparisons between Windows and Linux

http://www.phoronix.com/scan.php?page=article&item=nvidia_gtx680_windows&num=1 |

|

|

Dagorath

Joined: 16 Mar 11

Posts: 509

Credit: 179,005,236

RAC: 0

Level

Scientific publications

|

|

I've been checking out 680 cards and they're all PCIe 3.0. Would I take a performance hit if I put one in a PCIe 2.0 x16 slot? There would be no other expansion cards in that machine so there would be no lane sharing.

edit added:

This is the mobo |

|

|

5pot

|

|

It shouldn't, maybe a little but no one knows yet. But I would wait a little while until everything is sorted out with this project in regards to 6xx.

Wish NVIDIA dev's would fix this x79 PCIE issue (currently only allows PCIE 2.0 until they "certify" everything). Once this happens I'll be able to report the differences in runtimes between varying PCIE speeds (can switch it on mobo in BIOS).

|

|

|

Dagorath

|

|

I guess I've missed something somewhere. What's not sorted out wrt 6xx series?

|

|

|

skgiven (Volunteer moderator)

|

|

Yes, but this is untested. My guess ~4.5%

AMD motherboards/CPUs don't support PCIE 3.0!!

Also, worth noting again that there is no XP support for GTX600's.

For a single card I'm thinking IB is the best option, but outlay for such a rig is very high, and I'm convinced that moving from SB to IB is not a realistic upgrade; it's more of a detour!

As AMD offer much cheaper systems, they make good sense: >90% as efficient at half the outlay price. Running costs are open to debate, especially if you replace often.

So, for a single card (especially GTX670 or less) don't let PCIE2 deter you.

|

|

|

5pot

|

|

@Dagorath,

I was just referring to the issues in the researchers' coding and getting the app out. Once the actual app is out, we will be able to test the difference between PCIE 2 & 3 for ourselves.

But as skgiven noted, PCIE 3 is not on AMD cpus and motherboards. |

|

|

Dagorath

|

|

@5pot,

OK. I thought you were saying this project might end up not supporting 6xx. It's just PCIe 2 vs. PCIe 3 that needs investigation.

@skgiven

I think I'll go for a 680 on this PCIe 2 board. All I'll need is the GPU and a PSU upgrade. If performance isn't decent I'll upgrade the mobo later, maybe when cost of IB goes down a bit, maybe never. With more and more projects supporting GPUs, buying powerful CPUs doesn't make as much sense as it used to. A good GPU, even with a small performance hit from running on PCIe 2.0 rather than PCIe 3.0, still gives incredible bang for buck compared to a CPU.

|

|

|

|

|

|

View PCIe 3 as a bonus if you get it, but nothing else. At least for GPU-Grid.

For a single card I'm thinking IB is the best option, but outlay for such a rig is very high, and I'm convinced that moving from SB to IB is not a realistic upgrade; it's more of a detour!

Of course it's not an upgrade which makes sense for people already owning a SB - it's not meant to be, anyway. However, IB is a clear step up from SB. It's way more power efficient and features slightly improved performance per clock. Never mind the people complaining about overclockability. Which cruncher in his/her right mind is pushing his SB to 4.5+ GHz at 1.4 V for 24/7 anyway? And if you run IB at ~4.4 GHz and ~1.1 V you'll be a lot more efficient than if you'd push a SB up to this clock range. I'd even say you could run IB 24/7 in this mode.

MrS

|

|

|

|

|

View PCIe 3 as a bonus if you get it, but nothing else. At least for GPU-Grid.

For a single card I'm thinking IB is the best option, but outlay for such a rig is very high, and I'm convinced that moving from SB to IB is not a realistic upgrade; it's more of a detour!

Of course it's not an upgrade which makes sense for people already owning a SB - it's not meant to be, anyway. However, IB is a clear step up from SB. It's way more power efficient and features slightly improved performance per clock. Never mind the people complaining about overclockability. Which cruncher in his/her right mind is pushing his SB to 4.5+ GHz at 1.4 V for 24/7 anyway? And if you run IB at ~4.4 GHz and ~1.1 V you'll be a lot more efficient than if you'd push a SB up to this clock range. I'd even say you could run IB 24/7 in this mode.

MrS

I run my 2600k @ 4.5 GHz @ 1.28V 24/7. But I also run 7 threads of either WCG or Docking at the same time.

At the moment I'm sticking with my 560ti. Clocked at 925 it completes long tasks in 8-15 hours.

Would love to upgrade to Kepler. Being in Australia though means an automatic 50% markup in price sadly. I will not pay $750 for a 680 or $600 for a 670.

Guess I'll be waiting until the 780's or whatever they end up calling them come out and push the prices down(I hope)

|

|

|

5pot

|

|

x79 for me from now on. 40 PCIe lanes direct to CPU. Pure goodness. No detours, and higher lane bandwidth with multiple cards.

Right now at MC, a 3820 can be had for $230 in store. Kinda hard to beat that deal.

If using Windows, you currently gotta hack registry (easy to do) to get PCIe 3 working though. |

|

|

|

|

Despite using CUDA this app is very different to the one here and it would be wrong to think such performance is likely here.

skgiven,

Can you explain that statement a bit more?

I am trying to select my next gpugrid-only card with a budget of $400 - $500 ( max).

It will run gpugrid 24x7.

Right now it looks like the 670 is my best value.

Any additional data, formal or anecdotal is greatly appreciated.

Ken |

|

|

|

|

|

It means that Folding@Home is a different CUDA project. Which means that the relative performance of cards with different architectures may not be the same on GPU-Grid.

Anyway, the app supporting Kepler should be out in a few days and we'll have hard numbers. So far GTX670 looks pretty good here.

MrS

|

|

|

skgiven (Volunteer moderator)

|

|

Nice specs from VideoCards, albeit speculative:

| GeForce 600 Series | GPU | CUDA Cores | Memory | Memory Interface | Launch Price | Release |
|---|---|---|---|---|---|---|
| GeForce GT 640 D3 | GK107 | 384 | 1GB / 2GB GDDR3 | 128-bit | $99 | April |
| GeForce GT 640 D5* | GK107 | 384 | 1GB / 2GB GDDR5 | 128-bit | $120 | August |
| GeForce GT 650 Ti* | GK106 | 960 | 1GB / 2GB GDDR5 | 192-bit | $120 – $160 | August |
| GeForce GTX 660* | GK106 | 1152 | 1.5 GB GDDR5 | 192-bit | $200 – $250 | August |
| GeForce GTX 660 Ti* | GK104 | 1344 | 1.5GB / 3GB GDDR5 | 192-bit | $300 – $320 | August |
| GeForce GTX 670 | GK104 | 1344 | 2GB / 4GB GDDR5 | 256-bit | $399 | May |
| GeForce GTX 680 | GK104 | 1536 | 2GB / 4GB GDDR5 | 256-bit | $499 | March |
| GeForce GTX 690 | 2xGK104 | 3072 | 4GB GDDR5 | 512-bit | $999 | May |

*Not yet released

http://videocardz.com/33814/nvidia-readies-geforce-gtsx-650-ti-for-august

The 660Ti might be a sweet card for here, if the specs are true, and we would have a good mid-range to choose from. No mention of a 768 card though, and there is a bit of a gap between 960 and 384. Their imagination doesn't seem to stretch down to a GT 650 (no Ti, 768). I can't see a GT650Ti going for the same as a GT640 either, and if a 960 card cost $120, you could just buy 2 and outperform a GTX680 (all else being equal) and save ~$260. With those specs 3 GT650Ti's would almost match a GTX690, but cost ~half as much.
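The comparisons above can be reproduced with a short sketch. It takes the speculative core counts and launch prices from the list, uses the low end of the GT 650 Ti price range ($120) as the post does, and uses 1536 cores for the GTX680, the figure given earlier in the thread. "All else being equal" here means comparing raw core counts only, ignoring clocks and memory bandwidth.

```python
# (CUDA cores, launch price in USD), speculative figures from the list above.
CARDS = {
    "GT650Ti": (960, 120),   # low end of the $120 to $160 range
    "GTX680": (1536, 499),
    "GTX690": (3072, 999),
}

def combo(card, count):
    """Total cores and total price for several identical cards."""
    cores, price = CARDS[card]
    return cores * count, price * count

two_cores, two_price = combo("GT650Ti", 2)
three_cores, three_price = combo("GT650Ti", 3)

saving_vs_680 = CARDS["GTX680"][1] - two_price
core_ratio_vs_690 = three_cores / CARDS["GTX690"][0]
price_ratio_vs_690 = three_price / CARDS["GTX690"][1]

print(f"2x GT650Ti: {two_cores} cores for ${two_price}, ${saving_vs_680} less than a GTX680")
print(f"3x GT650Ti: {core_ratio_vs_690:.1%} of a GTX690's cores at {price_ratio_vs_690:.0%} of the price")
```

At the low-end price, three cards cost well under half a GTX690; at the $160 high end the total would be $480, which is where "~half" comes from.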


|

|

|