NVidia GTX 650 Ti & comparisons to GTX660, 660Ti, 670 & 680


As expected, NVidia has released an additional GeForce card in the form of the GTX650Ti, filling out the GeForce 600 range.

ID: 27059

Update: the GTX660 with GK106 and 960 shaders at 980 MHz went retail a few weeks ago.

ID: 27060

Several e-tailers are offering a free download code for Assassin's Creed III. This could probably be sold (e.g. on eBay) for at least $30, making this a VERY high-value card IMO!

ID: 27117

Yes, I read about the release of this card too and was impressed by the number of CUDA cores.

ID: 27129

> Several e-tailers are offering a free download code for Assassin's Creed III. This could probably be sold (e.g. on eBay) for at least $30, making this a VERY high-value card IMO!

Well, I was able to sell the Assassin's Creed III code for $43. But the buyer is claiming the code is "already used", so I may end up out the $43 with nothing to show for it if the buyer requests a refund through PayPal. :(

____________
XtremeSystems.org - #1 Team in GPUGrid

ID: 27178

Ouch, that's pretty bad! By now you won't be able to use the code either, and you'll never know whether that guy is happily running the game. Or is there anything the game distributor could do to help here, such as invalidating all current installations using this code?

ID: 27179

The buyer tried it again the next day and it worked!

ID: 27187

For the price it's a fantastic number cruncher. It's doing a long work unit in 8 hours!

ID: 27200

My 650 Ti is currently posting slightly better averages than my 560, and it has not peaked yet, so I'm not exactly sure what its peak performance is.

ID: 27292

About 190k RAC using only long-run tasks.
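BOINC's RAC (recent average credit) is an exponentially decaying average with a half-life of one week, which is why a new card "has not peaked yet" after only a few days. The sketch below assumes a perfectly steady daily credit starting from zero and ignores the stepwise updates BOINC actually makes when tasks validate; `rac_after_days` is a hypothetical helper, not a BOINC function.

```python
HALF_LIFE_DAYS = 7.0  # BOINC's RAC half-life


def rac_after_days(daily_credit: float, days: float, half_life: float = HALF_LIFE_DAYS) -> float:
    """RAC of a host that started at zero and has earned a constant daily_credit since."""
    # Each half-life closes half of the remaining gap between RAC and daily_credit.
    return daily_credit * (1.0 - 0.5 ** (days / half_life))


for d in (7, 14, 28):
    print(d, round(rac_after_days(190_000, d)))
```

Under these assumptions a card that really earns 190k/day shows about half of that after one week and is still a few percent short after four weeks.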

ID: 27293

How does it do for desktop lag? I usually use a dedicated card for DC projects, but this time I can only free up the display card slot. I do some video editing, but otherwise just browse the web with Firefox.

ID: 27378

Worst case, you can just disable "Use GPU while computer is in use" in BOINC.

ID: 27379

With my GTX660Ti I didn't notice disturbing desktop lag, although HD video playback may sometimes have dropped below 25 fps for short periods. But it has a lot more raw horsepower than a GTX650Ti.

ID: 27380

Short test with a GTX650Ti (MSI Power Edition/OC) and ACEMD2: GPU molecular dynamics v6.16 (cuda42), i7-3770K @ 3.8 GHz, 9 threads in BOINC (8x SIMAP, 1x GPUGrid); it's no problem if this slows performance down. Progress advances steadily on all nine threads.

ID: 27400

(edit timeout?)

ID: 27406

Your core OC still yielded more, percentage-wise, than your memory OC. However, the GT640, GTX650Ti and GTX660Ti are less balanced than typical cards, in that they pack a surprising amount of shader power into a package with "usually just enough" memory bandwidth. In games these cards love memory OCs, much more so than typical cards. It seems that on these cards GPU-Grid is finally also affected by the memory clock by a measurable amount.

ID: 27417

Thank you. Memory controller load (MC) is ~48% in OC mode; after switching to normal mode (= MSI core OC, memory at stock) it is ~46%. Underclocking the core to 928 MHz was not possible; the offset of -65 was not accepted.

ID: 27425

> Should I try a long-run workunit?

Yes...

____________
FAQ's
HOW TO:
- Opt out of Beta Tests
- Ask for Help

ID: 27426

Two long-run workunits finished (GTX 650 Ti; GK106, 1GB). These look to be different types; sorry, but there are not enough samples to show the effect of OCing:

ID: 27464

Thanks for collecting and sharing that data. This should be enough for anyone who wants to know how well this card (and some comparably fast ones) performs :)

ID: 27465

Interesting read.

> - 54,151 s: 101,850 credits - bonus missed?

The bonus wasn't missed; the task was returned and reported inside 24h. In this case the credit is lower than might be expected because of the WU type; you can see the credit doesn't line up with other WUs of similar runtime. Usually WUs grant the same credit per unit of time (give or take a very small percentage); however, some were not assessed accurately enough and the credit awarded is skewed one way or the other, this time downwards compared to the others. So you really have to compare like-for-like Work Units to get enough accuracy to compare card performance. The tasks that were granted 60,900 credits are the giveaway for GPU comparison.

Of note is that the GTX650Ti matches a GTX560 for performance. This is a good generation-on-generation marker. With more powerful cards, other variables (and there are many that influence performance) can significantly affect a GPU comparison. Yesterday I was looking at two GPUs of the same type on different OSs. I wanted to work out the relative performance influence of the operating systems, but I encountered a discrepancy; basically it was due to the system RAM, DDR2-800 vs DDR3-2133. It's been a while since I've encountered such an unbalanced system. You just can't configure your way out of slow memory.

At this stage I'm fairly convinced that a GTX660Ti matches a GTX580, so we have a few generation-on-generation markers. I think it's still up for debate whether or not the GTX660Ti fully matches a GTX670. The speculation is that it does, or near enough, but without hard figures we don't know for sure.

ID: 27476

Many thanks for the explanations!

ID: 27478

Yeah, thanks, a perfect analysis of the 650 Ti; I was waiting for something like that :)

ID: 27479

Hi, GDF:

ID: 28625

John,

ID: 28626

http://www.newegg.com/Product/ProductList.aspx?Submit=ENE&DEPA=0&Order=BESTMATCH&N=-1&isNodeId=1&Description=gtx+650&x=0&y=0

ID: 28627

Many thanks for your help, Jim1348. Prices in the US and Canada appear similar these days.

ID: 28628

Many thanks, Dylan. Both you and Jim1348 have given me enough information to start the hunt!

ID: 28629

Be sure to get a GTX 650 Ti, as it's massively faster than a GTX 650 for crunching. Oh, that sweet naming madness...

ID: 28644

The GTX 650 Ti is twice as fast as the GTX 650, and costs about 35% more. It's well worth the extra cost.

ID: 28645

Hi,

ID: 28913

John;

ID: 28919

John, your issue is caused by the new features used in the NOELIA WUs. It's not related to your card at all.

ID: 28993

Many thanks to all for the responses. Processing short tasks and non-NOELIA tasks: all well.

ID: 29020

Thought I'd follow up on GTX 650 Ti performance...

ID: 29168

Going by your runtimes, I would say you are getting around 190K/day.
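Turning a runtime into a daily figure is tasks per day times credit per task. The sketch below assumes the card runs one task at a time, back to back, around the clock, and that the credit per task already includes any return bonus; `credits_per_day` is a hypothetical helper. The example uses the GTX 660 result posted later in this thread (21,043.76 s for 70,800 credits).

```python
SECONDS_PER_DAY = 86_400


def credits_per_day(runtime_s: float, credit_per_task: float) -> float:
    """Daily credit for a GPU that runs identical tasks back to back, 24/7."""
    tasks_per_day = SECONDS_PER_DAY / runtime_s
    return tasks_per_day * credit_per_task


print(round(credits_per_day(21_043.76, 70_800)))
```

That works out to roughly 290K/day; the RAC actually shown will usually be lower once idle gaps between tasks and lower-credit WU types are counted.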

ID: 29169

Over the last few days I clocked my GTX 650 Ti with reduced OC frequencies (EVGA Precision 4.0.0): +100/+100 MHz without extra voltage (stock: 1.087 V). Runtimes are in line with other users'.

ID: 29171

My current average credit with this card running long tasks exclusively is 75,446.58. Actually, I was looking at the wrong computer's stats. The average credit for the one with the GTX 650 Ti is 150,825.35. Sorry for any confusion.

Operator

ID: 29173

This looks like the right thread for my questions. I confess I don't understand most of the technical aspects posted here, but I think I get the gist.

ID: 29600

> The budget puts me in the GTX 650/660 (perhaps Ti…) range.

I recommend the GTX 660. It takes about 6 hours to complete a Nathan long, which seems to be about the same as (or not much longer than) a GTX 660 Ti. I also just completed a new build, and was able to measure the power into the card as 127 watts while crunching Nathan longs (that includes 10 watts of static power, and accounts for the 91% efficiency of my power supply). The GTX 650 Ti is very efficient too, but it took about 9 or 10 hours on Nathan longs the last time I tried it, a couple of months ago.

While the GTX 660 Ti adds more shaders, that does not necessarily result in correspondingly faster performance. It depends on the complexity of the work; they found that out a long time ago on Folding@home, where the more complex proteins do better on the higher-end cards, but the ordinary ones can often do better on the mid-range cards, since their clock rate is usually higher than that of the more expensive cards, which may more than compensate for fewer shaders.

I like the Asus cards too; they are very well built, but to be safe I would avoid the overclocked ones, though Asus seems to do a better job than most in testing the chips used in their overclocked cards. But that may not be saying much; the main market for all of these cards is gamers, for whom an occasional error goes unnoticed, but such an error can completely ruin a GPUGrid work unit.

ID: 29601

A reference GTX660 has a boost clock of 1084 MHz. My GTX660Ti operates at around 1200 MHz, and the reference GTX660Ti runs at 1058 MHz, so you can't say the GTX660 has faster clocks.
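To weigh clocks against shader count, theoretical FP32 throughput is commonly estimated as CUDA cores × clock × 2 (one fused multiply-add per core per clock). This is a peak figure only, and this thread shows GPUGrid does not scale linearly with it. The sketch uses the clocks quoted in this post and assumes 960 cores for the GTX660 (as stated earlier in the thread) and 1344 for the GTX660Ti (Nvidia's published figure); `peak_fp32_gflops` is a hypothetical helper.

```python
def peak_fp32_gflops(cuda_cores: int, clock_mhz: float) -> float:
    """Theoretical single-precision peak: 2 FLOPs (one FMA) per core per clock."""
    return cuda_cores * clock_mhz * 2 / 1000.0


cards = {
    "GTX660 (ref boost)": (960, 1084),
    "GTX660Ti (ref)": (1344, 1058),
    "GTX660Ti (as run here)": (1344, 1200),
}
for name, (cores, mhz) in cards.items():
    print(f"{name}: {peak_fp32_gflops(cores, mhz):.0f} GFLOPS")
```

On paper the 660Ti at 1200 MHz has about 1.55x the GTX660's peak, more than the real-world gap discussed in this thread, which is exactly the point being debated.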

ID: 29602

This "not using all shaders" is not the best wording if you don't know what it means. As a brief explanation: it's a property of the fundamental chip architecture; every chip/card since "compute capability 2.1" has it, which means the mainstream Fermis and then all Keplers. This move allowed nVidia to increase the number of shaders dramatically compared to older designs, but at the cost of sometimes not being able to use all of them. At GPU-Grid it seems like the "bonus shaders" cannot be used at all... but this matters only when comparing to older cards. The newer ones are way more power efficient, even with this handicap.
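The point above can be turned into a rough rule. On compute capability 2.1 chips (48 cores per SM) and 3.x chips (192 cores per SMX), the warp schedulers can keep only two thirds of the cores busy unless they find independent instructions to dual-issue. If, as suspected here, GPUGrid cannot use those "bonus shaders" at all, the effective count is two thirds of the total. This is a sketch under that worst-case assumption; `usable_shaders` is a hypothetical helper, and the example core counts are Nvidia's published figures.

```python
def usable_shaders(total_shaders: int, compute_capability: tuple[int, int]) -> int:
    """Worst-case shader count if the extra 'superscalar' third is never used."""
    if compute_capability >= (2, 1):
        # CC 2.1 and CC 3.x: without dual issue, two thirds of the cores are fed
        return total_shaders * 2 // 3
    # CC 2.0 and older: the schedulers can keep every shader busy
    return total_shaders


for name, cores, cc in (("GTX580", 512, (2, 0)),
                        ("GTX560Ti", 384, (2, 1)),
                        ("GTX650Ti", 768, (3, 0))):
    print(name, cores, "->", usable_shaders(cores, cc))
```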

ID: 29603

> I would like to see some actual results from your GTX660, to see if it really is completing Nathan Long tasks in 6h. I doubt it because my GTX660Ti takes around 5 1/2h and it's 40% faster by my reckoning:

Here is the first one at 5 hours 50 minutes:

| Task | Computer | Sent | Reported | Status | Run time (s) | CPU time (s) | Credit | Application |
|---|---|---|---|---|---|---|---|---|
| 6811663 | 150803 | 29 Apr 2013 17:21:11 UTC | 30 Apr 2013 0:44:44 UTC | Completed and validated | 21,043.76 | 21,043.76 | 70,800.00 | Long runs (8-12 hours on fastest card) v6.18 (cuda42) |

You can look at the others for a while, though I will be moving this card to a different PC shortly. http://www.gpugrid.net/results.php?hostid=150803

This GTX 660 is running non-overclocked at 1110 MHz boost (993 MHz default), and is currently supported by a full i7-3770. However, when I run it on a single virtual core, the time increases to about 6 hours 15 minutes.

ID: 29607

> I would like to see some actual results from your GTX660, to see if it really is completing Nathan Long tasks in 6h. I doubt it because my GTX660Ti takes around 5 1/2h and it's 40% faster by my reckoning:

That's amazingly fast. My 660Ti does Nathans in about 19,000 s @ 1097 MHz. I would like to see what a 660 can do with a Noelia unit (when they start working again), and whether the more complex task takes a proportionate time increase.

ID: 29608

My GTX670s do the current NATHANs in about 4 hours at 1200 MHz; that's not the same as a 660. When we were doing the NOELIAs, the difference was even greater. I know some will disagree with this, but I believe the 256-bit memory bus makes a difference; I'm not pushing data through a smaller pipe. They do have a much greater advantage in power consumption and the amount of heat they give off, though.

ID: 29611

@Flashhawk: you've also got the significant performance bonus of running Win XP. The other numbers here are from Win 7/8.

ID: 29613

> That's amazingly fast. My 660Ti does Nathans in about 19,000 s @ 1097 MHz.

Good question. I am changing this machine around today, so the link will no longer be good for this card, but I will post later once I get some.

ID: 29616

> I recommend the GTX 660. It takes about 6 hours to complete a Nathan long, which seems to be about the same as (or not much longer than) a GTX 660 Ti. I also just completed a new build, and was able to measure the power into the card as 127 watts while crunching Nathan longs (that includes 10 watts of static power, and accounts for the 91% efficiency of my power supply). The GTX 650 Ti is very efficient too, but it took about 9 or 10 hours on Nathan longs the last time I tried it, a couple of months ago.

I'm running 3 MSI Power Edition GTX 650 Ti cards, and the times on the long-run Nathans range from 8:15 to 8:19. They're all OCed at +110 core and +350 memory (these cards use very fast memory chips) and none of them has failed a WU yet. Temps range from 46°C to 53°C with quite low fan settings.

> I like the Asus cards too; they are very well built, but to be safe I would avoid the overclocked ones, though Asus seems to do a better job than most in testing the chips used in their overclocked cards.

Interesting. For years I have run scores of GPUs (21 running at the moment on various projects) of pretty much all brands, and for me ASUS has had BY FAR the highest failure rate. In fact, ASUS cards are the ONLY ones that have had catastrophic failures; other brands have only had fan failures. Of the many ASUS cards I've had, only 1 is still running; every other ASUS has failed completely, except for 1 that is waiting for a new fan. Personally I have had good luck with XFX cards (among other brands) with their double lifetime warranty: out of many XFX cards I have had 2 fan failures, and both times they shipped me complete new HS/fan assemblies in 2 days. I have also had good luck with MSI, Sapphire, Powercolor and Diamond.

ID: 29618

Many thanks to all who contributed to my question. Quite stimulating!

ID: 29619

> With apologies to Beyond, who is not impressed with ASUS, I've decided to go with the ASUS GTX 660. All the ASUS GPUs I've had have turned in exemplary performance.

No need to apologize; I was just relating my experience with the 7 ASUS cards I've owned, as opposed to the scores of other brands. For me the ASUS cards failed at an astounding rate. Of course, YMMV.

ID: 29621

Jim1348, is that definitely a 960-shader version and not an 1152-shader OEM card?

ID: 29622

40% is really good; I think you're actually using more shaders than I am on my 670s. The highest I've seen on the memory controllers is 32% for a 670 and 34% for a 680.

ID: 29623

My GTX660Ti is made by Gigabyte and has a dual fan. It's a decent model, but fairly standard; most boost up to around 1200 MHz.

ID: 29624

> It just occurred to me that the drop from 32 to 24 ROPs could be the issue for the GTX660Ti, rather than (or as well as) the memory bandwidth; the GTX670 has 32 ROPs, but the GTX660Ti only has 24. The GTX660 also has 24 ROPs, perhaps a better ROP-to-CUDA-core ratio.

I'd be interested in seeing the performance of the 650 Ti Boost if anyone has some figures for GPUGrid. So far I've been adding 650 Ti cards, as they seem to run at about 65% of the speed of the 660 Ti, cost less than half as much and are very low power. The 650 Tis I've been adding have very fast memory chips, and boosting the memory speed makes them significantly faster. That would make me think that more memory bandwidth might also help. PCIe speed (version) has no effect at all in my tests, but that's on the 650 Ti; I can't say for faster cards. I will say, though, that the 650 Ti is about 35-40% faster than the GTX 460 at GPUGrid, yet the 460 is faster at other projects (including OpenCL). As an aside, it's also interesting that the 660 is faster than the 660 Ti at OpenCL Einstein. I wonder why?

ID: 29626

> Jim1348, is that definitely a 960-shader version and not an 1152-shader OEM card?

Yes, 960 shaders with 2 GB; nothing special. http://www.newegg.com/Product/Product.aspx?Item=N82E16814500270

I noticed when I bought it that it had good memory bandwidth, relatively speaking. But another factor is that the GTX 660 runs on a GK106 chip, whereas the GTX 660 Ti uses a GK104, as you noted above. The GK106 is not in general a better chip, but there may be other features of the architecture that favor one chip over the other for a given type of work unit. Nvidia sells them mainly for gaming, with number crunching an afterthought. It could well be that the GK104 runs Noelias better; we won't know until we see.

ID: 29628

Some quick measurements / observations:

ID: 29637

It looks as though you found part of the puzzle. Just for reference, my GTX680s are at 96% GPU utilization and the 670s are at 94%. I'm sure you and sk will figure this out. What about downclocking due to heat? All my rigs are liquid cooled and don't get over 45°C.

ID: 29639

Heat isn't an issue: dual-fan card, open case with additional large case fans, 137W used. The GTX660Ti is presently at 55°C and would rarely reach 60°C.

ID: 29645

Ah, I forgot to comment on the ROP suggestion yesterday. Well, ROP stands for "render output processor". I've never heard of these units being used in GP-GPU at all, which seems logical to me, since the calculation results wouldn't need to be assembled into a framebuffer the size of the screen, and blended/softened via anti-aliasing... which you really wouldn't want to do with accurate calculation results :D

ID: 29666

I've been following this thread, as I recently put together a dedicated folding machine on a tight budget and have hence been looking for the best 'bang per buck per watt'.

ID: 29675

The GTX660Ti has one fewer ROP cluster (of ROPs, L2 cache and memory controller) than a GTX670. So it drops from 32 ROPs, 512KB of L2 cache and quad-channel memory to 24 ROPs, 384KB of L2 cache and triple-channel memory.
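The per-cluster sizes follow from those figures: losing one cluster removes 8 ROPs, 128 KB of L2 and one 64-bit memory channel (quad channel = 256-bit, triple channel = 192-bit). The sketch below derives the back end from the cluster count and estimates memory bandwidth as bus width in bytes × effective data rate. The 6008 MT/s GDDR5 rate is an assumption (the reference memory speed of these cards; factory-overclocked boards differ), and `kepler_backend` and `bandwidth_gbs` are hypothetical helpers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Backend:
    rops: int
    l2_kb: int
    bus_bits: int


def kepler_backend(clusters: int) -> Backend:
    """Each cluster: 8 ROPs, 128 KB of L2 and one 64-bit memory controller."""
    return Backend(rops=8 * clusters, l2_kb=128 * clusters, bus_bits=64 * clusters)


def bandwidth_gbs(bus_bits: int, data_rate_mts: float) -> float:
    """Peak memory bandwidth in GB/s: bytes per transfer x transfers per second."""
    return bus_bits / 8 * data_rate_mts / 1000.0


for name, clusters in (("GTX670", 4), ("GTX660Ti", 3)):
    b = kepler_backend(clusters)
    print(name, b, f"{bandwidth_gbs(b.bus_bits, 6008):.1f} GB/s")
```

With two clusters the same rule gives 16 ROPs, 256 KB of L2 and a 128-bit bus, in line with the 256K L2 figure quoted for the GTX 650 Ti in the next post.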

ID: 29685

> I suspect the performance improvement of the GTX 660 over, say, the GTX 650 Ti is due to the larger cache size (384K vs 256K) rather than bandwidth (the GTX 670/680 incidentally has 512K).

Don't forget the number of shaders, which is the most basic performance metric! Well... the L2 cache coupled to the memory controller and ROP block is something that could make a difference here. Not for games, as things are mostly streamed there and bandwidth is key. But for number crunching, caches are often quite important.

I'm currently using EVGA Precision X to set clock speeds, and it won't let me underclock GPU memory by more than 250 (real) MHz. I suspect I could get memory controller utilization above 41% if I could set lower clocks. But I'm not too keen on installing another one of these utilities :p

MrS
____________
Scanning for our furry friends since Jan 2002

ID: 29693

> I would like to see what a 660 can do with a Noelia unit (when they start working again) and see if the more complex task takes a proportionate time increase.

My first two Noelias are in, and are running 12-13+ hours on my two GTX 660s, each supported by one virtual core of an i7-3770 (not overclocked). The other six cores of the CPU are now running WCG/CEP2.

| Name | Elapsed (CPU time) | Completed | Reported | Use | CPU % | Status |
|---|---|---|---|---|---|---|
| 063ppx25x2-NOELIA_klebe_run-0-3-RND3968_1 | 12:05:29 (05:47:34) | 5/7/2013 5:46:00 AM | 5/7/2013 6:05:05 AM | 0.627C + 1NV | 47.91 | Reported: OK |
| 041px24x3-NOELIA_klebe_run-0-3-RND0769_0 | 13:15:57 (06:17:45) | 5/7/2013 5:09:05 AM | 5/7/2013 5:22:42 AM | 0.627C + 1NV | 47.46 | Reported: OK |

The Nathans now run a little over 6 hours on this setup. http://www.gpugrid.net/results.php?hostid=150900&offset=0&show_names=1&state=0&appid=

Also, I run a GTX 650 Ti, and its first Noelia took 18 hours 14 minutes.
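The CPU % figures for the two Noelia tasks are CPU time divided by elapsed time, i.e. each GPU task kept its CPU thread busy for a bit under half of its run. A minimal sketch that reproduces the 47.91 and 47.46 values, assuming the "elapsed (CPU)" HH:MM:SS layout shown; the helper names are hypothetical.

```python
def hms_to_seconds(hms: str) -> int:
    """Convert an 'HH:MM:SS' string to seconds."""
    h, m, s = (int(part) for part in hms.split(":"))
    return h * 3600 + m * 60 + s


def cpu_percent(elapsed: str, cpu: str) -> float:
    """CPU time as a percentage of wall-clock (elapsed) time."""
    return 100.0 * hms_to_seconds(cpu) / hms_to_seconds(elapsed)


print(f"{cpu_percent('12:05:29', '05:47:34'):.2f}")
print(f"{cpu_percent('13:15:57', '06:17:45'):.2f}")
```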

ID: 29722

As some people suggested, it appears that the GTX660Ti does relatively (slightly) better on Noelia WUs than on Nathan's.

ID: 29724

How does the 650 Ti BOOST perform compared to the 650 Ti and 660?

ID: 29889

Unfortunately I have not seen any stats for the 650 Ti Boost; however, as GPUGrid appears to be mainly shader bound, I would personally go for either a base 650 Ti or the 660. I would expect the Boost to be only marginally quicker than a base 650 Ti. I guess it really depends on your budget, the price differences between the products in your area, the supporting hardware for the card, and whether the machine will be used for purposes other than crunching.

ID: 29891

The 650 Ti Boost is basically a 660 with fewer shaders. While the shaders are historically the most important component, they have been trimmed down significantly from CC2.0 and CC2.1 cards to CC3.0 cards. This means there is more reliance on other components, especially the core, but even the CPU. So the relative importance of the shaders is open to debate. It certainly seems likely that the GPU memory bandwidth, and probably the various cache memories, have more significance with the GeForce 600 GPUs. I think the new apps have exposed this (they generally optimize better for the newer GPUs), making initial performance assessments outdated. There seems to be some performance difference between the WU types, but that would need to be better explored/reported before we could assess its importance when choosing a GPU. Even then, it's down to the researchers to decide what sort of research they will be doing long term. I'm expecting more Noelia-type WUs, as they extend the research boundaries, but that's just my hunch.

ID: 29892

I pulled a GTX660Ti and a GTX470 from a system to test a GTX660 and a GTX650TiBoost:

ID: 29914

Interesting comparison; it would be great to see further results for the various WUs. Out of curiosity, which cards do you have running in there at the moment?

ID: 29923

> TDP: personal experience (as indeed you mentioned) can be a little misleading, as the actual power draw for running a particular app on a particular card really depends on a number of factors, e.g. the number of memory modules, chip quality (binning), not to mention Boost (which I hate), which as you know can ramp the voltage up to 1.175V. I have to limit the power target of my 670 to 72% as it gets toasty.

I would leave in the TDP; it is useful for comparative purposes, even if it varies in absolute terms for the reasons you mentioned. However, I would add a correction for power supply efficiency. For example, a Gold 90+ power supply would probably run at about 91% efficiency at those loads, so the GTX 660 that measures 140 watts at the AC input (as with a Kill-A-Watt) is actually drawing 140 × 0.91 = 127 watts. That is not particularly important if you are just comparing cards run on the same PC, but it could be if you are comparing your card's measurements with someone else's on another PC, or when building a new PC and choosing a power supply.
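The correction described here is a single multiplication: the DC power delivered to the components is the AC power measured at the wall times the PSU efficiency at that load, and the inverse gives the wall draw needed for a given DC load. A minimal sketch, taking the 91% figure from the example above as an assumption (real efficiency varies with load, so read it off your PSU's efficiency curve where you can); the helper names are hypothetical.

```python
def wall_to_dc(ac_watts: float, efficiency: float) -> float:
    """Power delivered by the PSU, given the AC draw measured at the wall."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return ac_watts * efficiency


def dc_to_wall(dc_watts: float, efficiency: float) -> float:
    """Wall draw needed to deliver a given DC load."""
    if not 0.0 < efficiency <= 1.0:
        raise ValueError("efficiency must be in (0, 1]")
    return dc_watts / efficiency


print(round(wall_to_dc(140, 0.91), 1))  # Kill-A-Watt reading corrected to DC
```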

ID: 29925

Yes, upon reflection you're right: TDP is useful for comparative purposes; I was thinking out loud.

ID: 29926

The TDP is for reference, but it's certainly not a simple consideration. You would really need to list all your components when designing a system if TDP (power usage) is a concern. Key would be the PSU; if your PSU is only 80% efficient, its lack of efficiency impacts all the other components. However, if you are buying for a low-end system that needs only one 6-pin PCIe connector, then the purchase cost vs. running cost of such a PSU (and other components) is likely to make it more acceptable. For mid-range systems (with mid-range GPUs) an 85%+ PSU is a reasonable balance. For a high-end system (with more than one high-end GPU) I would only consider a 90+ efficiency PSU (Professional series).

ID: 29929

A very nice bit of information. Thanks!

> To put these mid-range GPU performances into perspective, against the first high-end GPU (running Nathan WUs):

Prices are a bit different in the States, though. Best prices at Newegg, after rebate (AR), shipped:

- 650 Ti: $120 (after $10 rebate) (MSI)
- 650 Ti Boost: $162 (no rebate; the rebate listed at Newegg for this GPU is in error) (MSI)
- 660: $165 (after $15 rebate) (PNY)
- 660 Ti: $263 (after $25 rebate) (Galaxy)

So, for instance, the 650 Ti is only 45.6% of the cost of the 660 Ti here, and the 660 is only 62.7% of the cost of the 660 Ti. Neither the 650 Ti Boost nor the 660 Ti looks too good (on initial purchase) at these prices.

Running costs: I don't think the TDP estimates mean too much for our purposes. Do you have some Kill-A-Watt figures?
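The percentages in this post are plain price ratios against the 660 Ti; dividing a relative speed by that ratio gives throughput per dollar. The sketch uses the prices listed in the post and, as an assumption, the rough "650 Ti runs at about 65% of a 660 Ti" estimate posted earlier in the thread. Prices change constantly, and the helper names are hypothetical.

```python
# Newegg prices after rebate, shipped, as listed in this post (USD)
PRICES_USD = {"650 Ti": 120, "650 Ti Boost": 162, "660": 165, "660 Ti": 263}


def price_ratio(card: str, reference: str = "660 Ti") -> float:
    """Cost of `card` as a fraction of the reference card's cost."""
    return PRICES_USD[card] / PRICES_USD[reference]


def value_vs_reference(relative_speed: float, card: str, reference: str = "660 Ti") -> float:
    """Throughput per dollar relative to the reference card (1.0 = equal value)."""
    return relative_speed / price_ratio(card, reference)


for card in ("650 Ti", "650 Ti Boost", "660"):
    print(f"{card}: {price_ratio(card):.1%} of the 660 Ti price")

# Assumes the 650 Ti runs at ~65% of 660 Ti speed (estimate from earlier in the thread)
print(f"650 Ti value: {value_vs_reference(0.65, '650 Ti'):.2f}x the 660 Ti")
```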

ID: 29930

In the UK the running-cost difference for these mid-range GPUs would only be around £10 a year, so, yeah, no big deal, and only a consideration if you are getting two or three. In a lot of locations it would be less, and in Germany and a few other EU countries it would be more. So it depends on where you are.
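A running-cost estimate like this reduces to extra watts × hours × electricity price. A minimal sketch; the 10 W difference, the 0.12-per-kWh tariff and 24/7 crunching in the example are hypothetical inputs, not figures from the post, so substitute your own card difference and local price. If the wattage is a DC-side figure, passing the PSU efficiency converts it to wall power first.

```python
HOURS_PER_YEAR = 24 * 365


def annual_cost(extra_watts: float, price_per_kwh: float,
                psu_efficiency: float = 1.0, duty_cycle: float = 1.0) -> float:
    """Yearly cost of an extra load; pass psu_efficiency < 1 for DC-side watts."""
    wall_watts = extra_watts / psu_efficiency
    kwh_per_year = wall_watts * HOURS_PER_YEAR * duty_cycle / 1000.0
    return kwh_per_year * price_per_kwh


# Hypothetical: 10 W more, 0.12 per kWh, crunching around the clock
print(round(annual_cost(10, 0.12), 2))
```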

ID: 29936

I posted here at the end of April about my plan to upgrade my PSU and my GTX 460.

ID: 29938

I would suggest a very modest OC, and no more. Antec don't make bad PSUs, especially 600W+ models, but I would still be concerned about pulling too many watts through the PCIe slot on that motherboard. I suggest the first thing you do is complete a WU to get a benchmark, then nudge up the clocks ever so slightly.

I'll try to resist the urge to OC for a while, to complete more runs and get a more accurate performance table.

ID: 29940

That sounds like very good advice!

ID: 29941

Measured Wattages:

ID: 29942

Just for comparison:

ID: 29943

To me it looks like the reported power percentage is calculated relative to the power target rather than the TDP. The former is not reported as widely (though it is in, e.g., AnandTech reviews), but it is generally ~15 W below the TDP. If this is true, we could easily calculate the real power draw as "power target × reported power percentage".

ID: 29944

You have raised a few interesting points:

ID: 29946

> Jim, if a GPU has a TDP of 140W and while running a task is at 95% power, then the GPU is using 133W. To the GPU it's irrelevant how efficient the PSU is; it still uses 133W. However, to the designer this is important. It shouldn't be a concern when buying a GPU, but when buying a system or upgrading it is.

OK, I was measuring it at the AC input, as mentioned in my post. Either method should work to get the card power, though if you measure the AC input you need to know the PSU efficiency, which is usually known these days for the high-efficiency units. (I trust a Kill-A-Watt more than the circuitry on the cards for measuring power, but that is just a personal preference.)
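The two readings of the reported power percentage differ only in the reference wattage: TDP × percentage (the 133 W example above) versus power target × percentage, as suggested a couple of posts earlier. The sketch treats the power target as TDP minus 15 W, which is only the rough rule of thumb from that post, not a published figure; the helper name is hypothetical. Neither result includes PSU losses, so a wall-socket reading will be higher.

```python
def draw_from_percent(reference_watts: float, percent: float) -> float:
    """Card power implied by a monitoring tool's power percentage."""
    return reference_watts * percent / 100.0


TDP_W = 140.0
POWER_TARGET_W = TDP_W - 15.0  # rule-of-thumb assumption, see lead-in

print(draw_from_percent(TDP_W, 95))           # reading relative to TDP
print(draw_from_percent(POWER_TARGET_W, 95))  # reading relative to power target
```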

ID: 29952

My GTX660 really doesn't like being overclocked. Stability was very poor for crunching with even a very low OC. It's definitely not worth a 1.3% speed-up (task return time) if the error rate rises even slightly, and my error rate rose a lot. This might be down to it being a reference GTX660, or to it being used for the display: I hadn't been using the system for a while, and with the GPU barely overclocked, within a minute of my using the system a WU failed, after 6h and with <10 min to go! It's been reset to stock.
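The trade-off can be made concrete. If a failed task wastes its whole run (as here, failing with under 10 minutes to go) and failures are independent, useful throughput is (1 + speedup) × (1 - failure rate), so a 1.3% speed-up is cancelled out once roughly 1.28% of tasks fail. A minimal sketch under those assumptions; the helper names are hypothetical.

```python
def useful_throughput(speedup: float, failure_rate: float) -> float:
    """Relative output vs. stock, assuming every failed task is a total loss."""
    return (1.0 + speedup) * (1.0 - failure_rate)


def break_even_failure_rate(speedup: float) -> float:
    """Failure rate at which an overclock gains nothing."""
    return 1.0 - 1.0 / (1.0 + speedup)


print(f"{break_even_failure_rate(0.013):.2%}")  # failure rate that cancels a 1.3% OC
print(useful_throughput(0.013, 0.05))           # 1.3% faster, but 5% of tasks fail
```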

ID: 29957

> It's looking like a FOC GTX660 is the best mid-range card to invest in.

What's "FOC"?

ID: 29959

Factory Over Clocked.

ID: 29966

> Factory Over Clocked.

Thank you!

ID: 29969

Actually, the higher the GPU utilization, the more a GPU core OC should benefit performance, because every additional clock is doing real work, whereas at lower utilization levels only a fraction of the added clocks will be used.

ID: 29978

Yeah, you're right. I was thinking that you wouldn't be able to OC as much to begin with, but (105% × 99%) - 99% is greater than (105% × 88%) - 88%, i.e. 4.95% > 4.4%.
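The same comparison as a short sketch: the gain from a core overclock is the overclock fraction scaled by GPU utilization, assuming (as the previous post argues) that only the utilized share of the added clocks does useful work. The helper is hypothetical.

```python
def oc_gain(oc_fraction: float, gpu_utilization: float) -> float:
    """Expected speed-up from a core OC when only utilized cycles do work."""
    return oc_fraction * gpu_utilization


for util in (0.99, 0.88):
    print(f"5% OC at {util:.0%} utilization -> {oc_gain(0.05, util):.2%} faster")
```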

ID: 29983

It was just meant as a very rough guide, but it serves to highlight price variation and its effect on performance per purchase price.

ID: 30076

I think it's best to stick with one manufacturer when comparing prices. This also includes reference vs. OC models. Looking at the very lowest prices isn't always great, as I don't want another Zotac (my 560 Ti 448 was very loud and hot). There are also mail-in rebates, but I tend to ignore those when comparing prices.

ID: 30079

Newegg just had the MSI 650 Ti (non-OC) on sale for $84.19 shipped, after rebate. Unfortunately the sale ended yesterday; it only lasted a day or two.

ID: 30081

> The GTX 650 Ti is twice as fast as the GTX 650, and costs about 35% more. It's well worth the extra cost.

Well, it's been a few short months, and it looks like the 650 Ti has had its day at GPUGrid. While it's very efficient, it can no longer (in non-OCed form) make the 24hr cutoff for the crazy long NATHAN_KIDc22 WUs. So I suspect the 650 Ti Boost and the 660 are the next victims to join the DOA list. Just a warning :-( http://www.gpugrid.net/workunit.php?wuid=4490227

ID: 30617

I've completed 4 NATHAN_KID WUs with my stock-clocked 650 Ti, all in ~81K secs (~22.5 hours). I am about to finish my fifth, also expected to take ~22.5 hours.

ID: 30620

> Maybe something just slowed down crunching for this WU?

No, it's a machine that's currently dedicated to crunching. You're running Linux, which is about 15% faster than Win7-64 at GPUGrid according to SKG. That's the difference, and even then you would have to micromanage, and you still don't always make the 24-hour cutoff:

| Sent | Reported | Status | Time (s) | Credit |
|---|---|---|---|---|
| 25 May 2013 7:52:09 UTC | 26 May 2013 9:50:00 UTC | Completed and validated | 81,103.17 | 139,625.00 (out of 167,550.00) |
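The credit figures in this result imply the bonus tiers: 167,550 / 1.5 = 111,700 base credit, and 111,700 × 1.25 = 139,625. That fits a 50% bonus for return within 24 hours and 25% within 48 hours, with the clock running from when the task was sent rather than over the ~22.5 h of actual run time. The sketch assumes exactly those tiers, inferred from these numbers rather than from project documentation; `granted_credit` is a hypothetical helper.

```python
from datetime import datetime, timedelta


def granted_credit(base_credit: float, sent: datetime, reported: datetime) -> float:
    """Credit after the return bonus, judged on sent-to-reported turnaround."""
    turnaround = reported - sent
    if turnaround <= timedelta(hours=24):
        return base_credit * 1.5   # assumed +50% tier
    if turnaround <= timedelta(hours=48):
        return base_credit * 1.25  # assumed +25% tier
    return base_credit


sent = datetime(2013, 5, 25, 7, 52, 9)      # UTC, from the result above
reported = datetime(2013, 5, 26, 9, 50, 0)
print(reported - sent)                       # turnaround, despite ~22.5 h of run time
print(granted_credit(111_700, sent, reported))
```

Under this model, about 2 hours of queueing, upload or reporting delay on top of the run time is enough to drop the task into the lower bonus tier.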

| ID: 30624 | Rating: 0 | rate:

| |

|

I see, yes, maybe it is because of Linux. Maybe you could cut some time with a mild OC? Or maybe you could install Linux? :) | |

| ID: 30629 | Rating: 0 | rate:

| |

|

For a long time the difference between Linux and XP was very small (<3%) and XP was around 11% faster than Vista/W7/W8. However since the new apps have arrived it's not as clear. Some have reported big differences and some have reported small differences. The question is why? | |

| ID: 30632 |

| |

So I suspect the 650 Ti Boost and the 660 are the next victims to join the DOA list. Just a warning :-( That's why I prefer a few large GPUs here over more, smaller ones, as long as the price does not become excessive (GTX680+). However, I also think there's no need to increase the WU sizes too fast. MrS ____________ Scanning for our furry friends since Jan 2002 | |

| ID: 30655 |

| |

|

My GTX 650 Tis are running NATHAN_KIDc22 WUs in under 70K secs with a mild OC of 1033/1350 MHz, on Win XP, mind. Nathan's WUs seem to be very much CPU-bound from what I have seen. Have you tried raising the process priority to 'Normal' and, if you only use one GPU per machine, setting the CPU affinity with something like ImageCFG? | |

| ID: 30659 |

| |

|

Got some PCIE risers to play with :)) | |

| ID: 30881 |

| |

Got some PCIE risers to play with :)) I can't visualize what that looks like, but how about this for an alternative... I recently upgraded to a GTX 660, so my old GTX 460 now sits in its box. Is there an adapter I can mount in a PCI slot, on top of which I mount the 460? I have a 620W PSU and four PCIE power connectors. | |

| ID: 30882 |

| |

|

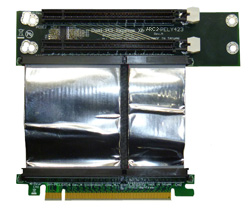

I think this 'dual PCIE splitter/riser' is what you are interested in, | |

| ID: 30883 |

| |

|

My first WU using a riser failed after 7h 55min :( | |

| ID: 30884 |

| |

|

I've spent all day looking at how to add my redundant GTX 460 to my GTX 660 crunching effort. Thanks, skgiven, for the leads! A 1 to 16 slot converter might also interest some. Note that, for Europe (me), the link is: http://www.thedebugstore.com/acatalog/SKU-074-01.html With all these risers you need to understand the power consequences! A PCIe 2.1 x16 slot can deliver up to 75W of power to the GPU. An x1 slot cannot, so all the power would need to be delivered through the PCIe power connector(s). That looks like a possibility for me. Love the plug 'n' play bit. I have an almost-4-year-old Dell XPS 435, whose performance still amazes me! First question: do I have any PCIe x1 slots? I found I have two: Good start! Next, is there room in the case? Amfeltech don't give the dimensions of their card, but I reckon I have 5 cm of height before it becomes a problem and I need to run with the cover off. Finally, do I have power? Previously I posted I had four PCIe power connectors. Not true. I have two, but one of these, for the 460 (it needs two), should be enough: http://tinyurl.com/mkmof9g And... I have 620W of power: Enough? * | |

| ID: 30886 |

| |

I wouldn't do it. There's a reason your PSU didn't come with 4 PCIe connectors. I would get a new motherboard and a new PSU if I were going to run dual GPUs in that setup. You don't want to be at the limits of your hardware when it comes to the power supply. You want some headroom. | |

| ID: 30889 |

| |

Might you be a tad pessimistic? Here's the way I see it. My (new) 620 watts PSU has two PCIe connectors so it can run two GPUs. I need the splitter to run the (legacy) GTX 460 since it requires two feeds. The (new) GTX 660 requires just one. Nvidia's Web site tells me that the minimum system power requirement for each of these GPUs, separately, is 450 watts. That means I have 170 watts spare to run a second GPU. Surely that's enough? | |

| ID: 30892 |

| |

|

Personally, I say go ahead! It will either work, or it won't, you don't have much to lose! | |

| ID: 30896 |

| |

|

Tomba, | |

| ID: 30899 |

| |

Finally, do I have power? Previously I posted I had four PCIe power connectors. Not true. I have two, but one of these, for the 460 (it needs two), should be enough: That splitter won't make any difference to power delivery! It would just split 75W into two, not give you two times 75W. This is what you would need: http://www.amazon.co.uk/Neewer-PCI-E-Splitter-Power-Adapter/dp/B005J8DGTU/ref=pd_sim_computers_10 The PSU should be powerful enough. A GTX460 has a TDP of 150W or 160W depending on the model. Two PCIe 6-pin power connectors can supply 150W, which should be sufficient for crunching here (actual usage is likely to be ~115W on the GPU). I'm not convinced it would work, but there is nothing obvious to prevent it, so I would give it a try, if only to advise others that it doesn't work. Since I started using my riser cable I've had lots of errors. In part these may be due to using the iGPU on my i7-3770K (display is a bit less responsive) and to some CPU tasks; however, I think I was having a power issue: the card on the riser was a GeForce GTX650TiBoost, which only has one 6-pin PCIe power connector. That would have been delivering 75W, but the TDP is 134W for a reference card. The card runs at 1134MHz rather than 1033MHz, so it might have had a higher bespoke TDP/needed more power. It's also on a PCIe 2.0 slot, unlike the other slots. Anyway, there is likely to be some loss of power delivery through the cable, and it might have caused a problem. I've now swapped GPUs around, so that my GTX660Ti (with two PCIe 6-pin power cables supplying 150W) is on the riser. That's actually enough for a reference model on its own, without any power from the PCIe slot. ____________ FAQ's HOW TO: - Opt out of Beta Tests - Ask for Help | |
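The power argument above is simple addition: what the slot supplies plus what each auxiliary connector supplies, compared with the card's TDP. A minimal sketch using the nominal ratings (75 W x16 slot, 75 W per 6-pin as stated above, and 150 W per 8-pin, which is the standard rating rather than a figure from this thread). Treating an unpowered x1 riser as supplying 0 W is a conservative assumption, and the 150 W reference TDP for the GTX660Ti is my figure, not the thread's.

```python
# Nominal PCIe power ratings in watts.
SLOT_X16_W = 75    # full x16 graphics slot
SIX_PIN_W = 75     # each 6-pin auxiliary connector
EIGHT_PIN_W = 150  # each 8-pin auxiliary connector
RISER_SLOT_W = 0   # ASSUMPTION: treat an unpowered x1 riser as supplying nothing


def board_power_budget(slot_w: int, six_pins: int = 0, eight_pins: int = 0) -> int:
    """Nominal power a card can draw from its slot plus auxiliary connectors."""
    return slot_w + six_pins * SIX_PIN_W + eight_pins * EIGHT_PIN_W


def headroom_w(tdp_w: int, slot_w: int, six_pins: int = 0, eight_pins: int = 0) -> int:
    """Positive: budget exceeds TDP; negative: the card may be starved of power."""
    return board_power_budget(slot_w, six_pins, eight_pins) - tdp_w


# Reference TDPs (GTX460 at the upper 160 W figure quoted above).
cases = {
    "GTX650TiBoost on riser, one 6-pin": headroom_w(134, RISER_SLOT_W, six_pins=1),
    "GTX660Ti on riser, two 6-pin": headroom_w(150, RISER_SLOT_W, six_pins=2),
    "GTX460 in x16 slot, two 6-pin": headroom_w(160, SLOT_X16_W, six_pins=2),
}
for name, margin in cases.items():
    print(f"{name}: {margin:+d} W")
```

On these numbers the GTX650TiBoost on the riser comes up 59 W short at full TDP, while the GTX660Ti just breaks even, which fits the observation that swapping them helped.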

| ID: 30900 |

| |

Tomba, Great link! Thank you!! I spent the afternoon there. I started with my Dell XPS 435 as delivered, four years ago, with a GeForce 210: 386 watts for a 475 W PSU. No problem. I then gave the calculator my three-year-old GTX 460: 553 watts vs. a 475 W PSU!! That sure is one power-hungry device. And I ran GPUGrid 24/7, under-watted, for three years! Immediate conclusion? Give the GTX 460 to my grandson for gaming (making sure he has enough watts). And I would still like to stay within the range of my new 610 W PSU (I should have done the sums before I bought it). The bottom of the Nvidia range today is the GTX 650. One takes 429 watts, two take 512. One GTX 660 takes 527 watts, two take 709 watts. Unfortunately the Newegg calculator does not allow for more than one GPU but, given these numbers, I reckon one of each (650 and 660) will be fine for a 610 W PSU. Or am I dreaming? Your thoughts will be most welcome! Thank you, Tom | |

| ID: 30901 |

| |

That splitter won't make any difference to power delivery! It would just split 75W into two, not give you two times 75W. This is what you would need. Many thanks for the heads-up. Ordered... The PSU should be powerful enough. I shall certainly try the GTX 460 in the riser, with that Molex power supply. Tom | |

| ID: 30902 |

| |

|

I think that Newegg calculator is only taking into account Nvidia's PSU recommendations, not the cards' actual power draw. | |

| ID: 30905 |

| |

Just keep an eye (and your nose) on the system when you start crunching, observe its temps* for a few hours, and don't leave it unattended. You'll want to stop it before the motherboard bursts into flames! Thanks for your response. Re "motherboard temperature": I found a Win 7 app that measures the CPU core temps. Is that what you mean, or is it something else? Tom | |

| ID: 30908 |

| |

|

Motherboard temperature is different from CPU temperature. Just found and installed Speccy (http://www.piriform.com/speccy), a Windows tool that will tell you both (and a whole lot more information about your system). They have a free version and a paid version; I used the free one. | |

| ID: 30912 |

| |

Since I started using my riser cable I've had lots of errors. In part these may be due to using the iGPU on my i7-3770K (display is a bit less responsive) and to some CPU tasks; however, I think I was having a power issue: the card on the riser was a GeForce GTX650TiBoost, which only has one 6-pin PCIe power connector. That would have been delivering 75W, but the TDP is 134W for a reference card. The card runs at 1134MHz rather than 1033MHz, so it might have had a higher bespoke TDP/needed more power. It's also on a PCIe 2.0 slot, unlike the other slots. Anyway, there is likely to be some loss of power delivery through the cable, and it might have caused a problem. I've now swapped GPUs around, so that my GTX660Ti (with two PCIe 6-pin power cables supplying 150W) is on the riser. That's actually enough for a reference model on its own, without any power from the PCIe slot. Just an update: since I moved the GTX660Ti onto the PCIe riser (instead of the GTX650TiBoost) I have been getting fewer errors. All cards are returning completed results, and the GTX660Ti, despite being in a PCIe 2.0 slot at x2 (going by GPU-Z), is doing very well. If anything it's the most stable of the cards. On the other hand, the GTX650TiBoost is running at 98% power, 95% GPU usage and 69C (which is surprisingly high for that card and probably causing issues). Relative performances (GTX660Ti, GTX660, GTX650TiBoost):

| GPU | Task | Work unit | Sent | Reported | Run time (s) | CPU time (s) | Credit |
|---|---|---|---|---|---|---|---|
| GTX660Ti | I397-SANTI_baxbim1-2-62-RND5561_0 | 4536452 | 21 Jun 2013 13:03:04 UTC | 21 Jun 2013 17:21:28 UTC | 10,299.97 | 10,245.68 | 20,550.00 |
| GTX660 | I693-SANTI_baxbim1-1-62-RND4523_0 | 4535331 | 21 Jun 2013 5:50:58 UTC | 21 Jun 2013 16:32:07 UTC | 12,587.32 | 12,434.03 | 20,550.00 |
| GTX650TiBoost | I538-SANTI_baxbim1-2-62-RND4314_0 | 4535821 | 21 Jun 2013 9:54:01 UTC | 21 Jun 2013 17:49:17 UTC | 14,005.43 | 13,897.61 | 20,550.00 |

Even on PCIe 2.0 at x2 the GTX660Ti is 36% faster than the GTX650TiBoost and 22% faster than the GTX660. For these WUs, and on this setup (Intel HD Graphics 4000 being used for display), PCIe width is irrelevant. This confirms what Beyond and dskagcommunity reported. Update: 23 valid from the last 26, with all cards completing tasks. Reasonably stable... | |
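The 36% and 22% figures follow from the run times in the table: the faster card's advantage is the slower card's run time divided by its own, minus one. A minimal sketch reproducing them:

```python
# Run times (seconds) of the three SANTI_baxbim1 tasks listed above.
runtimes_s = {
    "GTX660Ti": 10_299.97,
    "GTX660": 12_587.32,
    "GTX650TiBoost": 14_005.43,
}


def percent_faster(fast_s: float, slow_s: float) -> float:
    """Extra throughput of the faster card: slow/fast - 1, in percent."""
    return (slow_s / fast_s - 1.0) * 100.0


reference = runtimes_s["GTX660Ti"]
for gpu in ("GTX660", "GTX650TiBoost"):
    print(f"GTX660Ti vs {gpu}: {percent_faster(reference, runtimes_s[gpu]):.0f}% faster")
```

Measured the other way round, as run time saved (1 - fast/slow), the same tasks give roughly 18% and 26%, so it's worth saying which definition a comparison uses.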

| ID: 30913 |

| |

TL/DR: Don't buy too big PSUs without reason! Find the power draw of your major components, add a safety margin (something like 10%), factor in PSU efficiency (80-90%) and THEN buy the PSU you need. Vagelis is right here, and with the rest of his post. The only points I'd change are these:

1. For power supplies you want way more than 10% safety margin under sustained load. Drive the PSU too hard and it will fail earlier. Also, maximum PSU efficiency is achieved at around 50% load. The historical guideline has been to aim for ~50% load. However, with more efficient PSUs I think it's no problem to aim for 50 - 80% load, as the amount of heat generated inside the PSU (which kills it over time) is significantly reduced by the higher efficiency, and by now we've got 120 mm fans instead of 80 mm ones (it's easier to cool things this way). Modern PSUs also have flatter power response curves, so efficiency doesn't drop much if you exceed 50% load. To summarize: I'd want more than 20% safety margin between the maximum power draw of the system and what the PSU can deliver. Typical power draw under BOINC will be lower than whatever you come up with by considering maximums.

2. PSU efficiency determines how much power the PSU draws from the wall plug. For the PC, a "400 W" unit can always deliver 400 W, independent of its efficiency. At 100% load and 80% efficiency you'd be paying for 500 W from the wall plug, whereas at 90% efficiency you'd be paying for 444 W.

MrS | |
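Both points reduce to a division. A minimal sketch: wall draw is DC load divided by efficiency, and the minimum PSU rating is the worst-case DC load divided by the highest load fraction you're willing to run at. Reading "more than 20% safety margin" as "keep the worst-case load at or below 80% of the rating" (the top of the 50 - 80% band) is my assumption; reading it as "rating = 1.2 × load" would give 480 W instead of 500 W for the example below.

```python
def wall_draw_w(dc_load_w: float, efficiency: float) -> float:
    """AC power drawn from the wall plug to deliver dc_load_w to the PC."""
    return dc_load_w / efficiency


def min_psu_rating_w(max_dc_load_w: float, max_load_fraction: float = 0.80) -> float:
    """Smallest PSU rating that keeps the worst-case DC load at or below
    max_load_fraction of the rating (ASSUMPTION: 0.80 = top of the 50-80% band)."""
    return max_dc_load_w / max_load_fraction


# The "400 W" example above, at 100% load.
for eff in (0.80, 0.90):
    print(f"{eff:.0%} efficient: {wall_draw_w(400, eff):.0f} W from the wall")

print(f"400 W worst-case load -> at least a {min_psu_rating_w(400):.0f} W PSU")
```

Note that the rating check uses the system's DC load, not a vendor's "minimum system power" recommendation, which already includes a generic allowance for the rest of the PC.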

| ID: 30923 |

| |

|

I agree completely, ETA! Very valid points! | |

| ID: 30931 |

| |

|

Since you wrote about PCIe x1->x16 adapters: there are some with Molex power to supply the needed power. But they're not easy to get shipped to Austria in my case :( In Germany they are available from several shops. I also found one for UK people. | |

| ID: 30940 |

| |

Since you wrote about PCIe x1->x16 adapters: there are some with Molex power to supply the needed power. But they're not easy to get shipped to Austria in my case :( In Germany they are available from several shops. I also found one for UK people. I bought one here. | |

| ID: 30941 |

| |

Just found and installed Speccy (http://www.piriform.com/speccy), a Windows tool that will tell you both (and a whole lot more information about your system). They have a free version and a paid version; I used the free one. That's a "Bingo!". Thank you. Right now, before I install the second GPU, the room temperature is 26.5C and Speccy shows my Dell/Intel mobo between 63C and 67C. When I install the second GPU, what is the max mobo temperature I should be looking for to avoid it bursting into flames?? Thanks, Tom | |

| ID: 30942 |

| |

|

That's difficult to say if we don't know what is actually measured here. Mainboard temperatures reported in well-ventilated cases are usually in the range of 35 - 45°C, so >60°C would already be really hot. But if that temperature only applies to the VRM circuitry then you're fine, as these can usually take 80 - 100°C. | |

| ID: 30943 |

| |

Since you wrote about PCIe x1->x16 adapters: there are some with Molex power to supply the needed power. But they're not easy to get shipped to Austria in my case :( In Germany they are available from several shops. I also found one for UK people. That's not a PCIe x1 to x16 adapter ;) ____________ DSKAG Austria Research Team: http://www.research.dskag.at  | |

| ID: 30944 |

| |

Since you wrote about PCIe x1->x16 adapters: there are some with Molex power to supply the needed power. But they're not easy to get shipped to Austria in my case :( In Germany they are available from several shops. I also found one for UK people. Oh dear... This is getting complicated. Please show me one. Thank you. | |

| ID: 30946 |

| |

|

Interesting what I read here. | |

| ID: 30947 |

| |

And 358W with a GTX660 and 7 cores of an i7, plus 1 SSD, 1 Blu-ray, 1 DVD and 2 HDDs. This PSU gives 880W. How much is it drawing when your HDDs and optical drives are in use? You have a lot of headroom with your PSU, but imagine you only had around 600W (at 100% PSU capacity) and two mid-high range GPUs at full load. With HDDs connected I would be concerned about possible damage, meaning backups would need to be made more often. Overclocking also adds to power stability requirements. Many people may be comfortable with 80%+ load, but I like to keep it under that. | |

| ID: 30950 |

| |

|

Modern desktop HDDs draw about 3 W more under load than when idle. Add 1 - 2 W for older ones. Idle power draw is in the range of 5 W, up to 8 W for older ones. At startup they'll briefly need 20 - 25 W, but at this point the GPUs do not yet draw power, so for cruncher PCs this doesn't matter at all ;) | |
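To put those drive figures against a PSU budget, a minimal sketch using the per-drive numbers from this post, taking the upper end of each range for "older" drives (my choice). The next reply puts WD Black drives at 10-11 W under load, so treat these as rough.

```python
# Per-drive figures from the post above (watts); "older" takes the upper end of each range.
IDLE_W = {"modern": 5.0, "older": 8.0}
EXTRA_BUSY_W = {"modern": 3.0, "older": 5.0}  # older: 3 W plus up to 2 W more
SPIN_UP_W = 25.0                              # upper end of the 20-25 W range


def hdd_steady_w(n_drives: int, generation: str = "modern", busy: bool = True) -> float:
    """Steady-state draw of n_drives identical drives, idle or busy."""
    per_drive = IDLE_W[generation] + (EXTRA_BUSY_W[generation] if busy else 0.0)
    return n_drives * per_drive


def hdd_spin_up_w(n_drives: int) -> float:
    """Brief start-up peak if all drives spin up together (before the GPUs load up)."""
    return n_drives * SPIN_UP_W


print(hdd_steady_w(2), hdd_steady_w(2, "older"), hdd_spin_up_w(2))
```

For two drives that's 16 - 26 W while busy and a 50 W peak at start-up, small next to the GPUs' sustained draw.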

| ID: 30954 |

| |

|

Western Digital Black drives pull about 10-11 W at load. I could debate the numbers on optical drives, etc., but it would distract from my main point. The more PSU headroom the better, and going over 80% load may cause stability issues. This is just for caution. If someone is comfortable with pushing their PSU, more power (hah) to them. | |

| ID: 30957 |

| |

Just found and installed Speccy (http://www.piriform.com/speccy), a Windows tool that will tell you both (and a whole lot more information about your system). They have a free version and a paid version; I used the free one. >60C for your motherboard is VERY hot! In the hot Greek summer I'm going through now, with ambient temps ~35C, my temps are:

coretemp-isa-0000 (Adapter: ISA adapter)
- Core 0: +72.0°C (high = +83.0°C, crit = +99.0°C)
- Core 1: +66.0°C (high = +83.0°C, crit = +99.0°C)
- Core 2: +64.0°C (high = +83.0°C, crit = +99.0°C)
- Core 3: +64.0°C (high = +83.0°C, crit = +99.0°C)

atk0110-acpi-0 (Adapter: ACPI interface)
- Vcore Voltage: +1.22 V (min = +0.80 V, max = +1.60 V)
- +3.3V Voltage: +3.38 V (min = +2.97 V, max = +3.63 V)
- +5V Voltage: +5.14 V (min = +4.50 V, max = +5.50 V)
- +12V Voltage: +12.26 V (min = +10.20 V, max = +13.80 V)
- CPU Fan Speed: 1110 RPM (min = 600 RPM)
- Chassis1 Fan Speed: 1520 RPM (min = 600 RPM)
- Chassis2 Fan Speed: 1052 RPM (min = 600 RPM)
- Power Fan Speed: 896 RPM (min = 0 RPM)
- CPU Temperature: +71.0°C (high = +45.0°C, crit = +45.5°C)
- MB Temperature: +38.0°C (high = +45.0°C, crit = +46.0°C)

- Fan Speed: 55 %
- Gpu: 67 C

With a 27C ambient temperature, my temps would be <60C (around 55) for CPU and GPU and about 30C for my motherboard. Most probably, the temperature you're seeing is not your motherboard's. | |
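Dumps like this are easy to check mechanically. A minimal sketch, assuming lm-sensors' usual `label: +NN.N°C (high = +NN.N°C, ...)` text layout (spacing in the sample approximated), that flags readings above the chip's own "high" value. In this dump only "CPU Temperature" is flagged (71 vs 45), which suggests that sensor's thresholds, or its label, shouldn't be taken at face value.

```python
import re

# A few lines from the `sensors` dump above (spacing approximated).
SAMPLE = """\
coretemp-isa-0000
Adapter: ISA adapter
Core 0:       +72.0°C  (high = +83.0°C, crit = +99.0°C)
Core 1:       +66.0°C  (high = +83.0°C, crit = +99.0°C)
CPU Temperature:  +71.0°C  (high = +45.0°C, crit = +45.5°C)
MB Temperature:   +38.0°C  (high = +45.0°C, crit = +46.0°C)
"""

# label: +NN.N°C (high = +NN.N°C, ...)
TEMP_LINE = re.compile(
    r"^(?P<label>[^:]+):\s*\+?(?P<temp>-?\d+(?:\.\d+)?)°C"
    r"\s*\(high = \+?(?P<high>-?\d+(?:\.\d+)?)°C"
)


def parse_temps(text: str) -> dict:
    """Map each sensor label to (reading, 'high' threshold) in °C."""
    readings = {}
    for line in text.splitlines():
        match = TEMP_LINE.match(line.strip())
        if match:
            readings[match["label"].strip()] = (float(match["temp"]), float(match["high"]))
    return readings


def above_high(readings: dict) -> list:
    """Labels whose reading exceeds the chip's own 'high' threshold."""
    return sorted(label for label, (temp, high) in readings.items() if temp > high)


print(above_high(parse_temps(SAMPLE)))
```

Lines without a temperature, such as the chip and adapter names, simply don't match and are skipped.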

| ID: 30962 |

| |

Most probably, the temperature you're seeing is not your motherboard's.  | |

| ID: 30963 |

| |

|

That is hot, Tomba. | |

| ID: 30964 |

| |

Your temperature is too high; you should try to do something about it. If you clean all the fans with canned air, close the case (airflow will then be channelled; Dell does that smartly) and don't start any applications for a while, what is the temperature reading then? Thanks for responding. As a first step I suspended the active GPUGrid WU. Temp is now 40C/41C. Now for the blow bit... | |

| ID: 30965 |

| |

|

First I'd make sure the reading is actually correct. Which sensor measures what can easily be mixed up by any party involved. That the tool calls it "system temperature" doesn't mean anything on its own. Hence my suggestion to feel the air yourself: then you'll know for sure whether you've got a software reading error or you're cooking your hardware. | |

| ID: 30974 |

| |

|

Going by that screen grab, 'System Temperature' means the South Bridge chip, and it's 63°C. It's probably covered by a heatsink. IIRC 63°C is reasonable enough for an early i7. | |

| ID: 30982 |

| |

Now for the blow bit... I usually blow out the case every three months so, apart from a small amount of dust, it was clean enough. All the case-side ventilation holes were clear of fluff and spider webs(!) After reassembling I took temp readings with nothing running - top pic - and with just GPUGrid running - bottom pic:  | |

| ID: 30995 |

| |

|

Your CPU and system temperatures are too high. The CPU temperature I can attribute to the lousy Intel stock cooler; it's an EPIC fail. I HIGHLY recommend, especially for prolonged crunching, a good aftermarket cooler. You can find many decent ones at very reasonable prices that will just blow the Intel cooler out of the water! | |

| ID: 30996 |

| |

|

I know some of you will be cringing, with finger on fire department emergency button, but I had to do it... | |

| ID: 31002 |

| |

|

Did you try this? | |

| ID: 31005 |

| |

|

cc_config - use all GPU's - FAQ - Best configurations for GPUGRID | |

| ID: 31006 |

| |

Did you try this? Where do I save it in Win 7 ?? | |

| ID: 31007 |

| |

|

C:\ProgramData\BOINC (this folder will likely be hidden, but should open if you type it into Explorer) | |
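BOINC's documented cc_config.xml option for crunching on every GPU is `<use_all_gpus>` under `<options>`; presumably that's what the "use all GPU's" FAQ entry sets up, though its text isn't quoted here. A minimal sketch that builds the file with Python's standard XML library. The helper names are hypothetical, writing into C:\ProgramData\BOINC needs admin rights, and an existing cc_config.xml would be overwritten, so merge by hand if you already have one.

```python
import xml.etree.ElementTree as ET
from pathlib import Path


def build_cc_config(use_all_gpus: bool = True) -> ET.Element:
    """<cc_config><options><use_all_gpus>1</use_all_gpus></options></cc_config>"""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    ET.SubElement(options, "use_all_gpus").text = "1" if use_all_gpus else "0"
    return root


def write_cc_config(root: ET.Element, boinc_data_dir: Path) -> Path:
    """Write cc_config.xml into the BOINC data directory (overwrites any existing file)."""
    path = boinc_data_dir / "cc_config.xml"
    ET.ElementTree(root).write(path, encoding="utf-8")
    return path


if __name__ == "__main__":
    print(ET.tostring(build_cc_config(), encoding="unicode"))
    # On Windows 7, as above:
    # write_cc_config(build_cc_config(), Path(r"C:\ProgramData\BOINC"))
```

After saving, restart the BOINC client; I'm assuming GPU detection settings like this one are only picked up at start-up.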

| ID: 31008 |

| |

C:\ProgramData\BOINC (this folder will likely be hidden, but should open if you type it into Explorer) Wow! It's all happening!! Thank you! First, I got two POEMs, and the GPUGrid WU went to sleep. Then one of the POEMs died and a GPUGrid WU downloaded. Here we are now, which I guess is what I was aiming for! Many thanks, Tom  Temps are here:  | |

| ID: 31012 |

| |

|

One interesting observation: | |

| ID: 31013 |

| |

|

You can't run more than 1 nVidia for POEM (and all other OpenCL projects, I think). To keep running some POEMs you'd need to exclude one GPU from this project, or mix AMDs and nVidias in one machine. | |
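Excluding one GPU from a single project is what BOINC's `<exclude_gpu>` option in cc_config.xml is for: a `<url>` (the project's master URL), a `<device_num>` (BOINC numbers GPUs from 0, so 1 is the second card) and an optional `<type>`. A minimal sketch that builds such a config alongside `<use_all_gpus>`. The helper names are hypothetical, and the POEM URL is a placeholder: copy the real one exactly as BOINC Manager shows it.

```python
import xml.etree.ElementTree as ET
from typing import Optional


def exclude_gpu(project_url: str, device_num: int, gpu_type: Optional[str] = None) -> ET.Element:
    """One <exclude_gpu> entry: keep project_url off GPU number device_num."""
    entry = ET.Element("exclude_gpu")
    ET.SubElement(entry, "url").text = project_url
    ET.SubElement(entry, "device_num").text = str(device_num)
    if gpu_type is not None:  # optional, e.g. "NVIDIA"
        ET.SubElement(entry, "type").text = gpu_type
    return entry


def cc_config_with_exclusion(project_url: str, device_num: int) -> ET.Element:
    """use_all_gpus plus a single exclusion, both under <options>."""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    ET.SubElement(options, "use_all_gpus").text = "1"
    options.append(exclude_gpu(project_url, device_num))
    return root


# HYPOTHETICAL placeholder URL; substitute POEM's actual master URL.
POEM_URL = "http://poem.example.invalid/"
print(ET.tostring(cc_config_with_exclusion(POEM_URL, 1), encoding="unicode"))
```

With GPU 1 excluded, POEM work should only be scheduled on the first card, while GPUGrid can still use both.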

| ID: 31015 |

| |

You can't run more than 1 nVidia for POEM (and all other OpenCL projects, I think). To keep running some POEMs you'd need to exclude one GPU from this project, or mix AMDs and nVidias in one machine. I wonder why I got two... Edit: yes, the CPU usage again. For Keplers a whole thread is used, for older cards much less. That would explain it. Thank you! | |

| ID: 31016 |

| |

You can't run more than 1 nVidia for POEM (and all other OpenCL projects, I think). To keep running some POEMs you'd need to exclude one GPU from this project, or mix AMDs and nVidias in one machine. Random chance. Not sure you understood correctly: any POEM WU assigned to your 2nd GPU will fail in the stock config. MrS | |

| ID: 31024 |

| |

I know some of you will be cringing, with finger on fire department emergency button, but I had to do it... Well - if you were cringing you were right! Too many times the CPU temp went into the red zone, so I removed the riser, and its GTX 460. I'm now back to just the GTX 660, and all is well. Question: was my problem the lack of power (610W for the two GPUs), or was it insufficient cooling? | |

| ID: 31070 |

| |

|

If the CPU becomes too hot, that's insufficient case cooling, assuming the CPU fan already works as hard as it can. Overheating is caused by too much power being burned, so the PSU did its job just fine ;) | |

| ID: 31078 |

| |
