Message boards : Number crunching : Limit on GPUs per system?

| Author | Message |

|---|---|

|

Is there a limit on how many GPUs can be run from one system? | |

| ID: 28468 | Rating: 0 | rate:

| |

|

Good timing as I've been wondering about similar things. | |

| ID: 28469 | Rating: 0 | rate:

| |

|

I believe Nvidia had an 8 card limit in GeForce drivers, although this may have been removed from later drivers. I have run an expansion chassis with 4 x GTS450SP and another two in the machine itself without any issues. I believe BOINC also had a limit (8 or 16) and we asked for it to be changed, some time back now. Not sure if it's actually been done. | |

| ID: 28470 | Rating: 0 | rate:

| |

|

Found an expansion chassis. Now I see. Hmmm, bottleneck in the cables and it looks to me like the slots will run at x8 if all occupied. | |

| ID: 28473 | Rating: 0 | rate:

| |

|

I was originally considering something like the Dell C410x chassis but it's complete overkill. | |

| ID: 28474 | Rating: 0 | rate:

| |

|

I've run Tyan FT48-B7025 units with 16 Tesla C1070s, and also seen them configured with 8x K10s. It's not cost-effective, in terms of $/GPU, because the more exotic host system has a significant price premium. | |

| ID: 28475 | Rating: 0 | rate:

| |

|

Aye, they're pricey and I don't like the bottleneck inherent in the cable. The PSU in the one I linked to didn't have enough capacity for the GPUs I am considering.

6 x PCIe 2.0 x16 (triple x16 or six x8)

To me that means I can put 4 cards in the 4 blue slots and they'll all run at x16, is that right? I would much rather have Gen. 3 PCI-E even at the additional expense, for future considerations, but the word is GPUgrid tasks don't need that much bandwidth and do just fine on Gen. 2, so maybe I would compromise on that point.

I am considering starting out with this mobo and one card, then adding, say, one more card per month/paycheque. For CPU, probably a low cost Xeon like this one with 8MB L2 cache. I like big cache for multitasking.

For power supply.... 4 x GTX690 needs at least 2800 watts, so I am seriously considering 2 of these 1500 watt EVGA SuperNOVA NEX1500 gold certified models. They have a 10 year warranty. One review says this model can be configured via onboard DIP switches to run when not connected to a mobo. So the plan would be to buy the mobo + CPU + 1 GTX690 + 1 PSU for now; the PSU has enough capacity for a second GTX690, which I'll purchase later. On purchase of the third 690 (or perhaps one of the rumored Titans) I would buy another of the same PSU and configure it to run without the mobo connection. It will also power the fourth GTX690/Titan/whatever.

I haven't looked hard, but the only 2800 watt PSUs I can find are these 2 models by Cisco, and at $1675 they're too rich for me. Two of the 1500 watt models mentioned above are only $920 and the pair deliver 200 watts more. Buying 2 x 1500 watt PSUs has the advantage of being modular. If one fails I will still have the CPU and 2 GPUs running. There is also the advantage of splitting the cost over 2 payments. And I can't find a 3,000 watt PSU anywhere!

Another PSU option is to have 1 regular PSU to power the mobo and 1 card, plus another that puts out only the +12 V required for the other cards. A high-wattage +12 V-only PSU is less complicated than a normal PSU, so it's gotta be cheaper. Also, it would probably be very simple and cheap to build one.

____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 28477 | Rating: 0 | rate:

| |
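The PSU arithmetic in the post above can be checked with a short sketch. All figures are the poster's own (the 2800 W estimate for 4 x GTX690, $920 for the pair of 1500 W units, $1675 for the 2800 W unit); none of them are measured values, and the class and function names are purely illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PsuOption:
    """One way of buying power supply capacity (hypothetical helper type)."""
    name: str
    watts_each: int
    count: int
    total_price: float  # price for all units together, in dollars

    @property
    def total_watts(self) -> int:
        return self.watts_each * self.count

    @property
    def price_per_watt(self) -> float:
        return self.total_price / self.total_watts


# The post's estimate for 4 x GTX690 plus host, not a measured figure.
REQUIRED_WATTS = 2800

pair = PsuOption("2 x 1500 W", watts_each=1500, count=2, total_price=920.0)
single = PsuOption("1 x 2800 W", watts_each=2800, count=1, total_price=1675.0)


def headroom(option: PsuOption, required: int = REQUIRED_WATTS) -> int:
    """Watts left over after meeting the required load."""
    return option.total_watts - required


if __name__ == "__main__":
    for opt in (pair, single):
        print(f"{opt.name}: {opt.total_watts} W, "
              f"headroom {headroom(opt)} W, "
              f"${opt.price_per_watt:.2f}/W")
```

On the poster's numbers the pair gives 200 W of headroom at roughly half the cost per watt, which matches the reasoning in the post. Whether 2800 W is the right target is a separate question that this sketch does not answer.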

Thanks, MJH! I'm interested mainly in that motherboard. Newegg.ca stocks them and here it is for $377, $90 cheaper than the mobo I mentioned in my previous post. The thing about it that gives me concern is the PCI-E specs. It's PCI-E Gen 3 but the slots run at x8 if they're all occupied. The mobo I mentioned in my previous post has 4 slots that run at x16 even when all occupied but they're only Gen 2 not Gen 3. Which mobo would give the best performance on GPUgrid tasks? My hunch is x16 is more important than Gen 3 but I really don't know for sure.

The best case is no case, IMHO, and I won't waste money on another one. A cardboard box is good enough for me. ____________ BOINC <<--- credit whores, pedants, alien hunters | |

| ID: 28478 | Rating: 0 | rate:

| |

|

With Windows it is possible to have "only" 4 GPUs, for example 4xGTX680 or 2xGTX690 (dual GPU). | |

| ID: 28479 | Rating: 0 | rate:

| |

|

PCIe 3.0 has double the bandwidth of 2.0. So x16/2.0 == x8/3.0. So no difference in bandwidth. | |

| ID: 28480 | Rating: 0 | rate:

| |
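The x16/2.0 versus x8/3.0 comparison can be worked through numerically. This is a minimal sketch using the published per-lane signalling rates and line encodings (5 GT/s with 8b/10b for Gen 2, 8 GT/s with 128b/130b for Gen 3). It gives theoretical peak bandwidth per direction and ignores protocol overhead, so real throughput will be lower. The function name is illustrative.

```python
# Per-lane signalling rate in GT/s and line-encoding efficiency per PCIe generation.
PCIE_GEN = {
    2: (5.0, 8 / 10),      # 8b/10b encoding
    3: (8.0, 128 / 130),   # 128b/130b encoding
}


def link_bandwidth_gb_s(gen: int, lanes: int) -> float:
    """Theoretical peak bandwidth of a link, in GB/s per direction."""
    rate_gt_s, efficiency = PCIE_GEN[gen]
    # GT/s * efficiency = usable Gbit/s per lane; divide by 8 for bytes.
    return rate_gt_s * efficiency * lanes / 8


if __name__ == "__main__":
    for gen, lanes in ((2, 16), (3, 8), (3, 16)):
        print(f"Gen {gen} x{lanes}: {link_bandwidth_gb_s(gen, lanes):.2f} GB/s")
```

Gen 2 x16 comes out at 8.0 GB/s and Gen 3 x8 at just under 7.9 GB/s, so the two are equal for practical purposes, as the post says.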

> I'm interested mainly in that motherboard. Newegg.ca stocks them and here it is for $377, $90 cheaper than the mobo I mentioned in my previous post. The thing about it that gives me concern is the PCI-E specs. It's PCI-E Gen 3 but the slots run at x8 if they're all occupied. The mobo I mentioned in my previous post has 4 slots that run at x16 even when all occupied but they're only Gen 2 not Gen 3. Which mobo would give the best performance on GPUgrid tasks? My hunch is x16 is more important than Gen 3 but I really don't know for sure.

Dagorath - For GPUGRID tasks, PCIe speed isn't tremendously important, except in the occasional experiment where we add customisations. In that case, having a fast CPU is just as important, if not more so. Also, for best performance, run Linux. For GPUGRID tasks alone, check out the Asus P8Z77-WS board. It'll give the same performance at a lower price point. Similarly, 16GB is overkill - 1GB per GPU is adequate.

MJH | |

| ID: 28481 | Rating: 0 | rate:

| |

|

Thanks for all the info guys, Gattorantolo, werdwerdus, MJH. It helps us GPU newbies a lot. | |

| ID: 28484 | Rating: 0 | rate:

| |

> With Windows it is possible to have "only" 4 GPUs, for example 4xGTX680 or 2xGTX690 (dual GPU).

This seems the best answer to the OP's question, BUT is there a limit to the number of GPUs per machine on GPUGrid? I ask because I can't get a third GTX 690 to be recognized correctly. Looks like it will be going into another system, because it is just wasting power sitting in a slot doing sweet FA. I'm willing to provide all the information required if someone is willing to help me solve this issue, if it is solvable. Thanks | |

| ID: 31734 | Rating: 0 | rate:

| |
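One way to check how many GPUs the driver actually sees is to count the lines printed by `nvidia-smi -L`. This is a minimal sketch, assuming `nvidia-smi` is installed and on the PATH and that its listing uses the usual `GPU <index>: <name> (UUID: <id>)` line format, which may differ between driver versions. The function names are illustrative. A dual-GPU card such as the GTX 690 should appear as two entries.

```python
import re
import subprocess

# Assumed line format of `nvidia-smi -L`: "GPU 0: <name> (UUID: <id>)".
_GPU_LINE = re.compile(r"^GPU (\d+): (.+?) \(UUID: ([^)]+)\)\s*$")


def parse_gpu_list(text: str) -> list[tuple[int, str, str]]:
    """Extract (index, name, uuid) tuples from `nvidia-smi -L` output."""
    gpus = []
    for line in text.splitlines():
        match = _GPU_LINE.match(line.strip())
        if match:
            gpus.append((int(match.group(1)), match.group(2), match.group(3)))
    return gpus


def list_gpus() -> list[tuple[int, str, str]]:
    """Run `nvidia-smi -L` and parse its output (requires the NVIDIA driver)."""
    result = subprocess.run(
        ["nvidia-smi", "-L"], capture_output=True, text=True, check=True
    )
    return parse_gpu_list(result.stdout)


def missing_gpu_count(detected: list, expected: int) -> int:
    """How many GPUs are expected but were not reported by the driver."""
    return max(expected - len(detected), 0)


if __name__ == "__main__":
    found = list_gpus()
    for index, name, uuid in found:
        print(index, name, uuid)
    # Three GTX 690 cards would be expected to show up as 6 GPUs.
    print("missing:", missing_gpu_count(found, expected=6))
```

If the count is lower than expected, the card is not being enumerated by the driver at all, which points to a driver, BIOS or slot problem rather than to anything in BOINC or GPUGRID.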

|

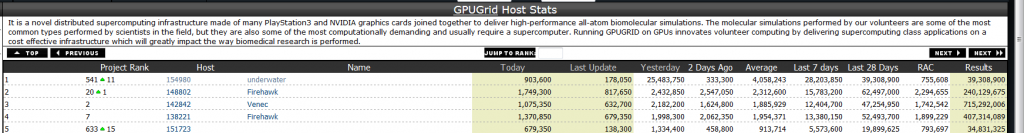

Have you ever checked out the top host list? | |

| ID: 31736 | Rating: 0 | rate:

| |

|

Cheers - yep running xml files. | |

| ID: 31737 | Rating: 0 | rate:

| |

|

What you're going to want to do is investigate "PCIe lanes." | |

| ID: 31746 | Rating: 0 | rate:

| |

> You can get 7 discrete GPU chips (e.g. three 690s and a 680) to work under Windows 7 provided that your CPU, that's with a "C" like CAT, has the "connections" to support that many PCIe "lanes."

Do you think Windows Server 2008 HPC would support more than 7 if the CPU supports it? | |

| ID: 31747 | Rating: 0 | rate:

| |

> You can get 7 discrete GPU chips (e.g. three 690s and a 680) to work under Windows 7 provided that your CPU, that's with a "C" like CAT, has the "connections" to support that many PCIe "lanes."

I really did tell everything I know and I'm not even sure that information is exhaustively correct. It seems like I've seen more than 7 cards running on a system, so someone has figured out something; but I also know that someone else was trying to write a custom BIOS to get 4 GTX 690s to run on a consumer board and found out that it wasn't possible due to the CPU hardware limitations. | |

| ID: 31751 | Rating: 0 | rate:

| |

|

I can see that you've solved this problem. | |

| ID: 31752 | Rating: 0 | rate:

| |

> I can see that you've solved this problem.

It was a driver issue. I uninstalled everything Nvidia, then ran Driver Sweeper. After I rebooted and installed the latest Nvidia driver suite, everything was recognized and worked in harmony. I also updated the SR-2 to the new BIOS recently released (thanks EVGA), but that did not make any difference to the problem I had. Running 2 x Xeon 5650 CPUs.

This score from yesterday just can't be correct, can it? 25,483,750 from this one host. I have no idea what the glitch was, but today seems more normal. | |

| ID: 31753 | Rating: 0 | rate:

| |

|

that happens when you merge computers on the GPUGrid site | |

| ID: 31764 | Rating: 0 | rate:

| |

> that happens when you merge computers on the GPUGrid site

After I got the 3rd card working, that is exactly what I did. Thanks | |

| ID: 31769 | Rating: 0 | rate:

| |

|

I've been semi-corrected elsewhere, so just wanted to correct the information I gave here in an effort not to confuse anyone who might read this thread. | |

| ID: 31906 | Rating: 0 | rate:

| |

> I've been semi-corrected elsewhere, so just wanted to correct the information I gave here in an effort not to confuse anyone who might read this thread.

Actually, the reason 4x690 does not work has nothing to do with the CPU or motherboard. It is related to Windows itself, and the limitation only applies because of the way the 690 works. 3x690+590 actually works (as does any other combination of 4 cards), so you could run up to 8 GPUs on a single host as a theoretical maximum. But of course you need the PSU, a way to cool it, a fast CPU and a top-of-the-class motherboard to feed this monster. Probably (I don't know anyone who has tried) 4x690 could work on Linux or another OS. Information about this is very limited and hard to find, and yes, even with the help of a new BIOS from NVidia the 4x690 setup does not work. One warning: the mate who tried had to send his 690 back to NVidia... for repairs... | |

| ID: 32637 | Rating: 0 | rate:

| |

> We build machines with the following spec:

Question: How do you (or anyone) get the bottom card to fit? I have several different models of these "4x dual wide GPU boards", and they all have the same flaw: they put a lot of headers under the space of the 4th double-wide GPU, so you can't push the GPU down into the PCIe slot without crushing the cables plugged into those headers.

____________ Reno, NV Team: SETI.USA | |

| ID: 32865 | Rating: 0 | rate:

| |

> Question: How do you (or anyone) get the bottom card to fit?

We only connect power and LED switches. The flying leads are bent sharply but do fit. See: https://www.youtube.com/watch?v=0FIRG6H0sSI

MJH | |

| ID: 32867 | Rating: 0 | rate:

| |

> We build machines with the following spec:

FWIW maybe one could buy a couple of these and experiment with removing the cables and cutting down the plastic and pins so they don't stick up so high. Just a thought. | |

| ID: 32868 | Rating: 0 | rate:

| |

|

Ah. That explains it. Thanks! | |

| ID: 32873 | Rating: 0 | rate:

| |
