Message boards : Graphics cards (GPUs) : General buying advice for new GPU needed!

| Author | Message |

|---|---|

|

Currently I am facing a few questions that I don't know the answers to, as I am quite new to the GPU market. With NVIDIA's next generation of cards, the RTX 3000 series, arriving, I felt it was time to consider an "upgrade" myself. At the moment I am running a pretty old rig, an HP workstation with an older Xeon processor and a GTX 750 Ti OC Edition. So far I am pretty happy with it, but I would like to get a more efficient card with a Turing chip, which seems to me a great balance between performance, efficiency and price. As I am currently constrained by the available x16 PCIe slots on my mobo, as well as by cooling/airflow issues and a power supply bottleneck (475 W @ 80% efficiency), I only want to consider cards with ~100 W TDP that cost <250€. Thus, I wouldn't be able to run a dual-GPU rig, but would rather have to replace the old card with the new one. Having read up on the forum, I see that a GTX 1650 seems to run rather efficiently. I really can't afford any RTX 2000 or 3000 series card. With a GTX 1650 Super sitting at ~160€ right now, I am definitely considering upgrading now. Thanks for any input! | |

| ID: 55305 | Rating: 0 | rate:

| |

|

Have a look at the GPUFlops Theory vs Reality chart. The GTX 1660Ti is top of the chart for efficiency. | |

| ID: 55306 | Rating: 0 | rate:

| |

|

Well, thanks first of all for your timely answer! I appreciate the pointer to the efficiency comparison table very much. Impressive how detailed it is. Kind of confirms what I already suspected as it really seems that the GTX 1660 / Ti would be more costly initially but well worth the investment even though all Turing based cards score pretty well. That also is very similar to the data ServicEnginIC shared with me in another recent post of mine. | |

| ID: 55307 | Rating: 0 | rate:

| |

A GTX 1660 Ti would require an 8 pin connector but my PSU only offers a single 6 pin. What about some spare Molex connectors from the power supply? A lot of cards include Molex to PCIe power adapters with the card. I'm sure there are adapters to go from Molex to 8-pin available online. Might be a solution. But a new build solves a lot of compatibility issues since you are spec'ing out everything from scratch. I like my Ryzen CPUs as they provide enough CPU threads to crunch CPU tasks for a lot of projects and still provide enough CPU support for GPUGrid tasks on multiple GPUs. | |

| ID: 55308 | Rating: 0 | rate:

| |

|

Thanks for this idea. I'll investigate this possibility further while I actually prefer to wait now to plan the new rig first. Do you know how much W a molex connector supplies? Have read different values online so far... | |

| ID: 55311 | Rating: 0 | rate:

| |

Interesting to see the GTX 750 Ti still making it to the top of the list performance-wise :) I am running GTX750Ti in two of my old and small machines. And I don't want to miss these cards :-) They have been doing a perfect job so far, for many years. | |

| ID: 55312 | Rating: 0 | rate:

| |

|

Keith is correct that adaptors are available. The power to the 1660Ti is what's also known as a 6+2 pin VGA power plug. That might help with your search. We're also assuming that your PSU is at least 450W. | |

| ID: 55313 | Rating: 0 | rate:

| |

|

Something to think about, two GTX1660 Supers on a machine can potentially keep pace with a GTX2080. | |

| ID: 55314 | Rating: 0 | rate:

| |

|

Oops, I mistakenly called the RTX GPUs GTX. My bad.🙄 | |

| ID: 55315 | Rating: 0 | rate:

| |

Interesting to see the GTX 750 Ti still making it to the top of the list performance-wise :) I built this system from recovered parts to put a repaired GTX 750 Ti graphics card back to work. It is currently scoring a 50K+ RAC at GPUGrid. A curious side anecdote: I also attached this system to PrimeGrid to keep its CPU working. In the end, it served as the double-checker system in the discovery of the prime number 885*2^2886389+1. Being 868,893 digits long, it entered the T5K list of largest known primes at position 971 ;-) | |
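
The digit count quoted above can be checked without ever building the huge number. This is only a small sketch; the function name is made up for illustration, and it relies on the usual rule that a positive integer x has floor(log10(x)) + 1 decimal digits.

```python
import math


def digits_of_k_times_2_pow_n_plus_1(k: int, n: int) -> int:
    """Decimal digit count of k * 2**n + 1 (for n >= 1), computed with logarithms."""
    # k * 2**n is even, so it can never be 99...9; adding 1 therefore
    # never pushes the number into the next power of ten.
    return math.floor(math.log10(k) + n * math.log10(2)) + 1


print(digits_of_k_times_2_pow_n_plus_1(885, 2886389))  # 868893
```

Going through logarithms also sidesteps the default limit that recent Python versions place on converting very large integers to strings.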

| ID: 55316 | Rating: 0 | rate:

| |

Thanks for this idea. I'll investigate this possibility further while I actually prefer to wait now to plan the new rig first. Do you know how much W a molex connector supplies? Have read different values online so far... The standard Molex connector can supply 11 A on the 12 V pin, or 132 W. A quick perusal of Amazon and Newegg.com showed a Molex to 8-pin PCIe adapter for $8. | |
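
The 132 W figure is simply current times voltage. A tiny sketch of that arithmetic, using the 11 A and 12 V values quoted in the post (the function name is hypothetical, and real adapters and PSU rails may be rated lower):

```python
def connector_power_watts(current_amps: float, voltage_volts: float = 12.0) -> float:
    """Electrical power P = I * V available from one supply pin."""
    return current_amps * voltage_volts


# 11 A on the 12 V pin, as quoted in the post above
print(connector_power_watts(11.0))  # 132.0
```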

| ID: 55319 | Rating: 0 | rate:

| |

|

Thank you all for your comments. Definitely seems to me that all Turing chip based cards seem rather efficient and a good bang for your buck :) | |

| ID: 55320 | Rating: 0 | rate:

| |

... I'll first run with a dual-GPU system with my GTX 750Ti and one of the aforementioned cards. I am not sure whether you can mix different GPU types (Pascal, Turing) in the same machine. One of the specialists here might give more information on this. | |

| ID: 55325 | Rating: 0 | rate:

| |

... I'll first run with a dual-GPU system with my GTX 750Ti and one of the aforementioned cards. Yes, the cards can be mixed. The only issue is that on a PC restart (or BOINC service restart) the GPUGrid tasks must attach to the same GPU they were started on, or both tasks will fail immediately. Refer to this post by Retvari Zoltan for more information on this issue and remedial action: http://www.gpugrid.net/forum_thread.php?id=5023&nowrap=true#53173 Also, refer to the ACEMD3 FAQ: http://www.gpugrid.net/forum_thread.php?id=5002 | |

| ID: 55326 | Rating: 0 | rate:

| |

... I'll first run with a dual-GPU system with my GTX 750Ti and one of the aforementioned cards. Yes, you can run two different GPUs in the same computer - my host 43404 has both a GTX 1660 SUPER and a GTX 750Ti, and they both crunch for this project. Three points to note: i) The server shows 2x GTX 1660 SUPER - that's a reporting restriction, and not true. ii) You have to set a configuration flag 'use_all_gpus' in the configuration file cc_config.xml - see the User manual. Otherwise, only the 'better' GPU is used. iii) This project - unusually, if not uniquely - can't start a task on one model of GPU and finish it on a different model of GPU. You need to take great care when stopping and re-starting BOINC, to make sure the tasks restart on their previous GPUs. | |
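
For point ii), a minimal sketch of what the cc_config.xml content could look like, generated here with Python's standard library. The helper name is made up for illustration; the file belongs in the BOINC data directory, and the client has to re-read its config files (or be restarted) before the flag takes effect. Check the BOINC user manual for your version before relying on it.

```python
import xml.etree.ElementTree as ET


def build_cc_config(use_all_gpus: bool = True) -> str:
    """Return a minimal cc_config.xml body that sets the use_all_gpus option."""
    root = ET.Element("cc_config")
    options = ET.SubElement(root, "options")
    flag = ET.SubElement(options, "use_all_gpus")
    flag.text = "1" if use_all_gpus else "0"
    return ET.tostring(root, encoding="unicode")


print(build_cc_config())
# <cc_config><options><use_all_gpus>1</use_all_gpus></options></cc_config>
```

If a cc_config.xml already exists, it is safer to add the `use_all_gpus` element to its `options` section by hand than to overwrite the whole file.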

| ID: 55327 | Rating: 0 | rate:

| |

Then if budget, and running cost allow, I consider upgrading further. Keep in mind that electricity bills here in Germany often tend to be threefold what other users are used to, and that can definitely influence hardware decisions when running a rig 24/7. An OC GTX 1660 Ti with 120 W, keeping in mind the degree of efficiency of most PSUs, might easily pull 140-150 W from the wall, and with 24/7 operation that comes to 24 h * 150 W = 3.6 kWh, which at the current rate of 0.33€ per kWh is about 1.19€ per day for a single GPU alone. So efficiency is on my mind, as the CPU and other peripherals also pull significant wattage.... Still wouldn't like to have the power bill of some of the users here with a couple RTX 2xxx or 3xxx soon :) If running costs are an important factor, then consider the observations made in this post: https://gpugrid.net/forum_thread.php?id=5113&nowrap=true#54573 | |
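
The running-cost estimate in the quote can be reproduced with a few lines. This is a rough sketch only: the function names are hypothetical, the 80% PSU efficiency is an assumption borrowed from the first post of the thread, and the 0.33 €/kWh rate is the one quoted above.

```python
def wall_power_watts(card_watts: float, psu_efficiency: float) -> float:
    """Power drawn at the wall for a given DC load and PSU efficiency (0..1)."""
    return card_watts / psu_efficiency


def daily_cost_eur(wall_watts: float, price_eur_per_kwh: float, hours: float = 24.0) -> float:
    """Energy cost of running a constant load for the given number of hours."""
    energy_kwh = wall_watts * hours / 1000.0
    return energy_kwh * price_eur_per_kwh


wall = wall_power_watts(120.0, 0.80)          # about 150 W at the wall
print(round(daily_cost_eur(wall, 0.33), 2))   # 1.19 (EUR per day)
print(round(daily_cost_eur(wall, 0.33) * 365))  # 434 (EUR per year, 24/7)
```

A card rarely sits at its rated power all day, so this is closer to an upper bound than a prediction.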

| ID: 55328 | Rating: 0 | rate:

| |

|

Whatever the decision be, I'd recommend to purchase a well refrigerated graphics card. | |

| ID: 55329 | Rating: 0 | rate:

| |

Whatever the decision be, I'd recommend to purchase a well refrigerated graphics card. +1 on attention to cooling ability (The GTX 1650 Super when power limited to 70W (minimum power), ADRIA Tasks execution Time: 20800 seconds) EDIT: GERARD has just released some work units that will take a GTX 750 Ti over 1 day to complete. A good time to retire the venerable GTX 750 Ti. | |

| ID: 55333 | Rating: 0 | rate:

| |

|

Thank you all! That thread turned out to be a treasure trove of information. I will keep referring to it in the future as it is almost like a timelier version of the FAQs. | |

| ID: 55335 | Rating: 0 | rate:

| |

|

bozz4science wrote: At the moment my GTX 750 Ti with the earlier mentioned OC setting sitting at 1365 core clock, is currently pushing it to nearly 100k credit per day... May I ask you at which temperature this card is running at 1365 MHz core clock? Question also to the other guys here who mentioned that they are running a GTX 750 Ti: which core clocks at which temperatures? Thanks in advance for your replies :-) | |

| ID: 55336 | Rating: 0 | rate:

| |

|

Anybody found a RTX 3080 crunching GPUGrid tasks yet? | |

| ID: 55337 | Rating: 0 | rate:

| |

Question also to the other guys here who mentioned that they are running a GTX 750 Ti: which core clocks at which temperatures? On this 4-core CPU system, based on a GTX 750 Ti running at 1150 MHz, the temperature peaks at 66 °C, as seen in this Psensor screenshot. At the moment the screenshot was taken, three Rosetta@home CPU tasks were in process, thus using 3 CPU cores, along with one TONI ACEMD3 GPU task, using 100% of the GPU and the remaining CPU core to feed it. Room temperature while processing: 29.4 °C. PS: Some perceptive observer might have noted that in the previous Psensor screenshot, max. "CPU usage" was reported as 104%... Nobody is perfect. | |

| ID: 55338 | Rating: 0 | rate:

| |

Question also to the other guys here who mentioned that they are running a GTX 750 Ti: which core clocks at which temperatures? For starters keep in mind that I have a factory overclocked card, an Asus dual fan GTX 750 Ti OC. Thus to achieve this OC, I don't have to add much on my own. Usually I apply a 120-135 MHz overclock to both core and memory clock that seems to yield me a rather stable setup. Minor hiccups with the occasional invalid result every week or so. This card is running in an old HP Z400 workstation with bad to moderate airflow. See here: http://www.gpugrid.net/show_host_detail.php?hostid=555078 Thus, I adjusted the fan curve of the card slightly upwards to help with that. 11 out of 12 HT threads run, always leaving one thread overhead for the system. 10 run CPU tasks, 1 is dedicated to the GPU task. The card is usually sitting between 60 and 63 °C. Never seen temps above that range. When ambient temperatures are low to moderate, between 18 and 25 °C, the fans usually run at the 50% mark; for higher temps of 25-30 °C the fans run at 58%; and above 30 °C ambient the fans usually run at 66%. Running this OC setting at higher ambient temps means that it is harder to maintain the boost clock, so it rather fluctuates around it. The card is always under 100% CUDA compute load. Still hope that the card's fans will slow down going into the autumn/winter season, with ambient temps being great for cooling. The next lower fan setting is at 38% and you usually can't hear the card crunching away at its limit. Hope that helps. | |

| ID: 55339 | Rating: 0 | rate:

| |

|

Thank you very much for your pleasant comments. Forums have gained with you an excellent explainer! | |

| ID: 55340 | Rating: 0 | rate:

| |

I have a factory overclocked card, an Asus dual fan GTX 750 Ti OC. Thus to achieve this OC, I don't have to add much on my own. Usually I apply a 120-135 MHz overclock to both core and memory clock that seems to yield me a rather stable setup. Minor hiccups with the occasional invalid result every week or so. If there are any invalid results, you should lower the clocks of your GPU and/or its memory. An invalid result in a week could cause more loss in your RAC than the gain of overclocking. Overclocking the memory of the GPU is not recommended. Your card tolerates the overclocking because of two factors: 1. the GTX 750Ti is a relatively small chip (smaller chips tolerate more overclocking) 2. you overcommit your CPU with CPU tasks, and this hinders the performance of your GPU tasks. This has no significant effect on the points per day of a smaller GPU, but a high-end GPU will lose significant PPD under this condition. Perhaps the hiccups happen when there are not enough CPU tasks running simultaneously, making your CPU feed the GPU a bit faster than usual, and these rare circumstances reveal that it's overclocked too much. 11 out of 12 HT threads run, always leaving one thread overhead for the system. 10 run CPU tasks, 1 is dedicated to the GPU task. I recommend running as many CPU tasks simultaneously as your CPU has cores (6 in your case). You'll get halved processing times on CPU tasks (more or less depending on the tasks), and probably a slight decrease in the processing time of GPUGrid tasks. If your system had a high-end GPU I would recommend running only 1 CPU task and 1 GPU task, however these numbers depend on the CPU tasks and the GPU tasks as well. Different GPUGrid batches use different numbers of CPU cycles, so some batches suffer more from an over-committed CPU.
Different CPU tasks utilize the memory/cache subsystem to a different extent: Running many single-threaded CPU tasks simultaneously (this is the most common approach in BOINC) is the worst case, as this scenario results in multiplied data sets in the RAM. Operating on those simultaneously needs multiplied memory cycles, and this results in increased cache misses and uses up all the available memory bandwidth. So the tasks will spend their time waiting for the data, instead of processing it. For example: Rosetta@home tasks usually need a lot of memory, so these tasks hinder not just each other's performance, but the performance of GPUGrid tasks as well. General advice for building systems for crunching GPUGrid tasks: A single system cannot excel in both CPU and GPU crunching at the same time, so I build systems for GPU (GPUGrid) crunching with low-end (i3) CPUs; this way I don't mind if the CPU's only job is to feed a high-end GPU unhindered (by CPU tasks). | |
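
The "one CPU task per physical core" advice maps onto BOINC's computing preference "Use at most N % of the CPUs", which counts logical threads. A minimal sketch of that conversion follows; the function name is made up for illustration, and it covers only the percentage arithmetic, not how the client budgets CPU time for GPU tasks.

```python
def boinc_cpu_percent(tasks_wanted: int, logical_threads: int) -> float:
    """Percentage of logical CPUs to allow so that `tasks_wanted` tasks can run."""
    if logical_threads <= 0:
        raise ValueError("logical_threads must be positive")
    if not 0 <= tasks_wanted <= logical_threads:
        raise ValueError("tasks_wanted must be between 0 and logical_threads")
    return 100.0 * tasks_wanted / logical_threads


# 6 physical cores on a 12-thread CPU, as in the post above
print(boinc_cpu_percent(6, 12))  # 50.0
```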

| ID: 55341 | Rating: 0 | rate:

| |

|

It is too broad a statement to say that small Maxwell chips don't last as long as the larger Pascal or Turing chips. In my experience they have an equal chance of dying. My GTX 750 at 1450 MHz was dead in 18 months, while a Turing card at 2.0 GHz was dead in 24 hours. GPU failures are essentially random, no matter the size of the GPU die. | |

| ID: 55342 | Rating: 0 | rate:

| |

|

Thank you all for getting back to me! I guess I first had to digest all this information. If there are any invalid results, you should lower the clocks of your GPU and/or its memory. An invalid result in a week could cause more loss in your RAC than the gain of overclocking. This thought has already crossed my mind, but I never thought this through. Your answer is very logical so I guess I will monitor my OC setting a bit more closely. Haven't had any invalid results for at least 1 week, so the successively lowered OC setting finally reached a stable level. Temps and fans are at a very moderate level. But it definitely makes sense that the "hiccups" together with a overcommitted CPU would strongly penalise the performance of a higher end GPU. I recommend to run as many CPU tasks simultaneously as many cores your CPU has (6 in your case). Thanks for this advice. I have read this debate about HT vs. not HT your physical cores and especially coming from the WCG forums of how to improve your speed in reaching runtime based badges, I thought by HT I don't only double my runtime as virtual core threads also count equally but also would see some efficiency gains. What I had seen so far was an improvement of throughput in points of roughly 2-5% over the course of a week based solely on WCG tasks. However I didn't consider what you outlined here very well explained and thus I have already returned to using 6 threads only. – One curious question though: Will only running 6 out of 12 HT threads while HT is enabled in the BIOS setting, effectively result in the same result as running 100% of cores while HT is turned off in the BIOS? So the tasks will spend their time waiting for the data, instead of processing it. This is what I could never really put my finger around so far. Because what I saw while HT was turned on was that some tasks more than doubled their average runtime while some stayed below the double average mark. 
What I want to convey here is that there was much more variability in the tasks, which is consistent with what you describe. I guess some tasks got priority in the CPU queue while others were really waiting for data, and those that skipped the line essentially didn't quite double while others did more than double by quite some margin. Also, having thought that the same WUs on 6 physical cores would generate the same strain on my system as running WUs on all 12 HT threads, I saw that CPU temps ran roughly 3-4 degrees higher (~ 4-6%) while at the same time my heatsink fan revved up about 12.5-15% to keep up with the increase in temps. A single system cannot excel in both CPU and GPU crunching at the same time. As I plan my new system build to be a one-for-all solution, I won't be able to execute on this advice. I do plan however to keep more headroom for GPU related tasks. But I am still speccing the system as I am approaching the winter months. All I am sure of so far is that I want to base it on a 3700X. When I read "Turing had worst lasting lifetime for me than others." I questioned my initial gut feeling as to go with a Turing based GTX 1660 Ti. For me it seemed like the sweet spot in terms of TDP/power and efficiency as seen in various benchmarks. Looking at the data I posted today from F@H, I do however wonder whether a GTX 1660 Ti will keep up with the pace of hardware innovation we currently see. I don't want to have my system basically rendered outdated in just 1 or 2 years. Keep in mind that this comes from someone running an i5 4278U as the most powerful piece of silicon at the moment. I don't mean to keep up with every gen and continually upgrade my rig, and at the same time I know that no system will be able to maintain an awesome relative benchmark to the ever rising average compute power of volunteers' machines over the years, but I want to build a solid system that will do me good for at least some years.
And now, in retrospect, a mere GTX 1660 Ti seems to be rather "low-end". Even an older 1080Ti can easily outperform this card. Something to think about, two GTX1660 Supers on a machine can potentially keep pace with a GTX2080. From what I see, 2x 1660 Ti would essentially yield the same performance as an RTX 2060. That goes in the direction of what Pop Piasa initially also put up for discussion. Basically yielding the same performance but for a much more reasonable price. While power draw and efficiency are of concern to me, I do see a GTX 16xx / Super / Ti especially constrained on the VRAM side. And as discussed with Erich56, rod4X4 and Richard Haselgrove, I now know that while I have to pay attention to a few preliminary steps, running a system with 2 GPUs simultaneously is possible. Then I am coming back to the statement of Keith Myers who kindly pointed me in the direction of the GTX 1660 Ti, especially after I stressed that efficiency is a rather important issue for me. Have a look at the GPUFlops Theory vs Reality chart. The GTX 1660Ti is top of the chart for efficiency. That is what supported my initial gut feeling of looking within the GTX 16xx gen for a suitable upgrade. At least I am still very much convinced of my upgrade being a Ryzen chip! :) Let me wrap this up, and apologies for this rather unconventional and long post. I have taken away a lot so far in this thread. 1) I will scale back to physical cores only and turn HT off. 2) Overclocking a card will ultimately decrease its lifetime and there is a tradeoff between performance improvement and stability issues. 3) Overclocking a card makes absolutely no sense efficiency-wise if this is a factor you consider. Underclocking might be a thing to reach the efficiency sweet spot. 4) Some consumer grade cards have a compute penalty in place that can potentially be solved by overclocking the memory clock to revert back to P0 power state. 5) An adapter goes a long way toward solving any potential PSU connectivity issues.
6) Planning a system build/upgrade is a real pain as you have so many variables to consider, hardware choices to pick from, leaving headroom for future upgrades etc... 7) There is always someone around here who has an answer to your question :) Thanks again for all your replies. I will continue my search for the ultimate GPU for my upgrade. I am now considering a RTX 2060 Super which currently retails at 350€ vs a GTX 1660 Ti at 260€. An RTX would sit at 175 TDP which would be my 750 Ti combined with a new 1660 Ti. So many considerations. | |

| ID: 55400 | Rating: 0 | rate:

| |

Will only running 6 out of 12 HT threads while HT is enabled in the BIOS setting, effectively result in the same result as running 100% of cores while HT is turned off in the BIOS? Of course not. The main reason for turning HT off in the BIOS is to strengthen the system against cache side-channel attacks (like Spectre, Meltdown, etc.). I recommend leaving HT on in the BIOS, because this way the system can still use the free threads for its own purposes, or you can increase the number of running CPU tasks, if the RAC increase follows it. See this post. What I want to convey here is that there was much more variability in the tasks what is consistent with what you describe. That's true. Also, having thought that the same WUs on 6 physical cores would generate the same strain on my system as running WUs on all 12 HT threads, I saw that CPU temps ran roughly 3-4 degrees higher (~ 4-6%) while at the same time my heatsink fan revved up about 12.5-15% to keep up with the increase in temps. I lost you. The temps got higher with HT on? It's normal btw. Depending on the CPU and the tasks the opposite could happen also. As I plan my new system build to be a one-for all solution, I won't be able to execute on this advice. That's ok. My advice was a "warning". Some might get frustrated that my i3/2080Ti performs better at GPUGrid than an i9-10900k/2080Ti (because of its overcommitted CPU). When I read "Turing had worst lasting lifetime for me than others." I questioned my initial gut feeling as to go with a Turing based GTX 1660 Ti. I have run 4 pieces of 2080Tis for 2 years without any failures so far. However I have a second hand 1660Ti, which has stripes sometimes (it needs to be "re-balled"). I do however wonder a GTX 1660 Ti will keep up with the pace of hardware innovation we currently see. I don't want to have my system basically rendered outdated in just 1 or 2 years. I would wait for the mid-range Ampere cards. Perhaps there will be some without raytracing cores.
Or if not, the 3060 could be the best choice for you. Second hand RTX cards (2060S) could be very cheap considering their performance. | |

| ID: 55401 | Rating: 0 | rate:

| |

|

Thanks Zoltán for your insights! Of course not. ... I recommend to leave HT on in the BIOS Great explanation. Will do! I lost you. The temps got higher with HT on? Yeah, it got pretty confusing. What I tried to convey here was that when HT was off and I ran all 6 physical cores at 100% system load, as opposed to HT turned on and running 12 virtual cores on my 6 core system, the latter produced more heat, as the fans revved up considerably over the non-HT scenario. Running only 6 out of 12 HT threads produces a result comparable to leaving HT turned off and running all 6 physical cores. Hope that makes sense. That's ok. My advice was a "warning". Some might get frustrated that my i3/2080Ti performs better at GPUGrid than an i9-10900k/2080Ti (because of its overcommitted CPU). Couldn't this be solved by just leaving more than 1 thread for the GPU tasks? What about the impact on a dual-/multi GPU setup? Would this effect be even more pronounced here? I run 4 pieces of 2080Tis for 2 years without any failures so far. Well, that is at least a bit reassuring. But after all, running those cards 24/7 at full load is a tremendous effort. Surely the longevity decreases as a result of hardcore crunching. I would wait for the mid-range Ampere cards. Perhaps there will be some without raytracing cores. Or if not, the 3060 could be the best choice for you. Second hand RTX cards (2060S) could be very cheap considering their performance. Well, thank you very much for your advice! Unfortunately, I am budget constrained for my build at the ~1000 Euro mark and have to start from scratch as I don't have any parts that can be reused. Factoring in all those components (PSU, motherboard, CPU heatsink, fans, case, etc.) I will not be able to afford any GPU beyond the 300€ mark for the moment. I'll probably settle for a 1660 Ti/Super where I currently see the sweet spot between price and performance. I hope it'll complement the rest of my system well.
I will seize the next opportunity then (probably 2021/22) for a GPU upgrade. We'll see what NVIDIA will deliver in the meantime and hopefully by then, I can get in the big league with you :) Cheers | |

| ID: 55421 | Rating: 0 | rate:

| |

Some might get frustrated that my i3/2080Ti performs better at GPUGrid than an i9-10900k/2080Ti (because of its overcommitted CPU). Couldn't this be solved by just leaving more than 1 thread for the GPU tasks? I'm talking about the performance of the GPUGrid app, not the performance of other projects' (or mining) GPU apps. The performance loss of the GPU depends on the memory bandwidth utilization of the given CPU (and GPU) app(s), but generally it's not enough to leave 1 thread for the GPU task(s) to achieve maximum GPU performance. There will always be some loss of GPU performance caused by the simultaneously running CPU app(s) (the more the worse). Multi-threaded apps could be less harmful for the GPU performance. Everyone should decide how much GPU performance loss he/she tolerates, and set their system accordingly. As high-end GPUs are very expensive I like to minimize their performance loss. I've tested it with rosetta@home: when more than 1 instance of rosetta@home was running, the GPU usage dropped noticeably. The test was done on my rather obsolete i7-4930k. However, this obsolete CPU has more than twice as much memory bandwidth per CPU core as the i9-10900 has:

| CPU | CPU cores | CPU threads | # memory channels | memory type & frequency | memory bandwidth | mem. bandwidth per CPU core |
|---|---|---|---|---|---|---|
| i7-4930k | 6 | 12 | 4 | DDR3-1866MHz | 59.7GB/s | 9.95GB/s/core |
| i9-10900 | 10 | 20 | 2 | DDR4-2933MHz | 45.8GB/s | 4.58GB/s/core |

(These CPUs are not in the same league, as the i9-10920X would match the league of the i7-4930k). My point is that it's much easier to saturate the memory bandwidth of a present high-end desktop CPU with dual channel memory, because the number of CPU cores increased more than the available memory bandwidth. What about the impact on a dual-/multi GPU setup? Would this effect be even more pronounced here? Yes. Simultaneously running GPUGrid apps hinder each other's performance as well (even if they run on different GPUs). Multi GPUs share the same PCIe bandwidth, that's the other factor. Unless you have a very expensive socket 2xxx CPU and MB, but it's cheaper to build 2 PCs with inexpensive MB and CPU for 2 GPUs. ...running those cards 24/7 at full load is a tremendous effort. Surly the longevity decreases as a result of hardcore crunching. It does, especially for the mechanical parts (fans, pumps). I lower the power limit of the cards for the summer, and I also take the hosts with older GPUs offline for the hottest months (this year was an exception due to the COVID-19 research). | |
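
The per-core column of the comparison above is just total memory bandwidth divided by the core count. A short sketch that reproduces it from the figures given in the post (the class and field names are illustrative only):

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CpuMemory:
    name: str
    cores: int
    bandwidth_gb_s: float  # total memory bandwidth, as quoted in the post

    def per_core(self) -> float:
        """Memory bandwidth available per physical core, in GB/s."""
        return self.bandwidth_gb_s / self.cores


cpus = [
    CpuMemory("i7-4930k", cores=6, bandwidth_gb_s=59.7),
    CpuMemory("i9-10900", cores=10, bandwidth_gb_s=45.8),
]

for cpu in cpus:
    print(f"{cpu.name}: {cpu.per_core():.2f} GB/s per core")

ratio = cpus[0].per_core() / cpus[1].per_core()
print(f"ratio: {ratio:.2f}x")  # roughly 2.17x in favour of the older CPU
```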

| ID: 55431 | Rating: 0 | rate:

| |

|

Thanks Zoltán again for your valuable insights. The advice about the power limit led me to lower it and to prioritise temperature over performance in MSI Afterburner. All GPU apps now run at night with the card comfortably sitting at 50-55 degrees at 35% fan speed vs. 62/63 degrees at 55% fan speed, and the card is now no longer audible at all. That comes along with a small performance penalty but I don't notice a radical slowdown. The power limit of this card is now set to 80 percent, which corresponds to a maximum temp of 62 degrees. This had a tremendous effect on the overall operating noise of my computer: prior to that adjustment, with the card running at 62 degrees in my badly ventilated case, the hot air continually heated up the CPU environment (the CPU itself ran only at 55-57 degrees) and the CPU heatsink fan had to run faster to compensate for the GPU's hot air exhaust. Now both components are running at similar temps and the heatsink fan works at a slower speed, reducing the overall noise. | |

| ID: 55653 | Rating: 0 | rate:

| |

|

Depends on the cooler design. Typically a 3 fan design cools better than a 2 fan design simply because the cooler heat sink is larger because of the need to mount 3 axial fans side by side onto the heat sink and thus has a larger amount of radiating surface area compared to a 2-fan heat sink. | |

| ID: 55657 | Rating: 0 | rate:

| |

Is there any particular brand that anyone of you can recommend for superior cooling and/or low noise levels? Superior cooling is done by water, not air. You can build your own water cooled system (it's an exacting process, also expensive and needs a lot of experience), or you can buy a GPU with a water cooler integrated on it. They usually run at 10°C lower temperatures. I have a Gigabyte Aorus Geforce RTX 2080Ti Xtreme Waterforce 11G, and I'm quite happy with it. This is my only card crunching GPUGrid tasks at the moment: it runs at 250W power limit (originally it's 300W), 1890MHz GPU 64°C, 13740MHz RAM, 1M PPD. As for air cooling the best cards are the MSI Lightning (Z) and MSI Gaming X Trio cards. They have 3 fans, but these are large diameter ones, as the card itself is huge (other 3-fan cards have smaller diameter fans, as the card is not tall and long enough for larger fans). I have two MSI Geforce Gaming X Trio cards (a 2080 Ti and a 1080 Ti) and these have the best air cooling compared to my other cards. If you buy a high-end (=power hungry) card, I recommend buying only the huge ones (look at their height and width as well). | |

| ID: 55658 | Rating: 0 | rate:

| |

|

Thank you both for your response. As always I really appreciate the constructive and kind atmosphere on this forum! | |

| ID: 55665 | Rating: 0 | rate:

| |

|

I have been purchasing nothing but EVGA Hybrid cards since the 1070 generation. | |

| ID: 55677 | Rating: 0 | rate:

| |

|

That's interesting. Didn't even know that hybrid cards existed up until now. Those temps definitely look impressive and are really unmatched compared to air cooling only solutions. Seems like a worthwhile option that I'll likely consider down the road but not for now as I am still very much budget constrained. | |

| ID: 55680 | Rating: 0 | rate:

| |

|

https://www.evga.com/products/product.aspx?pn=08G-P4-3178-KR | |

| ID: 55684 | Rating: 0 | rate:

| |

|

Now, I have narrowed it down further to finally go with a 1660 Super. The premium you end up paying beyond (1660Ti) is just not worth the additional marginal performance. The intention behind this is to now get an efficient card that still has adequate performance (~50% of RTX 2080Ti) at an attractive price point and save up more to invest in a latest gen low-end card (preferably a hybrid one) that will boost overall performance and stay comfortably within my 750W power limit. | |

| ID: 55738 | Rating: 0 | rate:

| |

|

The ASUS ROG Strix cards have an overall well liked reputation for performance, features and longevity. | |

| ID: 55739 | Rating: 0 | rate:

| |

|

Thanks for your feedback! As always Keith, much appreciated. Definitely value your feedback highly. | |

| ID: 55740 | Rating: 0 | rate:

| |

|

I second what Keith said, and I also have found that to be true of motherboards, when comparing Asus to Gigabyte. | |

| ID: 55741 | Rating: 0 | rate:

| |

|

The 1660 SUPER for price and performance, combined with Asus ROG for its features, longevity and thermal capacity, are both excellent choices. | |

| ID: 55742 | Rating: 0 | rate:

| |

|

Thank you both for your replies as well. I guess consensus is that Asus might offer the superior product. That's definitely reassuring :) | |

| ID: 55747 | Rating: 0 | rate:

| |

|

I am in the process of upgrading my GTX 750 Ti to a GTX 1650 Super on my Win7 64-bit machine, and thought I would do a little comparing first to my Ubuntu machines. The new card won't arrive until tomorrow, but this is what I have thus far. The output of each card is averaged over at least 20 work units. i7-4771 Win7 64-bit Conclusion: The GTX 1650 Super on Ubuntu is almost twice as efficient as the GTX 750 Ti on Win7. But these time averages still jump around a bit when using BOINCTask estimates, so you would probably have to do more to really get a firm number. The general trend appears to be correct though. | |

| ID: 55749 | Rating: 0 | rate:

| |

|

It's great seeing you again here Jim. From the discussion over at MLC I know that your top priority in GPU computing is efficiency as well. The numbers you shared seem very promising. I can only imagine the 1660 Super being very close to the results of a 1650 Super. I don't know if you already know the resources hidden all over in this thread, but I recommend you take a look if you don't. | |

| ID: 55750 | Rating: 0 | rate:

| |

Let me point one out in particular that Keith shared with me. It's a very detailed GPU comparison table at Seti (performance and efficiency): https://setiathome.berkeley.edu/forum_thread.php?id=81962&postid=2018703
Thanks. The SETI figures are quite interesting, but they optimize their OpenCL stuff in ways that are probably quite different than CUDA, though the trends should be similar. I am out of the market for a while though, unless something dies on me. | |

| ID: 55751 | Rating: 0 | rate:

| |

|

Incidentally all, have you seen the Geekbench CUDA benchmark chart yet? | |

| ID: 55752 | Rating: 0 | rate:

| |

|

When the science app is optimized for CUDA calculations, it decimates ANY OpenCL application. | |

| ID: 55753 | Rating: 0 | rate:

| |

|

Well, that thought has crossed my mind before. But I guess that availability will become an issue again next year and current retail prices still seem quite a bit higher than the suggested launch prices from Nvidia. And unfortunately I will still be budget constrained. Currently depending on the deals I would get, I look at ~650/700$ for all my components excluding the GPU. That leaves just a little over 300$ for this component. Looking at the rumoured price predictions for the 3050/3060 cards, at cheapest I will look at ~350$ for a 3050 Ti and that is without the price inflation we'll likely see on the retailer side at launch. Small inventory and an initial lack of supply will likely make matters even worse. | |

| ID: 55755 | Rating: 0 | rate:

| |

|

I am only aware of the projects I run. That said, we are still waiting for Ampere compatibility here. | |

| ID: 55756 | Rating: 0 | rate:

| |

"I am only aware of the projects I run. That said, we are still waiting for Ampere compatibility here."
I think the RTX3000 "should" work at MW since their apps are OpenCL, like Einstein. But it's probably not worth it vs other cards since Nvidia nerfed FP64 so bad on the Ampere Geforce line, even worse than Turing. | |

| ID: 55757 | Rating: 0 | rate:

| |

- 84% of a RTX 3050 Ti --> 119% vs. GTX 1660 Super
RTX 3050 Ti
RTX 3060
Based on stats you quoted, I would definitely hold off on Ampere until Gpugrid releases Ampere compatible apps.
GTX 1660 SUPER vs Ampere:
- RTX 3050 Ti: 119% performance increase, 125% power increase.
- RTX 3060: 137% performance increase, 150% power increase.
Not great stats. I am sure these figures are not accurate at all. Once an optimised CUDA app is released for Gpugrid, the performance should be better (but the increase is yet unknown).
To add to your considerations. Purchase a GTX 1650 SUPER now, selling real cheap at the moment and a definite step up on your current GPU. Wait until May, by then a clearer picture of how the market and BOINC projects are interacting with Ampere, and then purchase an Ampere card. This gives you time to save up for the Ampere GPU as well. The GTX 1650 SUPER could be relegated to your retired rig for projects, testing etc, or sold on eBay. Pricing on Ampere may have dropped a bit by May, (due to pressure from AMD and demand for Nvidia waning after the initial launch frenzy) so the extra money to invest in a GTX 1650S now, may not be that much. | |

| ID: 55758 | Rating: 0 | rate:

| |

"we are still waiting for Ampere compatibility here"
Thanks Keith! Well, that's one of the reasons that I am still hesitant about purchasing an Ampere card. I'd much rather wait for the support at major projects and see from there. And that is very much along the same lines as rod4x4 has fittingly put it.
"Wait until May, by then a clearer picture of how the market and BOINC projects are interacting with Ampere, and then purchase an Ampere card."
Delaying an RTX 3000 series card purchase, from my perspective, seems like a promising strategy. The initial launch frenzy you are mentioning is driving prices up incredibly... And I predict that the 3070/3060 Ti will attract the most demand that'll inevitably drive prices up further – at least in the short term.
"Purchase a GTX 1650 SUPER now, selling real cheap at the moment and a definite step up on your current GPU."
Very interesting train of thought rod4x4! I guess the 160-180$ would still be money well spent (for me at least). Any upgrade would deliver a considerable performance boost from a 750 Ti :) I'd give up 2GB of memory, a bit of bandwidth, and just ~20% performance but at a 30-40% lower price... I'll think about that.
"This gives you time to save up for the Ampere GPU as well."
Don't know if this is gonna happen this quickly for me, but that's definitely the upgrade path I am planning on. Would an 8-core Ryzen (3700X) offer enough threads to run a future dual GPU setup with a 1650 Super + RTX 3060 Ti/3070 while still allowing free resources to be allocated to CPU projects?
"Based on stats you quoted, I would definitely hold off on Ampere until Gpugrid releases Ampere compatible apps."
Well, there is definitely no shortage of rumours recently and numbers did change while I was looking up the stats from techpowerup. So, there is surely lots of uncertainty surrounding these preliminary stats, but they do offer a bit of guidance after all.
"Pricing on Ampere may have dropped a bit by May, (due to pressure from AMD and demand for Nvidia waning after the initial launch frenzy) so the extra money to invest in a GTX 1650S now, may not be that much."
I fully agree on your assessment here! Thanks. Now it looks as if I am going down the same route as Jim1348 after all! :)
"since Nvidia nerfed FP64 so bad on the Ampere Geforce line, even worse than Turing."
I saw that too Ian&Steve C. Especially compared to AMD cards, their FP64 performance actually looks rather poor. Is this due to a lack of technical ability or just a product design decision to differentiate them further from their workstation/professional cards? | |

| ID: 55759 | Rating: 0 | rate:

| |

Would an 8-core ryzen (3700x) offer enough threads to run a future dual GPU setup with a 1650 Super + RTX 3060 Ti/3070 while still allowing free resources to be allocated to CPU projects? I am running a Ryzen 7 1700 with dual GPU and WCG. I vary the CPU threads from 8 to 13 threads depending on the WCG sub-project. (Mainly due to the limitations of the L3 cache and memory controller on the 1700) The GPUs will suffer a small performance drop due to the CPU thread usage, but this is easily offset by the knowledge other projects are benefiting from your contributions. The 3700X CPU is far more capable than a 1700 CPU and does not have the same limitations, so the answer is YES, the 3700X will do the job well! | |

| ID: 55760 | Rating: 0 | rate:

| |

"I saw that too Ian&Steve C. Especially compared to AMD cards, their FP64 performance actually looks rather poor. Is this due to a lack of technical ability or just a product design decision to differentiate them further from their workstation/professional cards?"
Yes, it is a conscious design decision since the Kepler family of cards. The change in design philosophy started with this generation. The GTX 780Ti and the Titan Black were identical cards for the most part, based on the GK110 silicon. The base clock of the Titan Black was a tad lower but it had the same number of CUDA cores and SMs. But you could switch the Titan Black to 1:3 FP64 mode in the video driver when the driver detected that card type, while the GTX 780Ti had to run at 1:24 FP64 mode. In later designs Nvidia made changes in the silicon of the GPU itself to de-emphasize FP64 performance and didn't rely on just a driver change. So it is not out of incompetence, just the focus on gaming, because they think that anyone that buys their consumer cards is solely focused on gaming. But in reality we crunchers use the cards not for their intended purposes because it is all we can afford, since we don't have the industrial strength pocketbooks of industry, HPC computing and higher education entities to purchase the Quadros and the Teslas. The generation design of the silicon is the same among the consumer cards and professional cards, but the floating point pathways and registers are implemented very differently in the professional card silicon. So there are actual differences in the GPU dies between the two product stacks. The professional silicon gets the "full-fat" version and the consumer dies are very cut-down versions. | |

| ID: 55761 | Rating: 0 | rate:

| |

|

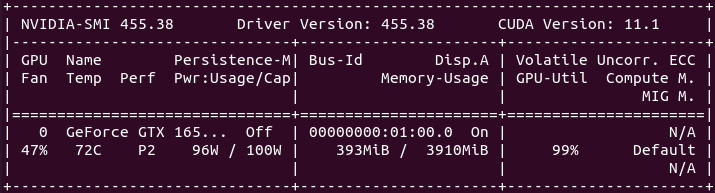

On November 16th rod4x4 wrote:
"To add to your considerations."
At this point, perhaps I can help a bit the way I like: with a real-life example. I have both GTX 1650 SUPER and GTX 750 Ti graphics cards running GPUGrid 24/7 in dedicated systems #186626 and #557889 for more than one month. Current RAC for the GTX 1650 SUPER has settled at 350K, while for the GTX 750 Ti it is at 100K: a performance ratio of about 3.5. Based on nvidia-smi:
- GTX 1650 SUPER power consumption: 96W at 99% GPU utilization
- GTX 750 Ti power consumption: 38W at 99% GPU utilization
The power consumption ratio is about 2.5. Bearing in mind that the performance ratio was 3.5, the GTX 1650 SUPER is clearly beating the GTX 750 Ti in power efficiency. However, the GTX 750 Ti is winning in cooling performance: it is maintaining 60 ºC at full load, compared to 72 ºC for the GTX 1650 SUPER, at 25 ºC room temperature. But this GTX 750 Ti is not exactly a regular graphics card, but a repaired one... | |
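The comparison above can be worked through as a short calculation. This is only a sketch using the RAC and wattage figures quoted in the post; the helper names `credits_per_watt` and `compare` are made up for illustration, and RAC is treated here as a rough stand-in for sustained throughput.

```python
# Figures quoted in the post above (RAC in credits, power in watts).
cards = {
    "GTX 1650 SUPER": {"rac": 350_000, "watts": 96},
    "GTX 750 Ti": {"rac": 100_000, "watts": 38},
}


def credits_per_watt(rac, watts):
    """Credit earned per watt of board power (hypothetical helper)."""
    return rac / watts


def compare(new, old):
    """Return (performance ratio, power ratio, efficiency gain) of new vs. old."""
    perf_ratio = new["rac"] / old["rac"]
    power_ratio = new["watts"] / old["watts"]
    return perf_ratio, power_ratio, perf_ratio / power_ratio


perf, power, eff = compare(cards["GTX 1650 SUPER"], cards["GTX 750 Ti"])
print(f"performance ratio: {perf:.2f}")
print(f"power ratio:       {power:.2f}")
print(f"efficiency gain:   {eff:.2f}x")
```

With these inputs the newer card does 3.5 times the work for roughly 2.5 times the power, so the credit earned per watt improves by a bit less than 1.4 times.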

| ID: 55762 | Rating: 0 | rate:

| |

"The 3700X CPU is far more capable than a 1700 CPU and does not have the same limitations, so the answer is YES, the 3700X will do the job well!"
Well, that is again tremendously reassuring! Thanks
"GPUs will suffer a small performance drop due to the CPU thread usage"
Well, Zoltan elaborated a couple of times on this topic very eloquently. While I can definitely live with a small performance penalty, it was interesting to me that newer CPUs might actually see their memory bandwidth saturated faster than earlier lower-thread count CPUs. And that by comparison, the same high-end GPU running alongside a high-end desktop processor might actually perform worse than if being run alongside an earlier CPU with a higher # of memory channels and bandwidth.
"Yes, it is a conscious design decision since the Kepler family of cards."
Unfortunately, from the business perspective, it makes total sense for them to introduce this price discrimination barrier between their retail and professional product lines.
"But in reality we crunchers use the cards not for their intended purposes because it is all we can afford ... industry, HPC computing and higher education entities to purchase the Quadros and the Teslas."
Still, it is a rather poor design decision if you compare it to the design philosophy of AMD and the FP64 they are delivering with their retail products...
"At this point, perhaps I can help a bit the way I like: with a real-life example."
You never disappoint with your answers as I love the way you go about giving feedback. Real-life use cases are always best to illustrate arguments. Definitely interesting. I thought that, given most card manufacturers offer very similar cooling solutions for the 1650 Super and 1660 Super cards, the 1650 Super rated @ only 100W TDP would run at similar temps if not lower than the 1660 Super cards. Might I ask what model you are running on? And at what % the GPU fans are running?
Eyeing the ROG Strix 1650 Super, it seems to offer the same cooling solution as its 1660 Super counterpart and test reviews I read suggested that this card runs very cool (< 70 ºC) and this is at 125W. Would be keen on your feedback. | |

| ID: 55763 | Rating: 0 | rate:

| |

Current RAC for GTX 1650 SUPER is settled at 350K, while for GTX 750 TI is at 100K: about 3,5 performance ratio. I just received my GTX 1650 Super, and have run only three work units, but it seems to be about the same gain. It is very nice. I needed one that was quiet, so I bought the "MSI GeForce GTX 1650 Super Ventus XS". It is running at 66 C in a typical mid-ATX case with just a rear fan, in a somewhat warm room (73 F). Now it is the CPU fan I need to work on. | |

| ID: 55764 | Rating: 0 | rate:

| |

|

That's great news Jim! Thanks for providing feedback on this. Definitely seems that the 1650 Super is a capable card and will accompany my 750Ti for the interim time :) | |

| ID: 55765 | Rating: 0 | rate:

| |

The variation seen in the operating temperature is probably mostly due to case airflow characteristics right? ... as I can't really see how such a big gap in temp could only result from the different cooling solutions on the cards. (That's ~9% difference!) Maybe so. I can't really tell. I have one other GTX 1650 Super on GPUGrid, but that is in a Linux machine. It is a similar size case, with a little stronger rear fan, since it does not need to be so quiet. It is an EVGA dual-fan card, and is running at 61 C. But it shows only 93 watts power, so a little less than on the Windows machine. If I were to choose for quiet though, I would go for the MSI, though they are both nice cards. | |

| ID: 55766 | Rating: 0 | rate:

| |

"Might I ask what model you are running on? And at what % the GPU fans are running."
Sure. The model I've talked about is an Asus TUF-GTX1650S-4G-GAMING: https://www.asus.com/Motherboards-Components/Graphics-Cards/TUF-Gaming/TUF-GTX1650S-4G-GAMING/ As Jim1348 confirms, I'm very satisfied with its performance, considering its power consumption. Regarding working temperature and fan setting, I usually let my cards work at factory preset clocks and fan curves. As can be seen in this nvidia-smi command screenshot, this particular card seems to feel comfortable at 72 ºC (161.6 ºF), since it isn't pushing fans beyond 47% at this temperature... It is installed in a standard ATX tower case, with a front 120 mm fan, two rear, and one upper 80 mm low noise chassis fans. Current room temperature is 25 ºC (77 ºF) | |

| ID: 55767 | Rating: 0 | rate:

| |

|

Interesting to hear about your personal experiences, now about 3 different models already. Seems to be a very decent card overall! | |

| ID: 55769 | Rating: 0 | rate:

| |

"As can be seen in this nvidia-smi command screenshot, this particular card seems to feel comfortable at 72 ºC (161.6 ºF), since it isn't pushing fans beyond 47% at this temperature..."
Thanks again for pointing this out. As I wasn't aware of this command before, I was stoked to try it out with my card as well and was quite surprised with the output... Positively, as the card showed a much lower wattage than anticipated, but negatively as it thereby obviously did not reach its full potential. As NVIDIA specified the 750 Ti card architecture with 60W TDP, I suspected the card being very close to it. As it is additionally an OC edition, particularly an Asus 750 Ti OC, and it is powered with an additional 6 pin power adapter, in theory that command should report much more than the 60W recommended by NVIDIA. But to my surprise it was maxed out at 38.5W, which I obtained by using nvidia-smi -q -d POWER. That is especially surprising as the card should be able to draw 75W (6 pin) + 75W (PCIe) = 150W. What the heck do I need the additional power for if the card would only need ~25% of the available power?
As I have heard many times by now that overclocked cards can easily go above their specified power limit, as their PCBs normally support up to 120-130% depending on make and model, I am honestly quite stumped by this. Is there a way to increase that specified power limit in the card's BIOS somehow? I feel like, even by increasing it a bit, but staying close to the 60W, I could increase performance while still operating with safe settings as cooling looks to be sufficient. Any idea on how to do this? Currently I am at 64.2% of NVIDIA's TDP rating. That seems to be way too cautious... As discussed in the beginning of this thread, my card sometimes threw a compute error while it was overclocked and Zoltan suggested this might be the cause.
I can now better verify this as the nvidia-smi -q -d CLOCK command now said that when tasks failed, I operated the card at a memory clock that was higher (2,845 MHz) than the maximum supported clock speed (2,820 MHz), while according to the output there is still some headroom with the core clock (~91 MHz to 1,466 MHz). This mainly happened due to my setting of mem OC = core OC, which might not have been tested long enough with MSI Kombustor to attain an accurate stability reading from the benchmarks that were running. | |
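The arithmetic in this post can be checked with a few lines. This is a minimal sketch using only the numbers quoted above (38.5W enforced limit, 60W reference TDP, 75W + 75W connector budget, and the reported memory clocks); the function names are hypothetical and nothing here queries the card itself.

```python
def fraction_of(value_w, reference_w):
    """Share of a reference wattage that a given wattage represents."""
    return value_w / reference_w


def clock_overshoot_mhz(current_mhz, max_supported_mhz):
    """How far a clock sits above its supported maximum (0 if within range)."""
    return max(0, current_mhz - max_supported_mhz)


enforced_limit_w = 38.5           # limit reported by nvidia-smi -q -d POWER
reference_tdp_w = 60.0            # NVIDIA reference TDP quoted in the post
connector_budget_w = 75.0 + 75.0  # 6-pin plug plus PCIe slot

print(f"limit vs. reference TDP: {fraction_of(enforced_limit_w, reference_tdp_w):.1%}")
print(f"limit vs. connector budget: {fraction_of(enforced_limit_w, connector_budget_w):.1%}")
print(f"memory clock overshoot: {clock_overshoot_mhz(2845, 2820)} MHz")
```

The 25 MHz memory overshoot is consistent with the suspicion that the occasional compute errors came from the memory overclock rather than from the core clock.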

| ID: 55771 | Rating: 0 | rate:

| |

|

You can't force a card to use more power than the application requires. | |

| ID: 55772 | Rating: 0 | rate:

| |

|

But in this case one single GPUGrid task utilises 100% of the CUDA compute ability and is running into the power limit. It uses 38.5W out of the specified max wattage of 38.5W that can be read from the nvidia-smi -q -d POWER output. Isn't there a way to increase the max power limit on this card when the application is demanding it and the NVIDIA reference card design is rated at the much higher TDP of 60W?
"To increase the utilization of the card, you generally run two or more work units on the card."
That's what I did so far with GPU apps that demanded less than 100% CUDA compute capacity for a single task, such as Milkyway (still rather poor FP64 perf.) or MLC, which usually use 60-70%. | |

| ID: 55773 | Rating: 0 | rate:

| |

|

To get more performance on a card, you overclock it. | |

| ID: 55777 | Rating: 0 | rate:

| |

|

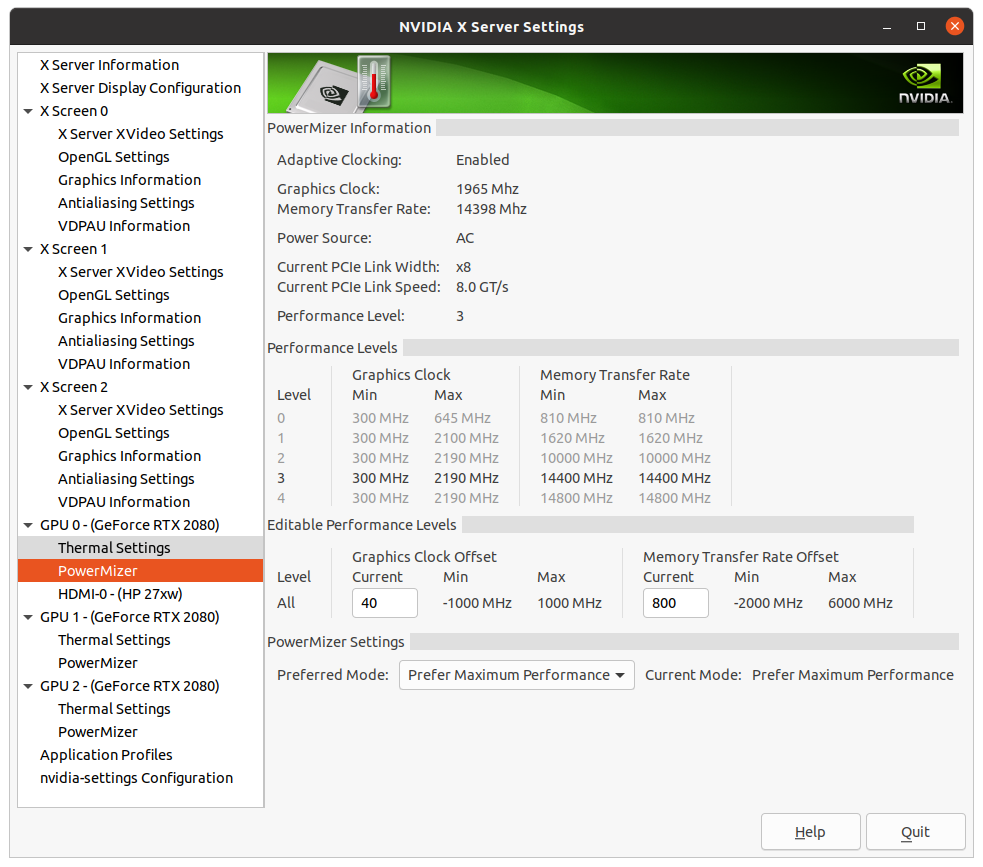

This is what you can do with the coolbits set for the cards in the system. | |

| ID: 55778 | Rating: 0 | rate:

| |

This is what you can do with the coolbits set for the cards in the system. Need to knock the s off the https for the img tags, with this old server software. | |

| ID: 55779 | Rating: 0 | rate:

| |

|

Thanks for the fix Richard. | |

| ID: 55783 | Rating: 0 | rate:

| |

|

Thanks for those awesome tips Keith! I will definitely give it a try as soon as my currently running climate prediction tasks have finished and I can safely reboot into Linux on my system. Thanks also for making it so clear by attaching the screenshots!
"First thing to do with the higher stack Nvidia cards is to restore the memory clock to P0 power state that the Nvidia drivers deliberately downclock when a compute application is run."
Will take that advice to heart in the future for the next card :) Curious about what improvement these suggested changes might result in, I made some changes in MSI Afterburner on Windows. Might be the "beginner solution", but after having changed to a bit more aggressive fan curve profile, increasing the core overclock to now 1.4 GHz, and maxing out the temp. limit to 100C, I already start to see some of the desired changes.
Prior:
- 1365 MHz core/2840 MHz mem clock
- occasional compute errors thrown on GPUGrid
- 60-63C
- 55% fan @20C ambient or 65% @30C ambient
- GPUGrid task load: (1) compute: 100%, (2) power: 100% (38.5W)
- boost clock not sustained for longer time periods
Now:
- 1400 MHz core/2820 MHz mem clock
- no compute errors so far
- 63C constantly (fan curve adjust. temp. point)
- 70% fan @20C ambient
- GPUGrid task load: (1) compute: 100%, (2) power: ø ~102% (39.2W) / max 104.5% (40.2W) --> got these numbers by running a task today and averaging out the wattage numbers that I obtained over a 10 min period while under load with the following command "nvidia-smi --query-gpu=power.draw --format=csv --loop-ms=1000". Maybe I should have done it for a shorter period but at a lower "resolution".
- boost clock now sustained at 1391 MHz for longer periods.
While this is already an easy way of improving performance slightly, I still haven't figured out how to keep the card from always running into the power limit, or how to increase the limit altogether for that matter.
According to what I found out so far, the PCB of this card is not rated to operate safely for longer periods at higher than stock voltages, but what I have in mind is rather a more subtle change in max. power. I would like to see how the card would operate at 110% of its current 38.5W and then see from there. The only way I have come up with is to modify the BIOS and then flash it to the card. I did the first step successfully and now have a modified BIOS version with a power limit of 42.3W besides a copy of the original version, but unfortunately no way to flash it to the card, even just for trying, as I don't want to fry the card... Nvflash is apparently not working on Windows and I would have to boot into DOS in order for the Nvflash software to work and deploy the new BIOS. That is way over my head, especially as I don't feel comfortable not understanding all of the technical specifications of the BIOS, even though I only touched the power limit. For now, I am hitting a roadblock and feel much more comfortable with the current card settings. Thanks for the quick "power boost". Definitely looking forward to taking a closer look when booted into Linux | |
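The averaging step described above (logging power.draw once per second and then taking the mean) could be scripted roughly as follows. This is a sketch that assumes the log is plain text as printed by the quoted nvidia-smi command, i.e. a header line followed by values such as "39.20 W"; the function names and the sample log are made up for illustration.

```python
def parse_power_samples(csv_text):
    """Extract wattage readings from nvidia-smi CSV output, skipping other lines."""
    samples = []
    for line in csv_text.splitlines():
        tokens = line.split()
        if not tokens:
            continue
        try:
            samples.append(float(tokens[0]))
        except ValueError:
            # Header line ("power.draw [W]") or an unavailable reading.
            continue
    return samples


def summarize(samples, power_limit_w):
    """Return mean and max draw, plus both as a percentage of the power limit."""
    mean_w = sum(samples) / len(samples)
    max_w = max(samples)
    return {
        "mean_w": mean_w,
        "max_w": max_w,
        "mean_pct": 100 * mean_w / power_limit_w,
        "max_pct": 100 * max_w / power_limit_w,
    }


# Hypothetical three-second excerpt of a log file.
sample_log = "power.draw [W]\n38.50 W\n39.20 W\n40.20 W\n[N/A]\n"
stats = summarize(parse_power_samples(sample_log), power_limit_w=38.5)
print(stats)
```

In practice the command's output would be redirected to a file for the 10 minute run and the file contents passed to `parse_power_samples`.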

| ID: 55785 | Rating: 0 | rate:

| |

|

Well as I mentioned earlier, Nvidia doesn't usually hamstring the lower stack cards like your GTX 750Ti. So you don't need to overclock the memory to make it run at P0 clocks because it already is doing that.
sudo nvidia-xconfig --thermal-configuration-check --cool-bits=28 --enable-all-gpus
That will un-grey the fan sliders and the entry boxes for core and memory clocks. Log out and log back in, or reboot, to enable the changes. Then open up the Nvidia X Server Settings application the drivers install and start playing around with the settings. | |
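For anyone wondering where the value 28 comes from: Coolbits is a bitmask, and 28 is the sum of three flags. The sketch below decodes it; the flag descriptions are paraphrased from memory of the NVIDIA Linux driver README and should be treated as an assumption to verify against the README for the installed driver version.

```python
# Coolbits flag meanings, paraphrased (assumption: check the driver README).
COOLBITS_FLAGS = {
    1: "clock controls on older (pre-Fermi) GPUs",
    2: "SLI between GPUs with different memory sizes",
    4: "manual fan speed control",
    8: "clock offset controls (PowerMizer page)",
    16: "overvoltage control",
}


def decode_coolbits(value):
    """List the features enabled by a Coolbits bitmask value."""
    return [desc for bit, desc in COOLBITS_FLAGS.items() if value & bit]


for feature in decode_coolbits(28):
    print(feature)
```

Under that reading, 28 = 4 + 8 + 16 enables fan control, clock offsets and overvoltage, which matches the un-greyed fan sliders and clock entry boxes described above.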

| ID: 55787 | Rating: 0 | rate:

| |

|

To flash card BIOS' you normally make a DOS boot stick and put the flashing software and the ROM file on it. | |

| ID: 55788 | Rating: 0 | rate:

| |

|

I certainly will, just hadn't much time today to do my research. But yeah, this is how far I have got already with my google research. I strongly plan on further researching this using google just out of curiosity on the weekend when I have more time. :)
"So you don't need to overclock the memory to make it run at P0 clocks because it already is doing that."
I will scale it back to stock speed then and see how/whether it'll impact performance. That command line is greatly appreciated. I just wish to understand the rationale of the cardmaker (Asus) for including a 6-pin power plug requirement but then restricting the card to a much lower value, at which the PCIe wattage supply would easily have sufficed. Just can't wrap my head around that... | |

| ID: 55789 | Rating: 0 | rate:

| |

|

If I remember correctly, the GTX 750 Ti was a transition product that straddled the Kepler and Maxwell families. | |

| ID: 55791 | Rating: 0 | rate:

| |

|

Well, thanks! Both are plausible explanations. I will let things rest now :) | |

| ID: 55795 | Rating: 0 | rate:

| |

|

There is a nice OC tool on Linux - GreenWithEnvy. Not as powerful as MSI Afterburner on Windows, but still usable. And it has a Windows-like appearance. | |

| ID: 55796 | Rating: 0 | rate:

| |

"There is a nice OC tool on Linux - GreenWithEnvy. Not as powerful as MSI Afterburner on Windows, but still usable. And it has a Windows-like appearance."
I tried to run it when it first came out. Was incompatible with multi-gpu setups. I haven't revisited it since. Had to install a ton of precursor dependencies. Not for a Linux newbie in my opinion. | |

| ID: 55797 | Rating: 0 | rate:

| |

|

Great! Didn't know that such a software alternative existed for Linux. Will take a closer look as soon as I get the chance. Thanks for sharing! | |

| ID: 55798 | Rating: 0 | rate:

| |

|

Well I just revisited the GWE Gitlab repository and read through the changelogs. | |

| ID: 55802 | Rating: 0 | rate:

| |

|

Recently I tried running some WUs of the new Wieferich and Wall-Sun-Sun Prime Grid subproject out of curiosity. Usually I don't run this project, but in my experience these math tasks are usually very power hungry. However, I was still very much surprised to see that this was the first task that did push well beyond the power limit of this card, with a max wattage draw of 64.8W and an average of 55W while maintaining the same settings. Even GPUGrid can't push the card this far. Though this is well above the 38.5W defined power limit for this particular card, it is now close to the reference card's TDP rating of 65W. All is running smoothly at this wattage draw and temps are very stable, so I reckon that this card could hypothetically demand much higher power if it wanted to. | |

| ID: 55856 | Rating: 0 | rate:

| |

"Interestingly, from what I could observe, the overall compute load was much lower than for GPUGrid (ø 70% vs. 100%) and similar strain on the 3D engine. What does/could cause the spike in wattage draw even though I cannot observe what is causing this? Any ideas? I just don't understand how a GPU can draw more power even though overall utilisation is down... What am I missing here? Any pointers appreciated"
The GPU usage reading shows the utilization of the "task scheduler" units of the GPU, not the utilization of the "task execution" units. The latter can be measured by the ratio of the power draw of the card and its TDP. Different apps cause different loads of the different parts of the GPU. Smaller math tasks can even fit into the cache of the GPU chip itself, so it won't interact with its memory much (making the "task execution" units even more busy). The GPUGrid app interacts a lot with the CPU and the GPU memory. | |
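The rule of thumb above (power draw divided by TDP as a proxy for how busy the execution units are) can be applied to the figures reported earlier in the thread. This is only an illustrative sketch: the 60W TDP is the reference value quoted in an earlier post (a later post mentions 65W), and the function name is hypothetical.

```python
def execution_load(power_draw_w, tdp_w):
    """Rough proxy for execution-unit load: power draw as a share of TDP."""
    return power_draw_w / tdp_w


TDP_W = 60.0  # reference TDP quoted earlier in the thread (assumption)

# (reported GPU usage, average power draw in watts) as posted in this thread
workloads = {
    "GPUGrid": (1.00, 38.5),
    "PrimeGrid": (0.70, 55.0),
}

for name, (gpu_usage, draw_w) in workloads.items():
    load = execution_load(draw_w, TDP_W)
    print(f"{name}: GPU usage {gpu_usage:.0%}, power/TDP {load:.0%}")
```

Seen this way the two readings do not contradict each other: the PrimeGrid task shows lower "GPU usage" but keeps the execution units considerably busier.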

| ID: 55864 | Rating: 0 | rate:

| |

|

Thank you Zoltan for your answer. As always much appreciated. | |

| ID: 55923 | Rating: 0 | rate:

| |

Message boards : Graphics cards (GPUs) : General buying advice for new GPU needed!